|

|

| National Information Center on Health Services Research and Health Care Technology (NICHSR) | |

HTA 101: III. PRIMARY DATA AND INTEGRATIVE METHODS

HTA embraces a diverse group of methods that can be grouped into two broad categories. Primary data methods involve collection of original data, ranging from more scientifically rigorous approaches such as randomized controlled trials to less rigorous ones such as case studies. Integrative methods (also known as "secondary" or "synthesis" methods) involve combining data or information from existing sources, including from primary data studies. These can range from quantitative, structured approaches such as meta-analyses or systematic literature reviews to informal, unstructured literature reviews. Cost analysis methods, which can involve one or both of primary data methods and integrative methods, are discussed separately a following section.

Most HTA programs use integrative approaches, with particular attention to formulating findings that are based on distinguishing between stronger and weaker evidence drawn from available primary data studies. Some HTA programs do collect primary data, or are part of larger organizations that collect primary data. It is not always possible to conduct, or base an assessment on, the most rigorous types of studies. Indeed, policies often must be made in the absence, or before completion, of definitive studies. There is no standard methodological approach for conducting HTA. Given their varying assessment orientations, resource constraints and other factors, assessment programs tend to rely on different combinations of methods. Even so, the general trend in HTA is to call for and emphasize the more rigorous methods.

Types of Methodological Validity

Validity refers to whether what we are measuring is what we intend to measure. Methodological designs vary in their ability to produce valid findings. Understanding different aspects of validity helps in comparing alternative methodological designs and interpreting the results of studies using those designs. Although these concepts are usually addressed in reference to primary data methods, they apply as well to integrative methods.

Internal validity refers to the extent to which the findings of a study accurately represent the causal relationship between an intervention and an outcome in the particular circumstances of an investigation. This includes the extent to which a study minimizes any systematic or non-random error in the data.

External validity refers to the extent to which the findings obtained from an investigation conducted under particular circumstances can be generalized to other circumstances. To the extent that the circumstances of a particular investigation (e.g., patient characteristics or the manner of delivering a treatment) differ from the circumstances of interest, the external validity of the findings of that investigation may be questioned.

Face validity is the ability of a measure to represent reasonably a construct (i.e., a concept or domain of interest) as judged by someone with expertise in the health problem and interventions of interest. Content validity refers to the degree to which a measure covers that range of meanings or dimensions of a construct. As noted above, an outcome measure is often used as a marker or surrogate for a disease of interest. For example, how well do changes in prostate specific antigen (PSA) levels represent or predict the risk of prostate cancer? How well does performance on an exercise treadmill represent cardiovascular fitness?

Construct validity is the ability of a measure to correlate with other accepted measures of the construct of interest, and to discriminate between groups known to differ according to the variable. Convergent validity refers to the extent to which two different measures that are intended to measure the same construct do indeed yield similar results. Discriminant validity, opposite convergent validity, concerns whether different measures that are intended to measure different constructs do indeed fail to be positively associated with each other. Concurrent validity refers to the ability of a measure to accurately differentiate between different groups at the time the measure is applied, or the correlation of one measure with another at the same point in time. Predictive validity refers to the ability to use differences in a measure to predict future events or outcomes.

Primary Data Methods

The considerable and diverse array of primary data methods includes, e.g., true experiments such as randomized controlled trials (RCTs) and other controlled trials; other prospective but uncontrolled trials; observational studies such as case-control, cross-sectional studies, and surveillance studies; and simpler designs such as case series and single case reports or anecdotes. These methods can be described and categorized in terms of multiple attributes or dimensions, such as whether they are prospective or retrospective, interventional or observational, controlled or uncontrolled, and other attributes noted below. Some of these methods have alternative names, and many studies employ nearly limitless combinations of these attributes.

Some Fundamental Attributes

Prospective studies are planned and implemented by investigators using real-time data collection. These typically involve identification of one or more patient groups, collection of baseline data, delivering one or more interventions, collecting follow-up data, and comparing baseline to follow-up data for the patient groups. In retrospective studies, investigators collect samples of data from past events (interventions and outcomes) involving one or more patient groups. In an interventional study, investigators prospectively deliver, manipulate, or manage the intervention(s) of interest. In an observational study investigators only monitor or follow an intervention or exposure (e.g., of a risk factor) of interest, but do not themselves intervene in the delivery the intervention or exposure.

Many studies use separate control groups of patients as a basis of comparison to the one or more groups receiving an intervention of interest. Some studies do not use control groups; these uncontrolled studies rely on comparing patient measures before and after an intervention to determine whether the intervention had an effect. Control groups of patients are constituted in ways to ensure as much similarity as possible to patients in intervention groups. Except for the intervention of interest, the treatment and management of control groups and intervention groups are as similar as possible. By comparing changes in intervention groups to changes in control groups, investigators seek to isolate the effect of an intervention on patient outcomes from any effects on patient outcomes from extraneous factors. While most controlled studies used contemporaneous controls alongside (i.e., identified and followed at the same time as) intervention groups, investigators sometimes use historical control groups. In a crossover design study, patients start in one group (intervention or control) and then are switched to the other, thereby acting as their own controls.

Various means are used to ensure that intervention and control groups comprise patients with similar characteristics, so that "baseline" (initial) differences in these groups will not affect (confound) the relative changes in patient outcomes between the groups. Although such means as alternate assignment or using birthdays or identification numbers are used to assign patients to intervention and control groups, assignment based on randomization is preferred because it minimizes opportunities for bias to affect the composition of these groups at baseline. Knowledge of assignment of patients to one group or another, e.g., to a group receiving a new intervention or a group receiving standard care, can itself affect outcomes as experienced by patients and/or assessed by investigators. Therefore, some studies employ blinding of patients, and sometimes of investigators and data analysts, to knowledge of patient assignment to intervention and control groups in an effort to eliminate the confounding effects of such knowledge.

Alternative Methods Offer Tradeoffs in Validity

Although primary study investigators and assessors would prefer to have methods that are both internally and externally valid, they often find that study design attributes that increase one type of validity jeopardize the other. A well designed and conducted RCT is widely considered to be the best approach for ensuring internal validity, as it gives investigators the most control over factors that could confound the causal relationship between the a technology and health outcomes. However, for the reasons that a good RCT has high internal validity, its external validity may be limited.

Most RCTs are designed to investigate the effects of an intervention in specific types of patients so that the relationship between the intervention and outcomes is less likely to be confounded by patient variations. However, findings from an RCT involving a narrowly defined patient group may not be applicable for the same intervention given to other types of patients. Patients allowed to enroll in RCTs are often subject to strict inclusion and exclusion criteria pertaining, e.g., to age, risk factors, and previous and current treatments. This is done for various reasons, including to avoid confounding the treatment effect of the intervention in question by previous or current other treatments, and to limit the extent to which variations in patients' response to a treatment might dilute the treatment effect across the enrolled patient population. As a result, the patient population in an RCT may be less likely to be representative of the desired or potential target population in practice. As noted above, RCTs often involve special protocols of care and testing that may not be characteristic of general care, and are often conducted in university medical centers or other special settings that may not represent the general or routine settings in which most health care is provided.

Findings of some large observational studies (e.g., from large cross-sectional studies or registries) have external validity to the extent that they can provide insights into the types of outcomes that are experienced by different patient groups in different circumstances. However, these less rigorous designs are more subject to certain forms of bias that threaten internal validity, diminishing the certainty with which particular outcomes can be attributed to an intervention. Interesting or promising findings from weaker studies can raise hypotheses that can be tested using stronger studies. The use of "large, simple trials" (discussed below) is an attempt to combine the strengths of RCTs and observational studies.

RCTs Not Best Design for All Questions

While RCTs are the "gold standard" of internal validity for causal relationships, they are not necessarily the best method for answering all questions of relevance to an HTA. As noted by Eisenberg (1999):

"Those who conduct technology assessments should be as innovative in their evaluations as the technologies themselves .... The randomized trial is unlikely to be replaced, but it should be complemented by other designs that address questions about technology from different perspectives."

Other types of studies may be preferred to RCTs for different questions. For example, a good way to describe the prognosis for a given disease or condition may be a set of follow-up studies of patient cohorts at uniform points in the clinical course of a disease. Case control studies are often used to identify risk factors for diseases, disorders, and adverse events. The accuracy of a diagnostic test (as opposed to its ultimate effect on health outcomes) may be determined by a cross-sectional study of patients suspected of having a disease or disorder. Non-randomized trials or case series may be preferred for determining the effectiveness of interventions for otherwise fatal conditions, i.e., where little or nothing is to be gained by comparison to placebos or known ineffective treatments. Surveillance and registries are used to determine the incidence of rare, serious adverse events that may be associated with an interventions. For incrementally modified technologies posing no known additional risk, registries may be appropriate for determining safety and effectiveness.

Collecting New Primary Data

It is beyond the scope of this document to describe the planning, design, and conduct of clinical trials, observational studies, and other investigations for collecting new primary data. There is a considerable and evolving literature on these subjects (Chow 1998; Spilker 1991; Spilker 1995; Rothenberg 2003). Also, there is a literature on priority setting and efficient resource allocation for clinical trials, and cost-effective design of clinical trials (Detsky 1990; Thornquist 1993).

As noted above, compiling evidence for an assessment may entail collection of new primary data. An assessment program may determine that existing evidence is insufficient for meeting the desired policy needs, and that new studies are needed to generate data for particular aspects of the assessment. Once available, the new data can be interpreted and incorporated into the existing body of evidence.

In the US, major units of the NIH such as the National Cancer Institute and the National Heart, Lung and Blood Institute sponsor and conduct biomedical research, including clinical trials. Elsewhere at NIH, the Office of Medical Applications of Research coordinates the NIH Consensus Development Program, but does not collect primary clinical data, although it occasionally surveys physician specialists and other groups for which the NIH assessment reports are targeted. The Veterans Health Administration (VHA) Cooperative Studies Program [http://www.research.va.gov/resdev/programs/csrd/csp.cfm] is responsible for the planning and conduct of large multicenter clinical trials within the VHA, including approximately 60 cooperative studies at any one time. The FDA does not typically conduct primary studies related to the marketing of new drugs and devices; rather, the FDA reviews primary data from studies sponsored or conducted by the companies that make these technologies.

The ability of most assessment programs to undertake new primary data collection, particularly clinical trials, is limited by such factors as programs' financial constraints, time constraints, responsibilities that do not include conducting or sponsoring clinical studies, and other aspects of the roles or missions of the programs. An HTA program may decide not to undertake and assessment if insufficient data are available. Whether or not an assessment involves collection of new primary data, the assessment reports should note what new primary studies should be undertaken to address gaps in the current body of evidence, or to meet anticipated assessment needs.

Health professional organizations and societies, e.g., the American College of Physicians and American College of Cardiology, work almost exclusively from existing data and do not do clinical research. Third-party payers generally do not sponsor clinical studies, but increasingly analyze claims data and other administrative data. Payers have supported trials of new technologies indirectly by paying for care associated with clinical studies of those technologies, or by paying unintentionally for uncovered new procedures that were coded as covered procedures. As noted above, some payers are providing conditional coverage for certain investigational technologies in selected settings in order to compile data that can be used to make more informed coverage decisions. One recent, controversial example, is the multicenter RCT of lung-volume reduction surgery, the National Emphysema Treatment Trial (NETT), funded by the NHLBI and the Centers for Medicare and Medicaid Services (CMS, which administers the US Medicare program) (Fishman 2003; Ramsey 2003).

Primary Data Collection Trends Relevant to HTA

Primary data collection methods have evolved in certain important ways that affect the body of evidence used in HTA. Among these, investigators have made progress in trying to combine some of the desirable attributes of RCTs and observational studies. For example, while retaining the methodological strengths of prospective, randomized design, "large, simple trials" use large numbers of patients, more flexible patient entry criteria and multiple study sites to improve external validity and gain effectiveness data. Also, fewer types of data may be collected for each patient, easing participation by patients and clinicians (Buring 1994; Ellenberg 1992; Peto 1993; Yusuf 1990). Examples of these approaches include large, simple trials supported by NIH; certain large, multicenter RCTs coordinated by the VA Cooperative Studies Program, and "firm trials" involving random assignment of patients and providers to alternative teams to evaluate organizational and administrative interventions (Cebul 1991).

Clinical trials conducted for the purposes of advancing biomedical research or for achieving market clearance by regulatory bodies approval do not necessarily address clinical choices or policy decisions (e.g., coverage policies). The call for "pragmatic" or "practical" clinical trials (PCTs) is intended to meet these needs more directly. Among their main attributes, PCTs (1) select clinically relevant alternative interventions to compare, (2) include a diverse population of study participants, (3) recruit participants from heterogeneous practice settings, and (4) collect data on a broad range of health outcomes. PCTs will require that clinical and health policy decision makers become more involved in priority setting, research design, funding, and other aspects of clinical research (Tunis 2003).

Biomedical research organizations such as NIH and regulatory agencies such as FDA permit certain mid-trial changes in clinical trial protocols such as drug dosage modifications and patient cross-overs to alternative treatment groups to reflect the most recent scientific findings. Selected use of surrogate endpoints, especially biological markers, is employed where these are known to be highly correlated with "hard endpoints" such as morbidity and mortality that may not occur until months or years later. For example, a long-standing surrogate marker for stroke risk is hypertension, although understanding continues to evolve of the respective and joint roles of systolic and diastolic pressures in predicting stroke (Basile 2002). Trials of new drugs for HIV/AIDS use such biological markers as virological (e.g., plasma HIV RNA) and immunological (e.g., CD4+ cell counts) levels (Lalezari 2003).

Streamlining or combining clinical trial phases and "parallel track" availability of technologies to patients outside of ongoing formal RCTs are intended to speed regulatory approval and make technologies available to patients who are ineligible for RCT protocols but have exhausted other treatments. For example, the FDA provides several types of access to investigational treatments. "Emergency use" is allowed in situations where there is a need for an investigational technology in a manner that is not consistent with the approved investigational protocol or by a physician who is not part of the clinical trial, and may occur before FDA approval of the investigational plan (e.g., IND for drugs or IDE for devices). "Compassionate" use allows access for patients who do not meet the requirements for inclusion in an ongoing clinical trial but for whom the treating physician believes the technology may provide a benefit; this usually applies to patients with a serious disease or condition for whom there is no viable alternative treatment. "Treatment use" refers to instances where data collected during a trial indicates that a technology is effective, so that during the trial or prior to full FDA review and approval for marketing, the technology may be provided to patients not in the trial, subject to the other requirements of the trial (e.g., under an IND for drugs or IDE for devices). "Continued access" allows continued enrollment of patients after the trial has been completed, in order to enable access to the investigational technology while the marketing application is being prepared by the sponsor or reviewed by the FDA. Although many of these adaptations were originally instituted for RCTs involving new drug treatments for HIV/AIDS, cancer, and other life-threatening conditions (Merigan 1990), their use in trials of treatments for other conditions is increasing.

Another important type of development in primary data collection is the incorporation of contemporaneous cost data collection in prospective clinical trials. Health care product companies increasingly are using such data in product promotion and to help secure favorable payment decisions (Anis 1998; Henry 1999). The generation of health and economic data are increasingly influenced by technical guidance for data submissions provided by national and regional HTA agencies, particularly in Canada, Europe, and Australia (Hill 2000; Hjelmgren 2001; Taylor 2002).

Integrative Methods

Having considered the merits of individual studies, an assessment group must begin to integrate, synthesize, or consolidate the available findings. For many topics in HTA, there is no single definitive primary study, e.g., that settles whether one technology is better than another for a particular clinical situation. Even where definitive primary studies exist, findings from different types of studies must be combined or considered in broader social and economic contexts in order to formulate policies.

Methods used to combine or integrate data include the following:

- Meta-analysis

- Modeling (e.g., decision trees, Markov models)

- Group judgment ("consensus development")

- Systematic literature review

- Unstructured literature review

- Expert opinion

The biases inherent in traditional means of consolidating literature (i.e., non-quantitative or unstructured literature reviews and editorials) are well recognized, and greater emphasis is given to more structured, quantified and better documented methods. The body of knowledge concerning how to strengthen and apply these integrative methods has grown substantially in recent years. Considerable work has been done to improve the validity of decision analysis and meta-analysis in particular (Eckman 1992; Eddy 1992; Lau 1992). Experience with the NIH Consensus Development Program, the panels on appropriateness of selected medical and surgical procedures conducted by the RAND Corporation, the clinical practice guidelines activities sponsored until the mid-1990s by the former AHCPR (renamed as AHRQ), and others continues to add to the body of knowledge concerning group judgment processes.

Three major types of integrative methods--meta-analysis, decision analysis, and consensus development--are described below.

Meta-analysis

Meta-analysis refers to a group of statistical techniques for combining results of multiple studies to obtain a quantitative estimate of the overall effect of a particular technology (or variable) on a defined outcome. This combination may produce a stronger conclusion than can be provided by any individual study (Laird 1990; Normand 1999; Thacker 1988). The purposes of meta-analysis are to:

- Encourage systematic organization of evidence

- Increase statistical power for primary end points

- Increase general applicability (external validity) of findings

- Resolve uncertainty when reports disagree

- Assess the amount of variability among studies

- Provide quantitative estimates of effects (e.g., odds ratios or effect sizes)

- Identify study characteristics associated with particularly effective treatments

- Call attention to strengths and weaknesses of a body of research in a particular area

- Identify needs for new primary data collection

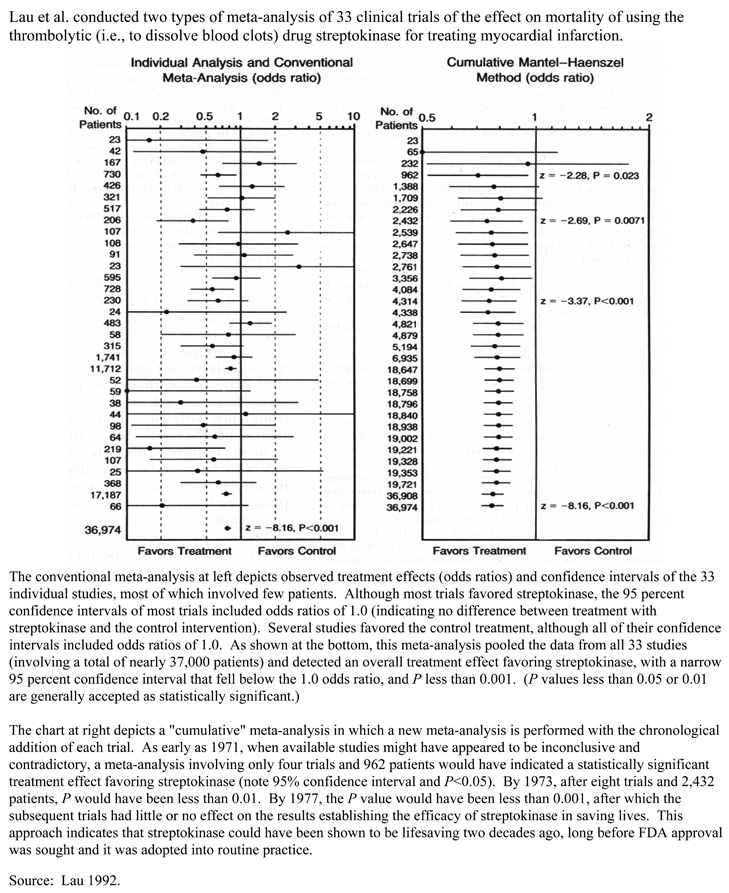

Meta-analysis typically is used for topics that have no definitive studies, including topics for which non-definitive studies are in some disagreement. Evidence collected for assessments often includes studies with insufficient statistical power (e.g., because of small sample sizes) to detect any true treatment effects. By combining the results of multiple studies, a meta-analysis may have sufficient power to detect a true treatment effect if one exists, or at least narrow the confidence interval about the mean treatment effect. Box 13 shows an example of a meta-analysis of thrombolytic therapy.

Although meta-analysis has been applied primarily for treatments, meta-analytic techniques are being extended to diagnostic technologies. As in other applications of meta-analysis, the usefulness of these techniques for diagnostic test accuracy is subject to publication bias and the quality of primary studies of diagnostic test accuracy (Deeks 2001; Hasselblad 1995; Irwig 1994; Littenberg 1993). Although meta-analysis is often applied to RCTs, it may be used for observational studies as well (Stroup 2000).

Meta-Analysis: Clinical Trials of Intravenous Streptokinase for Acute Myocardial Infarction

Source: Lau 1992.

The basic steps in meta-analysis are the following:

- Specify the problem of interest.

- Specify the criteria for inclusion of studies (e.g., type and quality).

- Identify all studies that meet inclusion criteria.

- Classify study characteristics and findings according to, e.g.: study characteristics (patient types, practice setting, etc.), methodological characteristics (e.g., sample sizes, measurement process), primary results and type of derived summary statistics.

- Statistically combine study findings using common units (e.g., by averaging effect sizes); relate these to study characteristics; perform sensitivity analysis.

- Present results.

Some of the particular techniques used in the statistical combination of study findings in meta-analysis are: pooling, effect size, variance weighting, Mantel-Haenszel, Peto, DerSimonian and Laird, and confidence profile method. The suitability of any of these techniques for a group of studies depends upon the comparability of circumstances of investigation, type of outcome variables used, assumptions about the uniformity of treatment effects and other factors (Eddy 1992; Laird 1990; Normand 1999).

The different techniques of meta-analysis have specific rules about whether or not to include certain types of studies and how to combine their results. Some meta-analytic techniques adjust the results of the individual studies to try to account for differences in study design and related biases to their internal and external validity. Special computational tools (e.g., computer software) may be required to make the appropriate adjustments for the various types of biases in a systematic way (Detsky 1992; Moher 1999; van Houwelingen 2002).

Meta-analysis can be limited by poor quality of data in primary studies, publication bias, biased selection of available studies, insufficiently comparable studies selected (or available) for a meta-analysis, and biased interpretation of findings. The quality of RCTs used in meta-analyses can bias results. The results of meta-analyses that are based on sets of RCTs with lower methodological quality tend to show greater treatment effects (i.e., greater efficacy of interventions) than those based on sets of RCTs of higher methodological quality (Moher 1998). However, it is not apparent that any individual quality measures are associated with the magnitude of treatment effects in meta-analyses of RCTs (Balk 2002). As is the case for RCTs, there are instruments for assessing the quality of meta-analyses and systematic reviews (Moher 1999), as shown in Box 14.

The shortcomings of meta-analyses, which are shared by unstructured literature reviews and other less rigorous synthesis methods, can be minimized by maintaining a systematic approach to meta-analysis. Performing meta-analyses in the context of systematic reviews, i.e., that have objective means of searching the literature and applying predetermined inclusion and exclusion criteria to the primary studies used, can diminish the impact of these shortcomings on the findings of meta-analyses (Egger 2001). Compared to the less rigorous methods of combining evidence, meta-analysis can be time-consuming and requires greater statistical and methodologic skills. However, meta-analysis is a much more explicit and accurate method.

Instrument to Assess the Quality of Meta-Analysis Reports

|

Heading |

Subheading |

Descriptor |

Reported Y/N |

Page Number |

|

Title |

Identify the report as a meta-analysis [or systematic review] of RCTs |

|||

|

Abstract |

Use a structured format |

|||

|

Describe |

||||

|

Objectives |

The clinical question explicitly |

|||

|

Data sources |

The databases (ie, list) and other information sources |

|||

|

Review methods |

The selection criteria (ie, population, intervention, outcome, and study design); methods for validity assessment, data abstraction, and study characteristics, and quantitative data synthesis in sufficient detail to permit replication |

|||

|

Results |

Characteristics of the RCTs included and excluded; qualitative and quantitative findings (ie, point estimates and confidence intervals); and subgroup analyses |

|||

|

Conclusion |

The main results |

|||

|

Describe |

||||

|

Introduction |

The explicit clinical problem, biological rationale for the intervention, and rationale for review |

|||

|

Methods |

Searching |

The information sources, in detail (eg, databases, registers, personal files, expert informants, agencies, hand-searching), and restrictions (years considered, publication status, language of publication) |

||

|

Selection |

The inclusion and exclusion criteria (defining population, intervention, principal outcomes, and study design |

|||

|

Validity assessment |

The criteria and process used (eg, masked conditions, quality assessment, and their findings) |

|||

|

Data abstraction |

The process or processes used (eg, completed independently, in duplicate) |

|||

|

Study characteristics |

The type of study design, participants' characteristics, details of intervention, outcome definitions, &c, and how clinical heterogeneity was assessed |

|||

|

Quantitative data synthesis |

The principal measure of effect (eg, relative risk), method of combining results (statistical testing and confidence intervals), handling of missing data; how statistical heterogeneity was assessed, a rationale for any a-priori sensitivity and subgroup analyses; and any assessment of publication bias |

|||

|

Results |

Trial Flow |

Provide a meta-analysis profile summarizing trial flow |

||

|

Study characteristics |

Present descriptive data for each trial (eg, age, sample size, intervention, dose, duration, follow-up period) |

|||

|

Quantitative data synthesis |

Report agreement on the selection and validity assessment; present simple summary results (for each treatment group in each trial, for each primary outcome); present data needed to calculate effect sizes and confidence intervals in intention-to-treat analyses (eg, 2x2 tables of counts, means and SDs, proportions) |

|||

|

Discussion |

Summarise key findings; discuss clinical inferences based on internal and external validity; interpret the results in light of the totality of available evidence; describe potential biases in the review process (eg, publication bias); and suggest a future research agenda |

Source: Moher 1999.

Even though many assessments still tend to rely on overall subjective judgments and similar less rigorous approaches of integrating evidence, there is a clear trend toward learning about and using more meta-analytic approaches. An assessment group that uses the inclusion/exclusion rules and other stipulations of meta-analysis is likely to conduct a more thorough and credible assessment, even if the group decides not to perform the final statistical consolidation of the results of pertinent studies.

More advanced meta-analytic techniques are being applied to assessing health technologies, e.g., involving multivariate treatment effects, meta-regression, and Bayesian methods (van Houwelingen 2002). As meta-analysis and other structured literature syntheses are used more widely in evaluating health care interventions, methodological standards for conducting and reporting meta-analyses are rising (Egger, Davey Smith 2001, Moher 1999, Petitti 2001).

Modeling

Quantitative modeling is used to evaluate the clinical and economic effects of health care interventions. Models are used to answer "What if?" questions. That is, they are used to represent (or simulate) health care processes or decisions and their impacts under conditions of uncertainty, such as in the absence of actual data or when it is not possible to collect data on all potential conditions, decisions, and outcomes. For example, decision analytic modeling is used to represent the sequence of clinical decisions and its health and economic impacts. Economic modeling can be used to estimate the cost-effectiveness of alternative technologies for a given health problem.

By making informed adjustments or projections of existing primary data, modeling can help account for patient conditions, treatment effects, and costs that are not present in primary data. This may include bridging efficacy findings to estimates of effectiveness, and projecting future costs and outcomes.

Among the main types of techniques used in quantitative modeling are decision analysis (described below), Markov model process, Monte Carlo simulation, survival and hazard functions, and fuzzy logic (Tom 1997). A Markov model (or chain) is a way to represent and quantify changes from one state of health to another. A Monte Carlo simulation uses sampling from random number sequences to assign estimates to parameters with multiple possible values, e.g., certain patient characteristics (Caro 2002; Gazelle 2003).

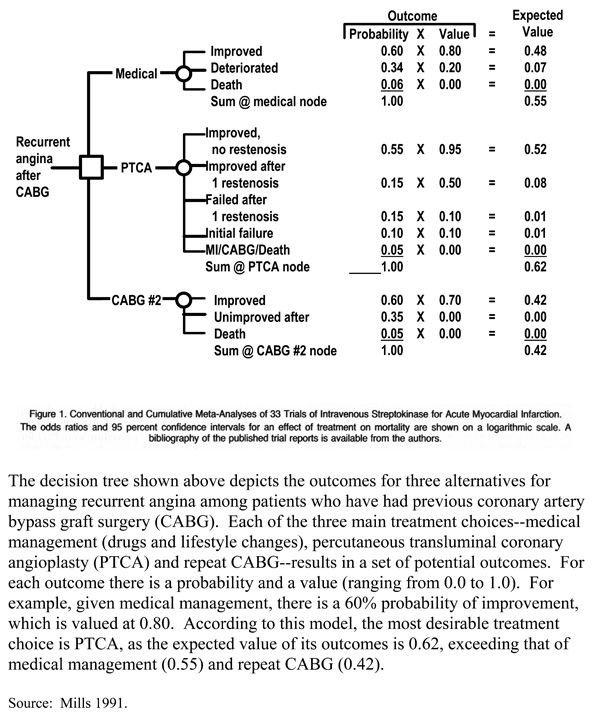

Decision analysis uses available quantitative estimates to represent (model or simulate) the sequences of alternative strategies (e.g., of diagnosis and/or treatment) in terms of the probabilities that certain events and outcomes will occur and the values of the outcomes that would result from each strategy (Pauker 1987; Thornton 1992). Decision models often are shown in the form of "decision trees" with branching steps and outcomes with their associated probabilities and values. Various software programs may be used in designing and conducting decision analyses, accounting for differing complexity of the strategies, extent of sensitivity analysis, and other quantitative factors.

Decision models can be used in different ways. They can be used to predict the distribution of outcomes for patient populations and associated costs of care. They can be used as a tool to support development of clinical practice guidelines for specific health problems. For individual patients, decision models can be used to relate the likelihood of potential outcomes of alternative clinical strategies, and/or to identify the clinical strategy that has the greatest utility for a patient. Decision models are also used to set priorities for HTA (Sassi 2003).

The basic steps of decision analysis are:

- Develop a model (e.g., a decision tree) that depicts

the set of important choices (or decisions) and potential

outcomes of these choices. For treatment choices, the

outcomes may be health outcomes (health states); for

diagnostic choices, the outcomes may be test results (e.g.,

positive or negative).

- Assign estimates (based on available literature) of the probabilities (or magnitudes) of each potential outcome given its antecedent choices.

- Assign estimates of the value of each outcome to

reflect its utility or desirability (e.g., using a HRQL

measure or QALYs).

- Calculate the expected value of the outcomes associated with the particular choice(s) leading to those outcomes. This is typically done by multiplying the set of outcome probabilities by the value of each outcome.

- Identify the choice(s) associated with the greatest

expected value. Based on the assumptions of the decision

model, this is the most desirable choice, as it provides

the highest expected value given the probability and value

of its outcomes.

- Conduct a sensitivity analysis of the model to determine if plausible variations in the estimates of probabilities of outcomes or utilities change the relative desirability of the choices. (Sensitivity analysis is used because the estimates of key variables in the model may be based on limited data or simply expert conjecture.)

Box 15 shows an example of a decision tree for alternative therapies for managing recurrent angina following coronary artery bypass graft surgery. A limitation of modeling with decision trees is representing recurrent health states (e.g., recurrent complications or stages of a chronic disease). An alternative approach is to use state-transition models that use probabilities of moving from one state of health to another, including remaining in a given state or returning to it after intervening health states. Markov modeling is a commonly used type of state-transition modeling.

The assumptions and estimates of variables used in models should be validated against actual data as it becomes available, and the models should be modified accordingly. Modeling should incorporate sensitivity analyses to quantify the conditional relationships between model inputs and outputs.

Models and their results are only aids to decision-making, not statements of scientific, clinical, or economic fact. The report of any modeling study should carefully explain and document the assumptions, data sources, techniques, and software. Modelers should make clear that the findings of a model are conditional upon these components. The use of decision modeling in cost-effectiveness analysis in particular has advanced in recent years, with development of checklists and standards for these applications (Gold 1996; Soto 2002; Weinstein 2003).

Consensus Development

In various forms, group judgment or consensus development is used to set standards, make regulatory recommendations/decisions, make payment recommendations/policies, make technology acquisition decisions, formulate practice guidelines, define the state-of-the-art, and other purposes. "Consensus

Decision Tree: Management of Angina After Coronary Artery Bypass Surgery

Source: Mills 1991.

development" can refer to discrete group processes or techniques that contribute to an assessment, such as the nominal group technique or Delphi method; it also can refer to particular consensus development approaches (e.g., the consensus development conferences conducted by the NIH).

In contrast to the quantitative synthesis methods of meta-analysis and decision analysis, consensus development is generally qualitative in nature. It may be unstructured and informal, or it may involve formal group methods such as the nominal group technique and Delphi technique (Fink 1984; Gallagher 1993; Jairath 1994). Although these processes typically involve face-to-face interaction, someconsensus development efforts combine remote, iterative interaction of panelists (as in the formal Delphi technique) with face-to-face meetings. Computer conferencing and related telecommunications approaches also are used. There is a modest but growing body of literature on consensus development methods in HTA. For example, one review examined the factors affecting the findings of these processes, including selection of topics and questions, selection of participants, choosing and preparing the scientific evidence, structuring the interaction among participants, and methods for synthesizing individual judgments. Findings and associated support regarding methods of synthesizing individual judgments into consensus are summarized in Box 16.

Consensus Development: Findings and Associated Support Regarding Methods of Synthesizing Individual Judgments

- An implicit approach to aggregating individual judgments may be adequate for establishing broad policy guidelines. More explicit methods based on quantitative analysis are needed to develop detailed, specific guidelines. [C]

- The more demanding the definition of agreement, the more anodyne [bland or noncontroversial] the results will be. If the requirement is too demanding, either no statements will qualify or those that do will be of little interest. [C]

- Differential weighting of individual participants' views produces unreliable results unless there is a clear empirical basis for calculating the weights. [B]

- The exclusion of individuals with extreme views (outliers) can have a marked effect on the content of guidelines. [A]

- There is no agreement as to the best method of mathematical aggregation. [B]

- Reports of consensus development exercises should include an indication of the distribution or dispersal of participants' judgments, not just the measure of central tendency. In general, the median and the inter-quartile range are more robust than the mean and standard deviation. [A]

The extent to which research support exists for any conclusion is indicated, although these should not necessarily be considered as a hierarchy: A = clear research evidence; B = supporting research evidence; C = experienced common-sense judgment.

Source: Murphy 1998.

Virtually all HTA efforts involve consensus development at some juncture, particularly to formulate findings and recommendations. Consensus development also can be used for ranking, such as to set assessment priorities, and rating. For example, RAND has used a two-stage modified Delphi process (first stage, independent; second stage, panel meeting) in which expert panels rate the appropriateness of a procedure (e.g., tympanostomy tubes for children) for each of many possible patient indications on a scale of 1.0 (extremely inappropriate) to 9.0 (extremely appropriate) (Kleinman 1994).

The opinion of an expert committee concerning, e.g., the effectiveness of a particular intervention, does not in itself constitute strong evidence. Where the results of pertinent, rigorous scientific studies exist, these should take precedence. In the absence of strong evidence, and where practical guidance is needed, expert group opinion can be used to infer or extrapolate from the limited available evidence. Where many assessment efforts are deficient is not making clear where the evidence stops and where the expert group opinion begins.

Consensus development programs typically embrace most of the steps of HTA described here. In these programs, the consensus development conference usually spans at least three of the HTA steps: interpret evidence, integrate evidence, and formulate findings and recommendations. Increasingly, consensus development efforts start with presentations of previously compiled evidence reports.

Many current consensus development programs in the US and around the world are derived from the model of consensus development conference originated at the US NIH in 1977 as part of an effort to improve the translation of NIH biomedical research findings to clinical practice. NIH has modified and experimented with its process over the years. As of late 2003, NIH had held 120 consensus development conferences. (NIH also has conducted about 25 state-of-the-science conferences, using a similar format to its consensus development conferences.) Australia, Canada, Denmark, France, Israel, Japan, The Netherlands, Spain, Sweden and the UK are among the countries that use various forms of consensus development programs to evaluate health technologies. Consensus conferences also are held jointly by pairs of countries and by international health organizations. Although they originally may have been modeled after the US program, these programs have evolved to meet the needs of their respective national environments and sponsoring organizations (McGlynn 1990).

The variety in consensus development programs can be described and compared along several main types of characteristics, as follows.

- Context of the process: e.g., intended audience, topics and impacts addressed, topic selection

- Pre-panel preparation: e.g., responsibility for planning, evidence preparation, prior drafting of questions and/or recommendations

- Panel composition: e.g., panel size, selection, types of expertise/representation, characteristics of chairperson

- Consensus conference attributes: e.g., length of conference, public involvement, private panel sessions, definition of consensus, decision-making procedures (such as voting), process for handling disagreement, format and dissemination of final product

Among most programs, preparation for conferences takes approximately one year. Some programs prepare assessment questions and draft a consensus statement prior to the consensus conference; other programs do not. Most programs assemble compilations of evidence and share this in advance some form with the panelists; in some instances, this involves providing systematic literature reviews with specific review instructions to panelists weeks in advance of the conference. Programs usually provide for speakers to present the evidence during the consensus conference. Most programs select panels of 9-18 members, including clinicians, scientists and analysts, and lay people, with varying attention to balancing panels for members' known positions on the assessment issues. In most instances, the conference is held over a two-to-three day period, although others have multiple meetings over longer periods of time. Programs generally provide for part or all of the conference to be held in a public forum. Some programs also conduct evaluations of their programs on such matters as impact of conference findings and panelist satisfaction with the process (Ferguson 2001; Thamer 1998).

In general, the advantages of consensus development processes are that they:

- Provide a focus for assembling experts on an assessment topic

- Provide a means for participation of lay people

- Are relatively inexpensive and less time-consuming compared to new primary data collection

- Provide a good way to call public attention to HTA

- Increase exposure of participants and the public to relevant evidence

- Can prompt face-to-face, evidence-based resolution of opposing viewpoints

- Can apply expert judgment in areas where data are insufficient or inconclusive

In general, the disadvantages of consensus development processes are that they:

- Do not generate new scientific evidence

- May appear to offer veracity to viewpoints that are not supported by evidence

- May over-emphasize or inhibit viewpoints depending upon the stature or personalities of the participants

- May be structured to force or give the appearance of group agreement when it does not exist

- Are difficult to validate

Various reports have made recommendations concerning how to strengthen consensus development programs in particular or in general (Goodman 1990; Institute of Medicine 1990; Olsen 1995). A synopsis of these recommendations is shown in Box 17.

Consensus development programs are not immune to the economic, political, and social forces that often serve as barriers or threats to evidence-based processes. Organizations that sponsor consensus development conferences may do so because they have certain expectations for the findings of these processes, and may find themselves at odds with evidence-based findings. Other stakeholders, including from industry, biomedical research institutions, health professions, patient groups, and politicians seeking to align themselves with certain groups, may seek to pressure consensus development panelists or even denounce a panel's findings in order to render desired results, the evidence notwithstanding. An infamous instance of such political reaction occurred in connection with the NIH Consensus Development Conference on Breast Cancer Screening for Women Ages 40 to 49, sponsored by the National Cancer Institute in 1997 (Fletcher 1997).

Strategies for Better Consensus Development Programs

- Programs, or their sponsoring organizations, should have the ability to disseminate and/or implement their consensus findings and recommendations.

- For each assessment, programs and/or panels should identify the intended audiences and means for achieving intended impact of consensus reports.

- Programs should describe their scope of interest and/or responsibility, including their purposes, topics, and technological properties or impacts of concerns for the program in general and for specific assessments.

- Programs should conduct assessments and provide reports in a timely fashion, including the timeliness of assessments relative to the selected topics and the timeframe for planning, conducting, and reporting of assessments.

- Programs should document the procedures and criteria for selecting conference topics and panel members.

- The topic and scope of each assessment should be specific and manageable, i.e., commensurate with the available evidence, time, and other resources.

- Panelists should represent the relevant health professions, methodologists such as epidemiologists and biostatisticians, economists, administrators, patient or other consumer representatives, and others who can provide relevant perspectives. Chairpersons should be recognized as objective with regard to consensus topics and skilled in group processes.

- Programs should compile the available evidence concerning the assessment topics, and provide a systematic compilation or synthesis of this to panelists prior to the conference.

- Programs should provide basic guidance concerning the interpretation of evidence, to help ensure that all panelists can be involved in this activity, regardless of their formal expertise in this area.

- The consensus development processes should be structured and documented, including, e.g., advance identification of key questions/issues, operational definition of consensus, systematically organized evidence, opportunity for equitable participation of panelists, and duration and spacing of sessions to facilitate panelists' full and alert participation.

- Consensus reports should include at least: description of the consensus process used, notations regarding the strength of agreement or assurance of the panel's findings, description of the reasoning used by the panel and the evidential basis for its findings, recommendations for research needed to address unresolved issues and otherwise advance understanding of the topic.

- Programs should monitor new developments that might justify reassessments.

- Programs should provide for periodic, independent evaluation of the program and its impacts.

Adapted from: Goodman 1990.

Previous Section Next Section Table of Contents NICHSR Home Page

Last reviewed: 23 January 2008

Last updated: 23 January 2008

First published: 19 August 2004

Metadata| Permanence level: Permanent: Stable Content