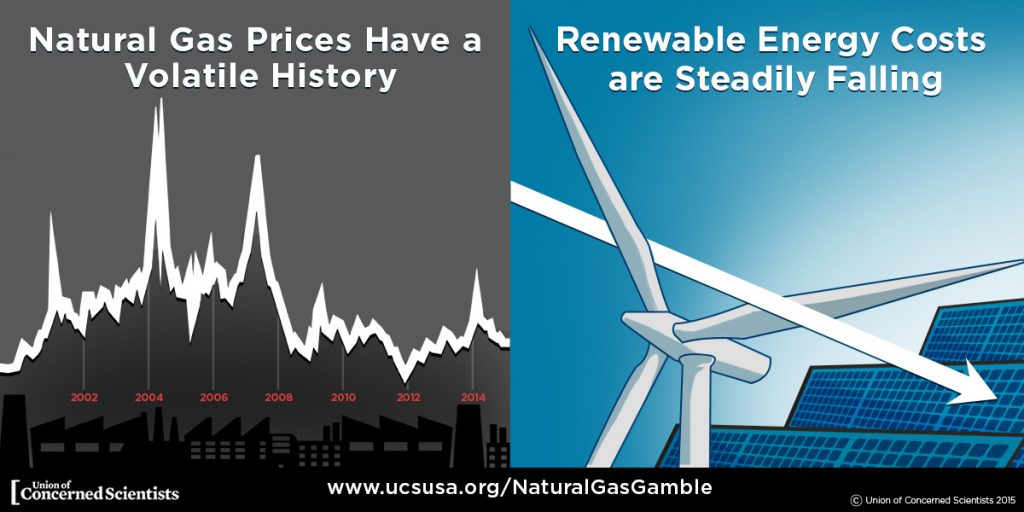

During the campaign, President-elect Trump promised to revive the coal industry. As others have reported, gutting EPA regulations designed to protect public health and the climate will do little to revive the industry, since the recent decline in coal-fired electricity generation is primarily due to low natural gas prices and falling costs for wind and solar power. Ironically, rolling back regulations on the oil and natural gas industry would likely make it even more difficult for coal to compete economically.

Trump has also supported the development of so-called “clean coal” as a way to revive the industry. A key component of making coal cleaner is carbon capture and storage (CCS): capturing the carbon dioxide (CO2) emitted when coal is burned in power plants or industrial facilities, then transporting the CO2 and storing it underground so that it cannot add to the atmospheric build-up of carbon emissions that is driving climate change. While UCS supports CCS as a potential climate solution, we believe the term “clean coal” is an oxymoron because of the other environmental and public health impacts of using coal across its fuel cycle.

CCS is not a new idea. The Obama Administration invested about $4.8 billion in CCS, and the Bush Administration spent millions on R&D, tax credits, and loan guarantees for CCS. Since the primary reason to pursue CCS is to reduce carbon emissions, one big question is whether Trump will support funding for CCS if he truly believes climate change is a hoax.

With the Petra Nova project in Texas beginning commercial operation on January 10, and the Kemper project in Mississippi currently scheduled to go online by January 31, coal with CCS technology has reached an important milestone.

Both projects received federal incentives from the U.S. Department of Energy (DOE) that were vitally important to their development. Below I discuss some key lessons learned from these projects, the implications for future projects if Rick Perry becomes DOE Secretary, and the longer-term outlook for CCS as a potential climate solution.

Petra Nova post-combustion CO2 capture project at an existing pulverized coal plant near Houston, TX. Source: NRG.

The tale of two CCS projects

The Petra Nova and Kemper projects have a few similarities and several differences that offer valuable lessons and insights for future CCS projects. Both projects will inject CO2 into depleted oil fields for enhanced oil recovery (EOR), sequestering the CO2 while increasing pressure at the aging field to produce more oil and help offset costs. However, the projects use different generation and capture technologies, burn different types of coal, and have considerably different costs (see Table 1).

Petra Nova is a 240 megawatt (MW) post-combustion capture facility installed at an existing pulverized coal plant near Houston that’s designed to capture 90 percent of the total CO2 emissions. A joint venture between NRG and JX Nippon Oil & Gas Exploration, the plant uses low-sulfur, sub-bituminous coal from the Powder River Basin in Wyoming.

Petra Nova was completed on time and on budget in 2 years, at a cost of $1 billion. It will also receive up to $190 million in incentives from DOE’s Clean Coal Power Initiative. In addition, NRG and JX Nippon hold equity stakes in the oil field where they will be doing EOR, allowing them to capture more of the project’s value.

Kemper is a new 582 MW integrated gasification combined cycle (IGCC) power plant in Kemper County, Mississippi, that’s designed to capture 65 percent of the project’s CO2 emissions before combustion, when the CO2 is still in a relatively concentrated and pressurized form. Owned by Mississippi Power (a subsidiary of Southern Company), the plant will use low-grade lignite coal from a mine next to the plant.

Kemper has been under construction for 6.5 years, is nearly 3 years behind schedule, and the capital cost has more than tripled from $2.2 billion to over $7 billion. It will receive up to $270 million from DOE’s Clean Coal Power Initiative.

One reason for Kemper’s high cost and delay is that Mississippi Power added CCS to a brand-new coal IGCC power plant, whereas Petra Nova added CCS to an existing pulverized coal plant. Another reason, cited by the New York Times, is Southern Company’s mismanagement of Kemper, including allegations that it drastically understated the project’s cost and timetable and intentionally hid problems.

Southern Company’s December filing with the Securities and Exchange Commission (SEC) shows cost increases of $25-35 million per month from Kemper’s most recent delays. They also lost at least $250 million in tax benefits by not placing the plant into service by December 31, 2016. This is on top of $133 million in tax credits they had to repay the IRS for missing the original May 2014 in-service deadline. In November, they also increased the estimated first year non-fuel operations and maintenance expenses by $68 million.

Customers could pay up to $4.2 billion for Kemper under a cost cap set by the Mississippi Public Service Commission. Southern Company shareholders will absorb nearly $2.7 billion of the cost overruns.
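The customer cap and the shareholder share can be checked against Kemper’s total capital cost with a few lines of Python. This is a rough sketch: both inputs are approximate (“up to” and “nearly”), so the sum should only land near the reported total, and the constant and function names are illustrative.

```python
# Rough consistency check on how Kemper's cost is shared. Both inputs are
# approximate, so the total should only land near the reported capital cost.

CUSTOMER_CAP = 4.2e9       # dollars; Mississippi PSC cap on what customers pay
SHAREHOLDER_SHARE = 2.7e9  # dollars; overruns absorbed by Southern Company shareholders


def cost_split(customer: float, shareholder: float) -> dict[str, float]:
    """Return the combined total and each party's fraction of it."""
    total = customer + shareholder
    return {
        "total": total,
        "customer_fraction": customer / total,
        "shareholder_fraction": shareholder / total,
    }


split = cost_split(CUSTOMER_CAP, SHAREHOLDER_SHARE)
print(f"total: ${split['total'] / 1e9:.1f} billion")
print(f"customers: {split['customer_fraction']:.0%}, "
      f"shareholders: {split['shareholder_fraction']:.0%}")
```

The two shares sum to about $6.9 billion, consistent with the “over $7 billion” total, with customers bearing roughly 61 percent and shareholders roughly 39 percent.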

Kemper is also having a negative impact on Fitch’s ratings for Southern Company. In contrast, Fitch recently upgraded Cooperative Energy (formerly the South Mississippi Electric Power Association) from A- to A after the utility pulled out of its 15 percent ownership stake in the project and shifted from coal to natural gas and renewables.

Table 1. Comparison of Kemper and Petra Nova Coal CCS Projects

| | Kemper | Petra Nova |
|---|---|---|
| Type of power plant | New integrated gasification combined cycle plant | Existing pulverized coal plant retrofit |
| Generation capacity | 582 MW | 240 MW |
| Ownership | Mississippi Power and KBR | NRG and JX Nippon Oil & Gas Exploration |
| Power plant location | Kemper County, Mississippi | Near Houston, TX |
| Type of coal | Mississippi lignite | WY Powder River Basin sub-bituminous, low sulfur |
| CO2 capture rate | 65% | 90% |
| CO2 capture volume | 3.5 million tons/yr | 1.6 million tons/yr |
| CO2 capture type | Pre-combustion | Post-combustion |
| CO2 capture method | Physical solvent-based absorption (Selexol) | Chemical solvent-based absorption (amine) |
| CO2 storage | Enhanced oil recovery | Enhanced oil recovery |
| CO2 transportation | 61-mile pipeline to storage site | 82-mile pipeline to storage site |
| Construction period | 6.5 years | 2 years |
| Schedule delay | 3 years | No delay |
| Original capital cost | $2.2 billion ($3,780/kW) | $1 billion ($4,167/kW) |
| Final capital cost | $7 billion ($12,027/kW) | $1 billion ($4,167/kW) |
| DOE incentives | $270 million | $190 million |

Sources: MIT, Global CCS Institute, NRG, Kemper project site.
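As a quick check on the $/kW figures in Table 1, the sketch below divides each capital cost by the plant’s capacity in kilowatts. The inputs are copied from the table; the function and variable names are illustrative, not taken from any of the cited sources.

```python
# Recompute Table 1's $/kW figures from capital cost and generation capacity.

projects = {
    # name: (capital cost in dollars, capacity in MW), copied from Table 1
    "Kemper, original estimate": (2.2e9, 582),
    "Kemper, final": (7.0e9, 582),
    "Petra Nova": (1.0e9, 240),
}


def cost_per_kw(capital_cost: float, capacity_mw: float) -> float:
    """Dollars per kW: capital cost divided by capacity (1 MW = 1,000 kW)."""
    return capital_cost / (capacity_mw * 1_000)


for name, (cost, mw) in projects.items():
    print(f"{name}: ${cost_per_kw(cost, mw):,.0f}/kW")
```

Rounded to whole dollars, this reproduces the table’s $3,780, $12,027, and $4,167 per kW. It also shows that Kemper’s original estimate was cheaper per kW than Petra Nova, because its larger budget was spread over more than twice the capacity.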

How many CCS demonstration projects are needed?

In 2008, a UCS report titled Coal Power in a Warming World called for building 5-10 full-scale integrated CCS demonstration projects at coal-fired plants in the U.S. to test different generation and capture technologies and to store CO2 at different sequestration sites. Our recommendations were consistent with those in MIT’s 2007 Future of Coal report and a 2007 report by the Pew Center on Global Climate Change. In 2009, former DOE Secretary Steven Chu set a goal for the U.S. to have 10 CCS demonstration projects in service by 2016. In 2010, the White House Interagency Carbon Capture and Storage Task Force also recommended bringing 5 to 10 commercial demonstration projects online by 2016.

Unfortunately, the U.S. is lagging behind these targets. While 8 large-scale CCS projects are currently operating in the U.S., Petra Nova is the only one at a power plant, according to the Global CCS Institute. Besides Kemper, the only other power plant project listed as under development is the Texas Clean Energy Project, which recently lost its DOE funding because of escalating costs and missed deadlines (see more below). The high-profile FutureGen project in Illinois was also cancelled after DOE discontinued funding in 2015 because of cost increases and construction delays.

The 110 MW Boundary Dam project in Canada is the only large-scale coal power plant with CCS currently operating outside the U.S. After encountering several problems during its first year of operation that reduced its capture rate from 90 percent to 40 percent, the project performed much better in 2016. Petra Nova and Kemper will likely have to go through a similar teething process to work out bugs in the technology before they can be declared successes.
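To put Boundary Dam’s first-year problems in perspective, the sketch below works out what a fall from a 90 percent to a 40 percent capture rate means. Results are expressed as fractions of the plant’s uncontrolled CO2, so no emissions baseline has to be assumed; the function name is hypothetical.

```python
# Effect of a lower-than-design capture rate, as fractions of the plant's
# uncontrolled CO2 (so no emissions baseline is needed).


def capture_shift(design_rate: float, actual_rate: float) -> tuple[float, float]:
    """Return (fractional drop in captured CO2, multiplier on CO2 vented)."""
    if not 0 <= actual_rate <= design_rate < 1:
        raise ValueError("need 0 <= actual_rate <= design_rate < 1")
    captured_drop = 1 - actual_rate / design_rate
    vented_multiplier = (1 - actual_rate) / (1 - design_rate)
    return captured_drop, vented_multiplier


loss, mult = capture_shift(0.90, 0.40)  # Boundary Dam's design vs. first-year rate
print(f"captured CO2 down {loss:.0%}, vented CO2 up {mult:.0f}x")
```

Captured CO2 falls by about 56 percent (1 - 0.4/0.9), while CO2 released to the atmosphere rises sixfold, from 10 percent to 60 percent of the uncontrolled total.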

Eight more power plant CCS projects are in different stages of development in China, South Korea, the United Kingdom, and the Netherlands.

Rick Perry’s support for coal

Under the Obama Administration, DOE’s Office of Fossil Energy and the National Energy Technology Laboratory (NETL) have administered a robust Carbon Capture R&D program. The program’s primary goal is to lower the cost and energy penalty of second-generation CCS technologies, reaching a cost of captured CO2 below $40/tonne in the 2020-2025 timeframe. Given Perry’s record of supporting coal as Governor of Texas, there’s a good chance he would support continued R&D funding for coal with CCS projects if he becomes DOE Secretary. Here are a few examples of that record:

- In 2005, Perry issued a controversial executive order to fast-track the permitting process for 11 coal plants (without CCS) proposed by TXU, now called Energy Future Holdings. UCS and other groups strongly opposed this coal build-out, which would have been disastrous for the climate. Only 3 of the plants were ultimately built.

- In 2002, he supported setting up a clean coal technology council in Texas.

- In 2009, he signed a bill with tax incentives for clean coal to support projects like the Texas Clean Energy Project, a 400 MW coal gasification with CCS project in West Texas proposed by Summit Power Group. After missing several key deadlines and with the cost nearly doubling to $4 billion, the DOE discontinued funding in May 2016 (after spending $167 million) and asked Congress to reprogram $240 million of the incentives to other R&D efforts. Summit Power said this move would basically kill the project.
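The Carbon Capture R&D program’s target is expressed as a cost of captured CO2 in dollars per tonne. A simplified sketch of that kind of metric divides annualized capital plus yearly operating costs by the tonnes captured each year. This is not DOE/NETL’s actual costing methodology, which is considerably more detailed, and every input in the example call (capital cost, operating cost, tonnage, discount rate, lifetime) is a hypothetical value chosen only for illustration.

```python
# Simplified cost-of-captured-CO2 sketch (not DOE/NETL's methodology):
# annualized capital plus yearly operating costs, divided by tonnes captured.
# All inputs in the example call are hypothetical.


def capital_recovery_factor(rate: float, years: int) -> float:
    """Share of an up-front cost repaid each year over `years` at discount `rate`."""
    if rate == 0:
        return 1 / years
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def cost_per_tonne_captured(capital: float, annual_opex: float,
                            tonnes_per_year: float, rate: float, years: int) -> float:
    """Dollars per tonne of CO2 captured."""
    annualized_capital = capital * capital_recovery_factor(rate, years)
    return (annualized_capital + annual_opex) / tonnes_per_year


TARGET = 40.0  # $/tonne, the R&D program goal for the 2020-2025 timeframe

cost = cost_per_tonne_captured(
    capital=600e6,          # hypothetical capture-system capital cost, dollars
    annual_opex=30e6,       # hypothetical O&M plus energy-penalty cost, dollars/yr
    tonnes_per_year=1.5e6,  # hypothetical CO2 captured, tonnes/yr
    rate=0.08,              # hypothetical discount rate
    years=30,               # hypothetical project lifetime, years
)
status = "meets" if cost <= TARGET else "misses"
print(f"${cost:.0f}/tonne {status} the ${TARGET:.0f}/tonne target")
```

With these made-up inputs the result lands in the mid-$50s per tonne, above the target. The structure shows the levers the program is aiming at: lower capital cost, a smaller energy penalty (part of operating cost), or more tonnes captured from the same equipment.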

The high cost of coal with CCS

CCS advocates often dismiss the high costs of recent projects, arguing that this is expected for first-of-a-kind projects. They claim costs should come down over time through learning, pointing to other technologies like wind and solar as examples.

While it is reasonable to expect CCS costs to come down, the questions are how much and over what time period. Like nuclear power plants, CCS projects tend to be very large, long-lived construction projects that use a lot of concrete and steel, along with equipment that is unlikely to be mass-produced. More modular technologies like wind turbines and solar panels, by contrast, are manufactured in large volumes and installed over a much shorter period of time.
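The learning argument can be made concrete with a standard experience-curve (Wright’s law) model, in which unit cost falls by a fixed percentage, the learning rate, every time cumulative deployment doubles. The sketch assumes the same hypothetical 10 percent learning rate for both cases and hypothetical unit counts; the point is that a technology built a few large units at a time completes far fewer doublings than one built from mass-produced modules.

```python
import math

# Experience-curve (Wright's law) sketch: unit cost falls by a fixed fraction,
# the learning rate, each time cumulative deployment doubles.
# The learning rate and unit counts are hypothetical.


def cost_after(initial_cost: float, initial_units: float,
               cumulative_units: float, learning_rate: float) -> float:
    """Cost after deployment grows from initial_units to cumulative_units."""
    doublings = math.log2(cumulative_units / initial_units)
    return initial_cost * (1 - learning_rate) ** doublings


LEARNING_RATE = 0.10  # hypothetical 10% cost drop per doubling, same for both cases

# A few large plants: growing from 2 to 16 units is 3 doublings.
few_large = cost_after(100.0, 2, 16, LEARNING_RATE)
# Mass-produced modules: growing from 1,000 to 1,024,000 units is 10 doublings.
many_small = cost_after(100.0, 1_000, 1_024_000, LEARNING_RATE)

print(f"few large units: {few_large:.1f}% of initial cost")
print(f"many small units: {many_small:.1f}% of initial cost")
```

Under these assumptions, three doublings leave costs at about 73 percent of the starting point, while ten doublings bring them to about 35 percent. Real learning rates for CCS are uncertain and are not taken from the sources cited here.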

Several recent studies project the cost of coal with CCS to be much higher than that of many other low- and zero-carbon technologies. For example, the Energy Information Administration’s (EIA) projections from Annual Energy Outlook 2016 show 2022 costs for coal with CCS plants that are 2-3 times higher than the cost of new onshore wind, utility-scale solar, geothermal, and hydropower projects, not including tax incentives (see Table 2 on p. 8). Biopower, advanced nuclear, and natural gas combined cycle (NGCC) plants with CCS are also projected to cost somewhat less than coal with CCS. While EIA projects the costs for coal with CCS plants to decline about 10 percent by 2040, it projects the costs for other low-carbon technologies to fall by similar or even greater amounts.

Source: EIA, AEO 2016.
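Because both sides of the comparison are getting cheaper, a 10 percent decline for coal with CCS does not by itself narrow the gap. The sketch below applies the 2-3x starting ratio and the roughly 10 percent decline from the EIA projections; the 10 and 20 percent declines for the competing technology are hypothetical stand-ins for “similar or even greater amounts,” not EIA figures.

```python
# How a cost ratio changes when both technologies get cheaper.
# Starting ratios (2-3x) and the ~10% coal-with-CCS decline follow the EIA
# projections in the text; the declines for the alternative are hypothetical.


def future_ratio(start_ratio: float, ccs_decline: float, other_decline: float) -> float:
    """Coal-with-CCS cost divided by the alternative's cost after both decline."""
    return start_ratio * (1 - ccs_decline) / (1 - other_decline)


for start in (2.0, 3.0):
    for other in (0.10, 0.20):  # hypothetical declines for the alternative
        ratio = future_ratio(start, 0.10, other)
        print(f"start {start:.0f}x, alternative falls {other:.0%}: {ratio:.2f}x")
```

If the alternative falls by the same 10 percent, the ratio is unchanged; if it falls by 20 percent, a 2x gap grows to 2.25x.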

EIA’s cost projections are consistent with other sources including NREL’s 2016 Annual Technology Baseline (ATB) report and Lazard’s most recent levelized cost of energy analysis. They all show that adding CCS to natural gas power plants could be much more economic than coal.
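The EIA, NREL, and Lazard comparisons all rest on the levelized cost of electricity (LCOE), which spreads a plant’s capital cost over its lifetime output and adds operating costs. The sketch below is a simplified version of that calculation: it ignores taxes, financing structure, and cost escalation, which the real analyses include, and every input in the example call is hypothetical.

```python
# Simplified LCOE sketch: annualized capital plus yearly fixed costs, spread over
# yearly output, plus per-MWh variable costs (fuel, variable O&M, and for CCS
# plants, CO2 transport and storage). Inputs in the example call are hypothetical.

HOURS_PER_YEAR = 8_760


def capital_recovery_factor(rate: float, years: int) -> float:
    """Share of an up-front cost repaid each year over `years` at discount `rate`."""
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def lcoe_per_mwh(capital_per_kw: float, fixed_om_per_kw_yr: float,
                 variable_cost_per_mwh: float, capacity_factor: float,
                 rate: float, years: int) -> float:
    """Levelized cost in $/MWh, computed per kW of capacity."""
    mwh_per_kw_yr = capacity_factor * HOURS_PER_YEAR / 1_000
    fixed_per_kw_yr = (capital_per_kw * capital_recovery_factor(rate, years)
                       + fixed_om_per_kw_yr)
    return fixed_per_kw_yr / mwh_per_kw_yr + variable_cost_per_mwh


example = lcoe_per_mwh(
    capital_per_kw=5_000,       # hypothetical capital cost, $/kW
    fixed_om_per_kw_yr=70,      # hypothetical fixed O&M, $/kW-yr
    variable_cost_per_mwh=35,   # hypothetical fuel + variable O&M, $/MWh
    capacity_factor=0.85,       # hypothetical share of the year at full output
    rate=0.07,                  # hypothetical discount rate
    years=30,                   # hypothetical economic lifetime
)
print(f"${example:.0f}/MWh")
```

Capital cost enters through the first term, so the high $/kW figures of first-of-a-kind plants like those in Table 1 flow directly into their levelized cost.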

Other studies show that CCS applications at industrial facilities could also be less expensive. CCS may also be a better fit in industries such as iron and steel and cement production, where affordable low-carbon alternatives don’t exist. In contrast, the power sector has many technologies available today that can generate electricity without carbon emissions.

Role of CCS in addressing climate change

Many experts believe that reaching net zero carbon emissions by mid-century, in line with global climate goals, will likely require some form of CCS, along with nuclear power and a massive ramp-up of renewable energy and energy efficiency. Given the high cost of coal with CCS compared to other alternatives, it’s not surprising that recent studies show it playing a relatively modest role in addressing climate change. However, some studies analyzing the impacts of reducing power sector CO2 emissions 80-90 percent by 2050 show that natural gas or even biopower with CCS could make a more meaningful contribution after 2040. For example:

- A 2016 UCS study showed that natural gas with CCS could provide 9-28 percent of U.S. electricity by 2050 under a range of deep decarbonization scenarios for the power sector, while coal with CCS provided none (see Figure 5 below). Natural gas with CCS provided 16 percent of U.S. electricity by 2050 under a Mid-Cost Case and 28 percent under an Optimistic CCS Cost Case.

- The November 2016 U.S. Mid-Century Strategy for Deep Decarbonization study released by the Obama Administration at the international climate negotiations in Marrakech found that fossil fuels with CCS would provide 20 percent of U.S. electricity generation by 2050 under their “Benchmark” scenario. While natural gas with CCS made the biggest contribution, both coal and bioenergy with CCS also played a role.

- The 2014 Pathways to Deep Decarbonization in the United States report found that gas with CCS would provide nearly 13 percent of U.S. electricity under their “Mixed” case. They also modeled a High CCS case that “seeks to preserve a status quo energy mix,” in which they assumed CCS would provide 55 percent of U.S. electricity by 2050, split between coal and gas. However, this case also had the highest electricity prices.

- DOE’s 2017 Quadrennial Energy Review found that under a scenario combining tax incentives with successful federal R&D, coal and gas with CCS could provide 5-7 percent of total U.S. generation in 2040. The scenario assumed a refundable sequestration tax credit of $10/metric ton of CO2 for EOR storage, $50/metric ton of CO2 for saline storage, and a refundable 30 percent investment tax credit for CCS equipment and infrastructure.

Source: UCS, The U.S. Power Sector in a Net Zero World, 2016.
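To give a sense of scale for the Quadrennial Energy Review scenario’s incentives, the sketch below applies them to a project capturing 1.6 million tons of CO2 per year, Petra Nova’s figure from Table 1. This is purely illustrative: the scenario’s credits did not apply to Petra Nova, the table’s tons are treated as metric tons for simplicity, and the $1 billion used for the investment tax credit is Petra Nova’s total capital cost rather than a verified eligible basis.

```python
# QER scenario incentives applied to an illustrative project.
# Credit rates come from the scenario; the project inputs are illustrative.

CREDIT_PER_TONNE = {"eor": 10.0, "saline": 50.0}  # dollars per metric ton of CO2 stored
ITC_RATE = 0.30  # refundable investment tax credit on CCS equipment and infrastructure


def annual_sequestration_credit(tonnes_per_year: float, storage: str) -> float:
    """Yearly credit in dollars for CO2 stored via 'eor' or 'saline'."""
    return tonnes_per_year * CREDIT_PER_TONNE[storage]


def investment_credit(eligible_capital: float) -> float:
    """One-time credit in dollars on eligible capital spending."""
    return eligible_capital * ITC_RATE


TONNES_PER_YEAR = 1.6e6  # Petra Nova's capture volume from Table 1, treated as metric tons

for storage in ("eor", "saline"):
    credit = annual_sequestration_credit(TONNES_PER_YEAR, storage)
    print(f"{storage}: ${credit / 1e6:.0f} million/yr")
print(f"ITC on $1 billion: ${investment_credit(1e9) / 1e6:.0f} million")
```

At that volume, the EOR credit would be worth about $16 million a year and the saline-storage credit about $80 million a year, a fivefold difference driven entirely by the type of storage site.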

Policy implications

Clearly, more DOE-funded R&D is needed to leverage additional private sector investment and demonstrate coal with CCS on a commercial scale using different technologies and at different sequestration sites, like deep saline aquifers. R&D efforts should also be expanded beyond coal to include natural gas and bioenergy power plants, as well as industrial facilities. We also need to develop a strong regulatory and oversight system to ensure that captured CO2 remains permanently sequestered.

Recent policy proposals to increase tax incentives for CCS could also help improve the economic viability of CCS. A price on carbon and higher oil prices for projects using EOR could also make a big difference, but the likelihood of either happening under the Trump Administration is slim. Given the high cost, long lead time, and limited near-term role of CCS as a climate solution, federal efforts should prioritize R&D and deployment incentives on more cost-effective low carbon alternatives like renewable energy and energy efficiency. While studies show coal with CCS could play a modest role in addressing climate change by 2050, it’s unlikely to be enough over the next four years to fulfill Trump’s promises to revive the coal industry.
