§3.1 Arithmetics and Error Measures

Contents

- §3.1(i) Floating-Point Arithmetic

- §3.1(ii) Interval Arithmetic

- §3.1(iii) Rational Arithmetics

- §3.1(iv) Level-Index Arithmetic

- §3.1(v) Error Measures

§3.1(i) Floating-Point Arithmetic

Computer arithmetic is described here for the binary system, with base 2; another frequently used system is the hexadecimal system, with base 16.

A nonzero normalized binary floating-point machine number $x$ is represented as

$$x = (-1)^{s}\,(b_0.b_1 b_2 \dots b_{p-1}) \times 2^{E}, \qquad b_0 = 1,$$

where $s$ is equal to 1 or 0, each $b_j$, $j \ge 1$, is either 0 or 1, $b_1$ is the most significant bit, $p$ ($\in \mathbb{N}$) is the number of significant bits $b_j$, $b_{p-1}$ is the least significant bit, $E$ is an integer called the exponent, $b_0.b_1 b_2 \dots b_{p-1}$ is the significand, and $f = .b_1 b_2 \dots b_{p-1}$ is the fractional part.
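The following minimal Python sketch splits a float into the pieces $s$, $E$, and $b_0, \dots, b_{p-1}$ of the representation above. It assumes the host `float` is IEEE 754 binary64, so $p = 53$. `decompose` and `recompose` are hypothetical helper names. `math.frexp` returns a significand in $[0.5, 1)$, so the sketch doubles it to obtain $b_0.b_1\dots b_{p-1}$ with $b_0 = 1$.

```python
import math


def decompose(x: float, p: int = 53) -> tuple[int, int, list[int]]:
    """Split a nonzero finite float into (s, E, [b_0, ..., b_{p-1}]).

    Assumes binary64 (p = 53). Subnormal inputs still decompose,
    but their E falls below the normalized exponent range.
    """
    if x == 0 or not math.isfinite(x):
        raise ValueError("x must be nonzero and finite")
    s = 1 if x < 0 else 0
    m, e = math.frexp(abs(x))             # |x| = m * 2**e with 0.5 <= m < 1
    significand, E = 2 * m, e - 1         # rescale so that 1 <= significand < 2, i.e. b_0 = 1
    k = int(significand * 2 ** (p - 1))   # the integer b_0 b_1 ... b_{p-1}; exact for p <= 53
    bits = [int(c) for c in format(k, f"0{p}b")]
    return s, E, bits


def recompose(s: int, E: int, bits: list[int]) -> float:
    """Rebuild (-1)**s * 2**E * sum_j b_j 2**(-j); every partial sum is exact in binary64."""
    return (-1) ** s * math.ldexp(sum(b * 2.0 ** -j for j, b in enumerate(bits)), E)


s, E, bits = decompose(-6.5)   # -6.5 = -(1.101)_2 * 2**2
print(s, E, bits[:4])          # 1 2 [1, 1, 0, 1]
```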

The set of machine numbers $\mathbb{R}_{\mathrm{fl}}$ is the union of 0 and the set

$$(-1)^{s}\, 2^{E} \sum_{j=0}^{p-1} b_j\, 2^{-j},$$

with $b_0 = 1$ and all allowable choices of $E$, $p$, $s$, and $b_j$.
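To see what this set looks like, the short Python sketch below enumerates every positive number of the form above for a hypothetical toy format. It uses $p = 3$ and restricts the exponent to $-1 \le E \le 1$. Both choices are illustrative assumptions. The full set adds 0 and the negatives, which are the mirror image. `machine_numbers` is a hypothetical helper. `fractions.Fraction` keeps every value exact.

```python
from fractions import Fraction


def machine_numbers(p: int, E_min: int, E_max: int) -> list[Fraction]:
    """All positive numbers 2**E * sum_{j=0}^{p-1} b_j 2**(-j) with b_0 = 1, in increasing order."""
    values = []
    for E in range(E_min, E_max + 1):
        for tail in range(2 ** (p - 1)):                    # tail encodes the bits b_1 ... b_{p-1}
            significand = 1 + Fraction(tail, 2 ** (p - 1))  # b_0 = 1
            values.append(significand * Fraction(2) ** E)
    return values


nums = machine_numbers(p=3, E_min=-1, E_max=1)
print([str(v) for v in nums])
# Spacing between neighbours doubles from one power of 2 to the next.
print(sorted({str(b - a) for a, b in zip(nums, nums[1:])}))
```

The printout shows that the machine numbers are not equally spaced. Within each interval $[2^E, 2^{E+1})$ there are $2^{p-1}$ of them, spaced $2^{E-p+1}$ apart.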

Let ![]() with

with ![]() and

and

![]() . For given values of

. For given values of ![]() ,

, ![]() , and

, and

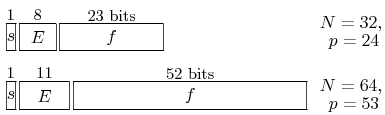

![]() , the format width in bits

, the format width in bits ![]() of a computer word is the total number

of bits:

the sign (one bit), the significant bits

of a computer word is the total number

of bits:

the sign (one bit), the significant bits ![]() (

(![]() bits), and the bits allocated to the exponent (the remaining

bits), and the bits allocated to the exponent (the remaining ![]() bits). The

integers

bits). The

integers ![]() ,

, ![]() , and

, and ![]() are characteristics of the

machine. The machine epsilon
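The bit budget $1 + (p-1) + (N-p) = N$ can be checked on an actual word. The Python sketch below assumes the host `float` is IEEE 754 binary64, with $N = 64$ and $p = 53$. It reinterprets the 8 bytes with the standard `struct` module and slices out the three fields. `fields` is a hypothetical helper. Binary64 stores the exponent with a bias of 1023, so $E$ = biased exponent − 1023 for normalized numbers.

```python
import struct
import sys


def fields(x: float) -> tuple[int, int, int]:
    """Split the 64-bit binary64 encoding of x into (sign, biased exponent, fraction) fields."""
    (word,) = struct.unpack(">Q", struct.pack(">d", x))  # reinterpret the 8 bytes as one unsigned integer
    sign = word >> 63                          # 1 bit
    biased_exp = (word >> 52) & 0x7FF          # N - p = 11 bits
    fraction = word & ((1 << 52) - 1)          # p - 1 = 52 bits: b_1 ... b_52 (b_0 = 1 is not stored)
    return sign, biased_exp, fraction


N, p = 64, 53
assert 1 + (p - 1) + (N - p) == N              # sign + significant bits + exponent bits

BIAS = 1023                                    # binary64 exponent bias
s, be, f = fields(-6.5)                        # -6.5 = -(1.101)_2 * 2**2
print(s, be - BIAS, bin(f)[:5])                # 1 2 0b101
print(fields(sys.float_info.max), fields(sys.float_info.min))
```

In binary64 the normalized numbers use the biased exponents 1 through 2046, which gives $E_{\min} = -1022$ and $E_{\max} = 1023$. The values 0 and 2047 are reserved for zeros and subnormals, and for infinities and NaNs.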

The machine epsilon $\epsilon_M$, that is, the distance between 1 and the next larger machine number with $E = 0$, is given by $\epsilon_M = 2^{-p+1}$. The machine precision is $\tfrac{1}{2}\epsilon_M = 2^{-p}$. The lower and upper bounds for the absolute values of the nonzero machine numbers are given by

$$N_{\min} \equiv 2^{E_{\min}} \le |x| \le 2^{E_{\max}+1}\left(1 - 2^{-p}\right) \equiv N_{\max}.$$

Underflow (overflow) after computing $x \ne 0$ occurs when $|x|$ is smaller (larger) than $N_{\min}$ ($N_{\max}$).
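The Python sketch below recovers $p$, $E_{\min}$, $E_{\max}$, $\epsilon_M$, $N_{\min}$, and $N_{\max}$ from the host's `float`. It assumes IEEE 754 binary64. `sys.float_info` follows the C convention, whose significand lies in $[0.5, 1)$, so its `min_exp` and `max_exp` are one larger than the $E_{\min}$ and $E_{\max}$ used here. `math.nextafter` requires Python 3.9 or later.

```python
import math
import sys

p = sys.float_info.mant_dig              # 53 significant bits b_0 ... b_52
E_min = sys.float_info.min_exp - 1       # C's DBL_MIN_EXP is offset by one from E_min
E_max = sys.float_info.max_exp - 1

eps_M = math.nextafter(1.0, 2.0) - 1.0   # distance from 1 to the next larger machine number
assert eps_M == 2.0 ** (-p + 1) == sys.float_info.epsilon
unit = eps_M / 2                          # machine precision 2**-p

N_min = math.ldexp(1.0, E_min)                   # 2**E_min
N_max = math.ldexp(1.0 - 2.0 ** -p, E_max + 1)   # 2**(E_max+1) * (1 - 2**-p)
assert N_min == sys.float_info.min and N_max == sys.float_info.max

print(1.0 + unit == 1.0, 1.0 + eps_M > 1.0)  # True True: half an epsilon is rounded away
print(N_max * 2.0)                            # inf: overflow (float multiplication returns inf, no exception)
print(N_min / 2.0 > 0.0)                      # True: a binary64 subnormal, below N_min
print(N_min * 2.0 ** -60)                     # 0.0: far below N_min, flushed to zero
```

Binary64 does not jump straight from $N_{\min}$ to zero. It first produces subnormal numbers, which lie outside the normalized model of this section. Under that model, any nonzero result with $|x| < N_{\min}$ counts as underflow.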

¶ IEEE Standard

¶ Rounding

Let $x$ be any positive number with

$$x = (1.b_1 b_2 \dots b_{p-1} b_p b_{p+1} \dots) \times 2^{E},$$

$N_{\min} \le x \le N_{\max}$, and

$$x_- = (1.b_1 b_2 \dots b_{p-1}) \times 2^{E}, \qquad x_+ = \left((1.b_1 b_2 \dots b_{p-1}) + \epsilon_M\right) \times 2^{E}.$$

Then rounding by chopping or rounding down of $x$ gives $x_-$, with maximum relative error $\epsilon_M$. Symmetric rounding or rounding to nearest of $x$ gives $x_-$ or $x_+$, whichever is nearer to $x$, with maximum relative error equal to the machine precision $\tfrac{1}{2}\epsilon_M = 2^{-p}$.

Negative numbers $x$ are rounded in the same way as $-x$.
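Both rounding rules can be sketched exactly in Python with rational arithmetic, for any precision $p$. The sketch handles positive $x$ only. A negative $x$ would be handled by rounding $-x$ and restoring the sign. The helper names are hypothetical. The text does not say how to break an exact tie between $x_-$ and $x_+$. The sketch assumes the IEEE 754 default, which picks the neighbour whose last bit is even.

```python
from fractions import Fraction


def exponent(x: Fraction) -> int:
    """Return E with 2**E <= x < 2**(E+1), for rational x > 0."""
    E = x.numerator.bit_length() - x.denominator.bit_length()  # within one of the answer
    while Fraction(2) ** E > x:
        E -= 1
    while Fraction(2) ** (E + 1) <= x:
        E += 1
    return E


def neighbours(x: Fraction, p: int) -> tuple[Fraction, Fraction, int]:
    """Return (x_minus, x_plus, k) for x > 0, where x_minus = k * 2**(E-p+1) keeps p significant bits."""
    E = exponent(x)
    ulp = Fraction(2) ** (E - p + 1)   # eps_M * 2**E, the weight of the last bit b_{p-1}
    k = x // ulp                        # integer 1 b_1 ... b_{p-1}; the bits b_p, b_{p+1}, ... are dropped
    return k * ulp, (k + 1) * ulp, k


def round_chop(x: Fraction, p: int) -> Fraction:
    """Rounding by chopping (rounding down) of a positive x: relative error < eps_M."""
    return neighbours(x, p)[0]


def round_nearest(x: Fraction, p: int) -> Fraction:
    """Rounding to nearest of a positive x: relative error <= eps_M / 2; exact ties go to even k."""
    lo, hi, k = neighbours(x, p)
    if x - lo != hi - x:
        return lo if x - lo < hi - x else hi
    return lo if k % 2 == 0 else hi


x = Fraction(1, 3)
print(round_chop(x, 4), round_nearest(x, 4))   # 5/16 11/32
```

With $p = 53$, `round_nearest(Fraction(1, 10), 53)` reproduces the binary64 value of `0.1`. Python's float literals are correctly rounded to nearest.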

§3.1(ii) Interval Arithmetic

Interval arithmetic is intended for bounding the total effect of rounding errors of calculations with machine numbers. With this arithmetic the computed result can be proved to lie in a certain interval, which leads to validated computing with guaranteed and rigorous inclusion regions for the results.

Let $\mathbb{I}$ be the set of closed intervals $\{[a, b]\}$. The elementary arithmetical operations on intervals are defined as follows:

$$I * J = \{\, x * y \mid x \in I,\ y \in J \,\},$$

where $* \in \{+, -, \cdot, /\}$, with appropriate roundings of the end points of $I * J$ when machine numbers are being used. Division is possible only if the divisor interval does not contain zero.
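Python has no portable way to switch the rounding mode. This minimal sketch therefore substitutes a simpler scheme for true directed rounding, as an assumption. It computes each endpoint with the default round-to-nearest and then steps one machine number outward with `math.nextafter` (Python 3.9+). A correctly rounded result lies no farther than half a spacing from the exact value, so the widened interval still contains the exact endpoint. The cost is an interval that is slightly wider than directed rounding would give. The `Interval` class is hypothetical.

```python
import math
from dataclasses import dataclass
from fractions import Fraction


def _down(x: float) -> float:
    """Next machine number toward -inf: a lower bound for a value that was rounded to nearest."""
    return math.nextafter(x, -math.inf)


def _up(x: float) -> float:
    """Next machine number toward +inf: an upper bound for a value that was rounded to nearest."""
    return math.nextafter(x, math.inf)


@dataclass(frozen=True)
class Interval:
    lo: float
    hi: float

    def __post_init__(self):
        if not self.lo <= self.hi:
            raise ValueError("need lo <= hi")

    def __add__(self, other: "Interval") -> "Interval":
        return Interval(_down(self.lo + other.lo), _up(self.hi + other.hi))

    def __sub__(self, other: "Interval") -> "Interval":
        return Interval(_down(self.lo - other.hi), _up(self.hi - other.lo))

    def __mul__(self, other: "Interval") -> "Interval":
        prods = [a * b for a in (self.lo, self.hi) for b in (other.lo, other.hi)]
        return Interval(_down(min(prods)), _up(max(prods)))

    def __truediv__(self, other: "Interval") -> "Interval":
        if other.lo <= 0.0 <= other.hi:
            raise ZeroDivisionError("divisor interval contains zero")
        quots = [a / b for a in (self.lo, self.hi) for b in (other.lo, other.hi)]
        return Interval(_down(min(quots)), _up(max(quots)))

    def __contains__(self, x) -> bool:
        return self.lo <= x <= self.hi   # exact comparison, also for Fraction values


s = Interval(0.1, 0.1) + Interval(0.2, 0.2)
print(s, Fraction(0.1) + Fraction(0.2) in s)   # the exact sum of the two machine numbers is enclosed
```

For `x = Interval(1.0, 2.0)`, the expression `x - x` gives roughly $[-1, 1]$ rather than $[0, 0]$. The definition $I * J = \{x * y\}$ treats the two operands as independent.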

§3.1(iii) Rational Arithmetics

Computer algebra systems use exact rational arithmetic with rational numbers $u/v$, where $u$ and $v$ are multi-length integers. During the calculations common divisors are removed from the rational numbers, and the final results can be converted to decimal representations of arbitrary length.

For further information see Matula and Kornerup (1980).

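Python's `fractions.Fraction` can stand in for this kind of arithmetic in a small sketch. Its numerator and denominator are arbitrary-length `int`s, and it is reduced to lowest terms after every operation. It is not a computer algebra system. `harmonic` and `to_decimal` are hypothetical helpers. The decimal conversion uses only integer arithmetic, so it can produce any number of digits.

```python
from fractions import Fraction


def harmonic(n: int) -> Fraction:
    """Exact H_n = 1 + 1/2 + ... + 1/n; Fraction keeps u/v in lowest terms after every step."""
    return sum((Fraction(1, k) for k in range(1, n + 1)), Fraction(0))


def to_decimal(q: Fraction, digits: int) -> str:
    """First `digits` (>= 1) decimals of q, truncated, using only integer arithmetic."""
    sign = "-" if q < 0 else ""
    u, v = abs(q.numerator), q.denominator
    whole, rest = divmod(u, v)
    frac = rest * 10 ** digits // v
    return f"{sign}{whole}.{frac:0{digits}d}"


print(Fraction(6, 8))                  # 3/4: the common divisor 2 is removed
print(harmonic(10))                    # 7381/2520
print(to_decimal(harmonic(10), 40))    # 40 exact decimals
print(sum([0.1] * 10) == 1.0, sum([Fraction(1, 10)] * 10) == 1)   # False True
```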

§3.1(iv) Level-Index Arithmetic

To eliminate overflow or underflow in finite-precision arithmetic, numbers are represented by using generalized logarithms $\ln_\ell(x)$ given by

$$\ln_0(x) = x, \qquad \ln_\ell(x) = \ln\bigl(\ln_{\ell-1}(x)\bigr), \quad \ell = 1, 2, \dots,$$

with $x \ge 0$ and $\ell$ the unique nonnegative integer such that $a \equiv \ln_\ell(x) \in [0, 1)$. In level-index arithmetic $x$ is represented by $\ell + a$ (or $-(\ell + a)$ for negative numbers).

Generalized precision can also be defined in this arithmetic; it includes absolute error and relative precision (§3.1(v)) as special cases.
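The following Python sketch converts a float to its level-index value $\ell + a$ and back. It applies $\ln$ until the value drops into $[0, 1)$, then exponentiates $\ell$ times to invert. The helper names are hypothetical. The sketch only illustrates the representation: it stores $\ell + a$ in an ordinary float, whereas a genuine level-index arithmetic would operate on $\ell + a$ directly without converting back.

```python
import math


def to_level_index(x: float) -> float:
    """Return l + a, with a = ln_l(|x|) in [0, 1), carrying the sign of x."""
    if not math.isfinite(x):
        raise ValueError("x must be finite")
    if x < 0:
        return -to_level_index(-x)
    level, a = 0, x                 # ln_0(x) = x
    while a >= 1.0:
        a = math.log(a)             # ln_l(x) = ln(ln_{l-1}(x))
        level += 1
    return level + a


def from_level_index(X: float) -> float:
    """Invert to_level_index by exponentiating a = X - l exactly l times."""
    if X < 0:
        return -from_level_index(-X)
    level = math.floor(X)
    x = X - level
    for _ in range(level):
        x = math.exp(x)             # raises OverflowError once the value leaves the float range
    return x


for x in (0.5, 20.0, 1e300):
    X = to_level_index(x)
    print(x, X, from_level_index(X))
```

Even a value near the largest binary64 number has level only 4. `from_level_index(5.0)` already raises `OverflowError`, so a level-index number with $\ell = 5$ lies far beyond $N_{\max}$.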

§3.1(v) Error Measures

If $x^*$ is an approximation to a real or complex number $x$, then the absolute error is

$$\epsilon_a = |x^* - x|.$$

If $x \ne 0$, the relative error is

$$\epsilon_r = \left|\frac{x^* - x}{x}\right| = \frac{\epsilon_a}{|x|}.$$

The relative precision is

$$\operatorname{rp}(x^*, x) = \left|\ln(x^*/x)\right|,$$

where $x x^* > 0$ for real variables, and $x x^* \ne 0$ for complex variables (with the principal value of the logarithm).

The mollified error is

$$\epsilon_m = \frac{|x^* - x|}{\max(|x|, 1)}.$$

For error measures for complex arithmetic see Olver (1983).
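The four measures translate directly into Python. In this minimal sketch the function names are hypothetical. Complex arguments use `cmath.log`, which returns the principal value. For small errors the relative precision and the relative error nearly agree. The mollified error reduces to the absolute error when $|x| \le 1$ and to the relative error when $|x| \ge 1$.

```python
import cmath
import math


def absolute_error(approx, exact):
    """eps_a = |x* - x| (real or complex)."""
    return abs(approx - exact)


def relative_error(approx, exact):
    """eps_r = |(x* - x) / x|, defined for x != 0."""
    if exact == 0:
        raise ZeroDivisionError("relative error needs x != 0")
    return abs((approx - exact) / exact)


def relative_precision(approx, exact):
    """rp(x*, x) = |ln(x*/x)|: needs x x* > 0 for reals, x x* != 0 for complex (principal log)."""
    if isinstance(approx, complex) or isinstance(exact, complex):
        if approx * exact == 0:
            raise ValueError("need x x* != 0")
        return abs(cmath.log(approx / exact))
    if not approx * exact > 0:
        raise ValueError("need x x* > 0 for real arguments")
    return abs(math.log(approx / exact))


def mollified_error(approx, exact):
    """eps_m = |x* - x| / max(|x|, 1)."""
    return abs(approx - exact) / max(abs(exact), 1)


for f in (absolute_error, relative_error, relative_precision, mollified_error):
    print(f.__name__, f(22 / 7, math.pi))
```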