Smoky Mountain Conference Gathers Global HPC Experts

Tags: HPC, Jaguar, Smoky Mountain Conference

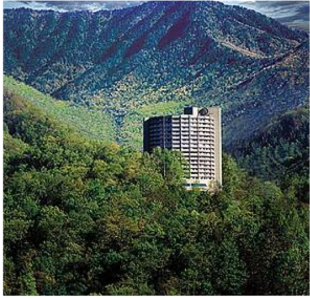

High-performance computing (HPC) experts from across the world gathered in Gatlinburg, Tennessee, September 5-7 to discuss the current state of supercomputing and the upcoming transition from petascale to exascale computing.

Simulation science for complex engineered systems was the theme of the three-day Smoky Mountain Computational Sciences and Engineering Conference. OLCF Project Director Buddy Bland, a member of the conference program committee, spoke on day one about the upcoming arrival of Titan, ORNL’s new 20-petaflop mega machine, and about the need for supercomputing.

“These are really the major systems in the world today,” Bland said. “We want to help focus the whole computational science community on what these big computers do and how they’re being used.”

Representatives from Lawrence Livermore National Laboratory in California spoke about that facility’s Sequoia, currently the world’s fastest supercomputer. Representatives from the Texas Advanced Computing Center, located at the University of Texas, expressed excitement about their soon-to-arrive Intel Xeon Phi-based supercomputer, Stampede. In addition, Satoshi Matsuoka from the Tokyo Institute of Technology revealed how the institute has been getting higher performance from its supercomputer TSUBAME 2.0 by incorporating graphics processing units into the architecture.

Day two saw speakers discussing a variety of simulation applications now in use. OLCF Director of Science Jack Wells started the day off leading a panel discussion about accelerated science and big data in contemporary HPC. Other topics included computerized simulations for seismology, drug discovery, and light-water reactors.

On the final day, industry experts Peter Ungaro, Alan Gara, David Turek, and William Harrod, from Cray, Intel, IBM, and the Department of Energy, respectively, discussed what has been working and what could work better going forward into the exascale era.

“Rarely do we get these many high-level people on the same stage at the same time,” said Bland.

Bland said exascale computing is essential for the United States to stay globally competitive. Jaguar, he pointed out, has already enabled major accomplishments in many fields of science.

The supercomputer has run climate simulations that project 100 to 200 years into the future, as well as simulations supporting development of the international ITER fusion energy project. Jaguar has also contributed to sustainability research through modeling and simulation of plant material that can be converted to biofuels.

With supercomputers continuing to drive scientific breakthroughs and exascale machines expected to follow, Bland says that it is, and will be, “a huge win for the US and for our economy.”

By Jeremy Rumsey