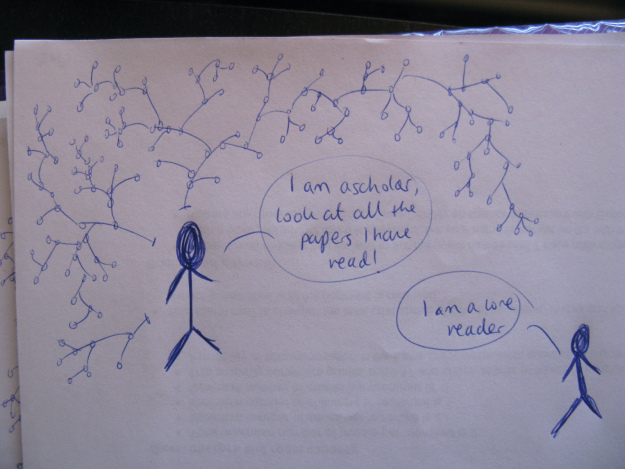

Picture: social researcher number one.

This is a drawing of a social researcher. I don’t mean a researcher who studies social relations. I mean this is a researcher who is social; one that connects to other people, very simply by citing other researchers.

(Yes, sociology-spotters, it’s ‘inspired’ by Bruno Latour. It’s a poor reinterpretation of an early diagram in Science in Action. I’m currently an ocean away from my desk, and don’t have the specific reference to hand).

A few months ago, I attended the launch of the Royal Society’s survey of the global scientific landscape, a report entitled Knowledge, Networks and Nations. Looking at all the Royal Society’s pretty pictures of international networks, I remember being struck by quite how much of a social enterprise science is, and that in many respects this is its great strength. The idea that science might be socially constructed is often taken as a criticism of science, an attempt at undermining it even. But it doesn’t have to be.

(This isn’t to deny science’s interaction with the natural world. Indeed, I’ve often thought many concerns over social constructivism are down to a confusion between science and nature. But that’s a larger philosophical debate/ bunfight, possibly also involving Latourian diagrams scrawled on bits of scrap paper).

I was reminded of this sense of the sociality of science during all the recent blather about ‘impact’. It is jargon, and rather ill-defined at that. As Richard Jones neatly put it, this thing called impact isn’t an actual thing at all, but rather a word that’s been adopted to stand for a number of overlapping imperatives. To put it as plainly as possible, publishing a research paper is only half the job (credit: I stole that line from David Dobbs). The government wants to make sure that the researchers they fund do a full job, even though they are aware that the other half of this work might take a range of forms, so they’re trying to find ways of measuring a thing called impact. This is hard. We could count citations in academic literature, or patent applications, or measure column inches of mass-media coverage. I suppose we could count mentions of Brian Cox on twitter too. I don’t think any of these are quite going to cut it, but that doesn’t mean we can’t be cleverer about how we do try to discern impact. As Steve Fuller recently argued, there is no reason why metrics have to be stupid. A recent AHRC report (pdf) covered many of these issues too.

Back to the social researcher thing. Here’s another picture. The first was supposed to show the social relations involved in making academic work. This one shows the social relations involved in sharing it too, and is as influenced by Lewenstein (via Gregory) as it is by Latour.

Picture: social researcher number two.

We might take the first diagram as a critique of the rhetoric of a scientific paper; a way of showing off expertise to keep others out. Equally though, it is simply representative of the ways in which people with academic training draw on a body of other people’s work and are helped in their thinking (and give credit with a traceable trail for that thinking). I’m an academic. I have read a lot and like to reference things. There are 533 sources cited in my PhD (I know because, after I submitted the thing, I ran a ‘guess the weight of my bibliography’ contest on my knitblog). I’ve also learned loads over the years from my students, friends, teachers, colleagues, family, ex-boyfriends, blog commentators, etc. I am a mass of other people’s ideas, even if I choose between them and add my own perceptions, misunderstandings and connections. It’s standing on the shoulders of giants stuff, or a matter of science as a team sport.

(The first of those analogies comes via a folk history of Newton, the latter one I’ve taken from Jack Stilgoe. Just as I’ve already drawn on Latour, Dobbs, Jones, Fuller, Gregory… see what I mean?).

This is really important when it comes to thinking about impact. James Sumner wrote a great post earlier this year where he stressed how much time he spent talking about other people’s research. Sumner meant this in terms of the specific issues of humanities academics doing public engagement, but I think it applies much more broadly. As Jack Stilgoe wrote earlier this week, innovation studies tell us that economic benefits come from networking, and policy making is similarly built on networks of trust.

So, when Stilgoe also says we need to rethink impact as ‘people, not papers’, I feel the same unease I have about calls to fund ‘people, not projects’: science is done by groups, not individuals. It’s the tomb of the unknown warrior, to steal another good line, this time from Martin Rees (see second quote here). I guess if we want some tidy alliteration, it’s about keeping our scientists social. Let’s explode the idea of impact, not just to think of it in more than an economic or academic sense, but as something accrued, done and most successfully achieved through networks. I don’t mean networks in the Machiavellian sense sometimes associated with Latour, but simply in terms of people helping each other out. I want to sit in the Royal Society looking at pretty pictures that show the networked journey of research, not just its networked production (or better, the ways networks of production and dissemination are and can be interlaced).

As ever, the comment thread is open for your thoughts. Or, if you’re London based and want to be sociable about the impact debate, do come along to our event at Imperial on the 5th.


Alice, thanks for this very thoughtful post, which, together with recent contributions from Jack Stilgoe, Richard Jones and others, highlights some unresolved questions in the way ‘impact’ will be used in the REF and elsewhere. You’re quite right to draw attention to the social, networked nature of many types of impact. And this fits of course with much recent analysis of how innovation occurs, from thinkers like Charlie Leadbeater, Clay Shirky, Steven Johnson and Henry Chesbrough.

Interpreted crudely, there is clearly a danger that the REF will obscure or downplay the social/networked dimensions of impact, in favour of a narrow, linear focus on the results of particular papers. But having read several of the impact case studies that HEFCE has published, I’m somewhat reassured. Within the examples they’ve published (see e.g. the social work or earth systems panels: http://www.hefce.ac.uk/research/ref/impact/) are some pretty sophisticated accounts of what impact is, and the myriad ways in which it can occur.

So I think there’s still a lot to play for over the next year to ensure that practical, worked-up examples of impact in all its diverse forms are documented and incorporated within the REF framework, and the remit of the individual panels. I hope the Royal Society can work with HEFCE and others to keep this debate going, and move beyond the sterile ‘impact: good or bad?’ debate that continues to occupy more airtime than it deserves.

I think you’re right on the point of the case studies. I think a lot of the negative reaction to this comes from the *idea* of impact metrics rather than how they are being worked out.
