HEFCE have announced they are arranging an independent review of the role of metrics in research assessment and management. The Impact blog welcomes this review and will look to encourage wider discussion and debate on how research is currently assessed and how it could be in years to come. Over the last two years we’ve featured a number of posts relating to alternative metrics (altmetrics), statistical methods for assessing research quality and the measurement of broader impacts of scholarly work. Sierra Williams has put together a reading list of our most popular pieces on the range of topics the review will investigate.

The Research Excellence Framework (REF) and alternative methods of research assessment and funding

Departmental H-Index is a more transparent, fair and cost-effective method for distributing funding to universities.

There is growing concern that the contentious journal impact factor is being used by universities as a proxy measure for research assessment. In light of this and the wider REF2014 exercise, Dorothy Bishop believes we need a better system for distributing funding to universities than the REF approach allows. A bibliometric measure such as a departmental H-index for ranking departments would be a better-suited and more practical solution.
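
To make the idea concrete, here is a minimal Python sketch of one way a departmental h-index could be computed: pool the papers of all staff in a department, count each paper once, and find the largest h such that h papers have at least h citations each. Deduplicating by a paper identifier, the function names and the citation counts below are illustrative assumptions, not details of Bishop's proposal.

```python
from __future__ import annotations

from typing import Iterable


def h_index(citations: Iterable[int]) -> int:
    """Largest h such that at least h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # counts only decrease from here, so no later paper qualifies
    return h


def departmental_h_index(staff_papers: dict[str, list[tuple[str, int]]]) -> int:
    """Pool every staff member's (paper_id, citations) pairs into one set.

    Assumption: papers are keyed by a hypothetical identifier (e.g. a DOI),
    so a paper co-authored by two colleagues is counted only once.
    """
    unique: dict[str, int] = {}
    for papers in staff_papers.values():
        for paper_id, cites in papers:
            unique[paper_id] = cites
    return h_index(unique.values())


# Hypothetical department of two researchers who share paper "p2".
staff = {
    "alice": [("p1", 10), ("p2", 4), ("p3", 1)],
    "bob": [("p2", 4), ("p4", 7), ("p5", 3)],
}
print(departmental_h_index(staff))  # 3
```

Because co-authored papers are counted once, the departmental figure reflects the unit's combined output rather than the sum of individual scores.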

Put your money where your citations are: a proposal for a new funding system

What would happen if researchers were given more control over their own funding and the funding of others? Hadas Shema looks at the results from an article that makes the case for a collective approach to the allocation of science funding. By funding people directly rather than projects, the approach would save money and time and give researchers more freedom than they have under the current system.
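
The sketch below is a toy simulation of one possible collective scheme, assuming that each researcher receives an equal base grant every year and must pass on a fixed fraction of everything they receive to peers of their choosing. The rules, parameter values and names are hypothetical illustrations, not figures or code from the article Shema discusses.

```python
from __future__ import annotations


def simulate_collective_allocation(
    preferences: dict[str, dict[str, float]],
    base_grant: float,
    give_fraction: float,
    years: int,
) -> dict[str, float]:
    """Return the total funding each researcher keeps over `years`.

    Hypothetical rules: each year a researcher receives `base_grant` plus
    whatever peers donated to them the previous year, keeps
    (1 - give_fraction) of it, and splits the rest among peers in proportion
    to the weights in `preferences`. Donations made in the final year are
    not counted as kept funding.
    """
    names = list(preferences)
    donations_in = {n: 0.0 for n in names}
    kept = {n: 0.0 for n in names}
    for _ in range(years):
        received = {n: base_grant + donations_in[n] for n in names}
        donations_in = {n: 0.0 for n in names}
        for giver, weights in preferences.items():
            if giver in weights:
                raise ValueError(f"{giver} cannot donate to themselves")
            to_give = give_fraction * received[giver]
            kept[giver] += received[giver] - to_give
            total_weight = sum(weights.values())
            for recipient, weight in weights.items():
                donations_in[recipient] += to_give * weight / total_weight
    return kept


# Researchers "a" and "b" both direct their donations to "c".
prefs = {"a": {"c": 1}, "b": {"c": 1}, "c": {"a": 1, "b": 1}}
print(simulate_collective_allocation(prefs, base_grant=100, give_fraction=0.5, years=2))
# {'a': 112.5, 'b': 112.5, 'c': 150.0}
```

In this toy run, the researcher whom peers choose to support ends up with more funding, which is the kind of peer-driven allocation the collective approach relies on.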

The apparatus of research assessment is driven by the academic publishing industry and has become entirely self-serving

Peer review may be favoured as the best measure of scientific assessment ahead of the REF, but can it be properly implemented? Peter Coles does the maths on what the Physics panel face and finds there simply won’t be enough time to do what the REF administrators claim. Rather, closed-access bibliometrics will have to be substituted at the expense of legitimate assessment of outputs.

Moneyball for Academics: network analysis methods for predicting the future success of papers and researchers.

Drawing from a combination of network analysis measurements, Erik Brynjolfsson and Shachar Reichman present methods from their research on predicting the future success of researchers. The overall vision for this project is to create an academic dashboard that will include a suite of measures and prediction methods that could supplement the current subjective tools used in decision-making processes in academia.
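
The specific measures Brynjolfsson and Reichman use are not described here, so the following is only a generic illustration of one standard network-analysis measure, PageRank, computed by power iteration on a small hypothetical citation graph. An edge from X to Y means "paper X cites paper Y". This is not the authors' method.

```python
from __future__ import annotations


def pagerank(graph: dict[str, list[str]], damping: float = 0.85, iters: int = 100) -> dict[str, float]:
    """Power-iteration PageRank; rank flows from citing to cited papers."""
    nodes = set(graph)
    for targets in graph.values():
        nodes.update(targets)
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(iters):
        # Every node gets the "random jump" share.
        new = {v: (1.0 - damping) / n for v in nodes}
        # Papers that cite nothing spread their rank evenly over all nodes.
        dangling = sum(rank[v] for v in nodes if not graph.get(v))
        for v in nodes:
            new[v] += damping * dangling / n
        # Each citing paper splits its rank among the papers it cites.
        for src, targets in graph.items():
            if targets:
                share = damping * rank[src] / len(targets)
                for dst in targets:
                    new[dst] += share
        rank = new
    return rank


# Hypothetical graph: A, B and C all cite D; C also cites A.
cites = {"A": ["D"], "B": ["D"], "C": ["D", "A"]}
scores = pagerank(cites)
print(max(scores, key=scores.get))  # D
```

Unlike a raw citation count, PageRank weights a citation by the standing of the citing paper, which is one reason network measures are attractive as predictors.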

Will the REF disadvantage interdisciplinary research? The inadvertent effects of journal rankings

A failure to engage in interdisciplinary work risks creating intellectual inbreeding and could push research away from socially complex issues. Ismael Rafols asks why there is a bias against interdisciplinary research, and why the REF will work to suppress an otherwise useful body of research.

How useful are metrics in determining research impact?

Impact factors declared unfit for duty

Last week the San Francisco Declaration on Research Assessment was published. This document aims to address the research community’s problems with evaluating individual outputs, a welcome announcement for those concerned with the misuse of journal impact factors. Stephen Curry commends the Declaration’s recommendations, but also highlights some remaining difficulties in refusing to participate in an institutional culture still beholden to the impact factor.

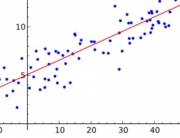

Twitter and traditional bibliometrics are separate but complementary aspects of research impact.

In a recent study, Haustein and colleagues found a weak correlation between the number of times a paper is tweeted about and subsequent citations. But the study also found papers from 2012 were tweeted about ten times more than papers from 2010. Emily Darling discusses the results and finds that while altmetrics may do a poor job of predicting the traditional success of scholarly articles, it is becoming increasingly apparent that social media can contribute to both scientific and social outcomes.
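
A rank correlation such as Spearman's rho is one common way to measure how strongly tweet counts and citation counts move together; it is not necessarily the exact procedure of the Haustein study. The minimal sketch below computes it from scratch, giving tied values their average rank, and uses made-up counts purely for illustration.

```python
import math


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; undefined (ZeroDivisionError) if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman's rho is the Pearson correlation of the two rank vectors."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical counts for six papers (not data from the study).
tweets = [0, 3, 1, 12, 0, 5]
citations = [4, 2, 9, 6, 1, 3]
print(round(spearman(tweets, citations), 3))
```

A value near 0 indicates a weak association and a value near 1 a strong positive one; working on ranks makes the measure less sensitive to a handful of very heavily tweeted papers.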

Four reasons to stop caring so much about the h-index.

The h-index attempts to measure the productivity and impact of a scholar’s published work. But reducing scholarly work to a single number in this way has significant limitations. Stacy Konkiel highlights four specific reasons the h-index fails to capture a complete picture of research impact. Furthermore, a variety of new altmetrics tools now aim to measure the influence of all of a researcher’s outputs, not just their papers.
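
The short sketch below illustrates one general property of the h-index: it discards most information about how citations are distributed, and it can never exceed the number of papers. It is not a reproduction of Konkiel's four reasons, and the citation records are hypothetical.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    # In descending order, "cites >= rank" holds for a prefix, so counting works.
    return sum(1 for rank, cites in enumerate(ranked, start=1) if cites >= rank)


steady = [5, 5, 5, 5, 5]          # 25 citations in total
landmark = [900, 6, 5, 5, 5]      # 921 citations, one highly cited paper
early_career = [1000, 1000]       # two very highly cited papers

print(h_index(steady), h_index(landmark), h_index(early_career))  # 5 5 2
```

The first two records receive the same score despite very different citation totals, and the early-career record is capped at 2 however often its papers are cited.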

The research impact agenda must translate measurement into learning

Funders and the wider research community must avoid the temptation to reduce impact to just things that can be measured, says Liz Allen of the Wellcome Trust. Measurement should not be for measuring’s sake; it must be about contributing to learning. Qualitative descriptors of progress and impact alongside quantitative measurements are essential in order to evaluate whether the research is actually making a difference.

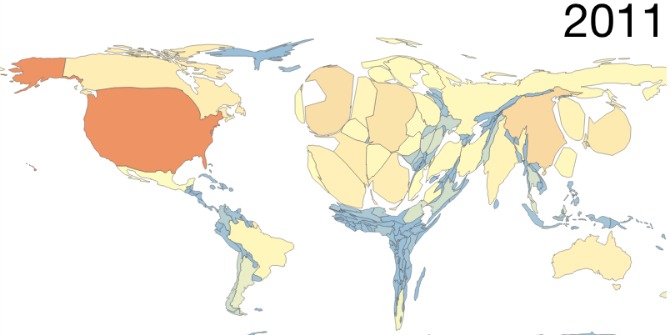

Altmetrics could enable scholarship from developing countries to receive due recognition.

The Web of Science and its corresponding Journal Impact Factor are inadequate for an understanding of the impact of scholarly work from developing regions, argues Juan Pablo Alperin. Alternative metrics offer the opportunity to redirect incentive structures towards problems that contribute to development, or at least to local priorities. But the altmetrics community needs to actively engage with scholars from developing regions to ensure the new metrics do not continue to cater to well-known and well-established networks.

The academic quantified self: the role of data in building an academic professional sense of self.

With a vast array of performance and output measurements readily available on universities and individual academics, Deborah Lupton explores the parallels between the audit culture in academia and the quantified self movement. Quantified selfers can find great satisfaction in using data to take control over elements of their lives. But it is important for researchers to remain critically alert to both the pleasures and the privations of academic self-quantification.

Bibliometrics, Altmetrics, and Beyond: Tools for academics and universities to monitor their own impact.

Universities can improve academic services through wider recognition of altmetrics and alt-products.

As altmetrics gain traction across the scholarly community, publishers and academic institutions are seeking to develop standards to encourage wider adoption. Carly Strasser provides an overview of why altmetrics are here to stay and how universities might begin to incorporate altmetrics into their own services. While this process might take some time, institutions can begin by encouraging their researchers to recognize the importance of all of their scholarly work (datasets, software, etc.).

The launch of ImpactStory: using altmetrics to tell data-driven stories

By providing real-time information, altmetrics are shifting how research impact is understood. Jason Priem and Heather Piwowar outline the launch of ImpactStory, a new webapp aiming to provide a broader picture of impact to help scholars understand more about the audience and reach of their research.

Why every researcher should sign up for their ORCID ID

The Open Researcher and Contributor Identifier, or ORCID, is a non-profit effort providing digital identifiers to the research community to ensure correct authorship data is available and more transparent. Brian Kelly welcomes the widespread adoption of the unique ORCID ID, arguing that it should be a particular priority for researchers whose position in their host institution is uncertain: which is to say, everyone.
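
Part of what makes an ORCID iD reliable as an identifier is its final character, a check digit computed with the ISO/IEC 7064 MOD 11-2 algorithm, which catches most typing errors. The sketch below shows that calculation; the function names are illustrative, and 0000-0002-1825-0097 is the sample iD used in ORCID's own documentation.

```python
def orcid_check_digit(base_digits: str) -> str:
    """ISO/IEC 7064 MOD 11-2 check character for the first 15 digits."""
    total = 0
    for ch in base_digits:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    return "X" if result == 10 else str(result)


def is_valid_orcid(orcid: str) -> bool:
    """Check the length, the digits and the final check character."""
    digits = orcid.replace("-", "")
    if len(digits) != 16 or not all(c in "0123456789" for c in digits[:15]):
        return False
    return orcid_check_digit(digits[:15]) == digits[15].upper()


print(is_valid_orcid("0000-0002-1825-0097"))  # True
print(is_valid_orcid("0000-0002-1825-0098"))  # False
```

A check character of 10 is written as "X", so some valid iDs end in a letter rather than a digit.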

How to use Harzing’s ‘Publish or Perish’ software to assess citations – a step-by-step guide

In his recent blog post on the need for a digital census of academic research, Patrick Dunleavy argued that the ‘Publish or Perish’ software, developed by Professor Anne-Wil Harzing of Melbourne University and based on Google Scholar data, could provide an exceptionally easy way for academics to record details of their publications and citation instances. An academic with a reasonably distinctive name should be able to compile this report in less than half an hour. Here we present a simple ‘how-to’ guide to using the software.

The new metrics cannot be ignored – we need to implement centralised impact management systems to understand what these numbers mean

By using the social web to convey both scholarly and public attention to research outputs, altmetrics offer a much richer picture than traditional metrics based solely on data from citation databases. Pat Loria compares the new metrics services and argues that, as more systems incorporate altmetrics into their platforms, institutions will benefit from creating an impact management system to interpret these metrics, pulling in information from research managers, ICT and systems staff, and those creating the research impact.

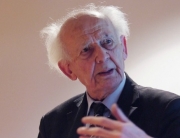

Further Information on the Independent review of the role of metrics in research assessment:

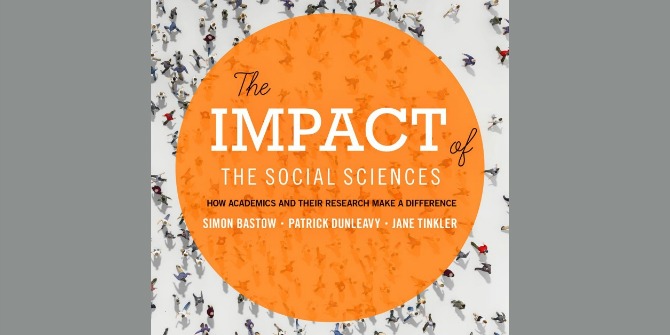

James Wilsdon, Professor of Science and Democracy at the Science Policy Research Unit (SPRU), University of Sussex, and Chair of the Campaign for Social Science, will chair the review’s steering group, which is made up of the UK’s leading experts on metrics, review and scientific assessment. Jane Tinkler from the LSE, co-author of The Impact of the Social Sciences: How Academics and Their Research Make a Difference, is on the steering group along with many other Impact blog contributors. For further information about the review, contact Alex Herbert or Kate Turton (metrics@hefce.ac.uk).
