Overview: Nature's peer review trial

Nature (2006) | doi:10.1038/nature05535

Despite enthusiasm for the concept, open peer review was not widely popular, either among authors or among scientists invited to comment.

December 2006

On 1 June this year, Nature launched a trial of open peer review. The intention was to explore the interest of researchers in a particular model of open peer review, whether as authors or as reviewers. It was also intended to provide Nature's editors and publishers with a test of the practicalities of a potential extension to the traditional procedures of peer review.

Several times during the exercise, researchers and journalists asked us whether the trial reflected a sense of dissatisfaction or concern about our long-standing procedure. On the contrary, we believe that this process works as well as any system of peer review can. Furthermore, in our occasional surveys of authors we receive strong signals of satisfaction: in the most recent survey, 74% agreed with the statement that their paper had been improved by the process, 20% felt neutral, while 6% disagreed.

Nevertheless, peer review is never perfect and we need to keep it under scrutiny as community expectations and new opportunities evolve. In particular, we felt that it was time to explore a more participative approach.

The process

Nature receives approximately 10,000 papers every year and our editors reject about 60% of them without review. (Since the journal's launch in 1869, Nature's editors have been the only arbiters of what it publishes.) The papers that survive beyond that initial threshold of editorial interest are submitted to our traditional process of assessment, in which two or more referees chosen by the editors are asked to comment anonymously and confidentially. Editors then consider the comments and proceed with rejection, encouragement or acceptance. In the end we publish about 7% of our submissions.
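As a rough illustration of the funnel described above, the quoted percentages can be turned into approximate paper counts. This is a minimal sketch: the variable names are illustrative, the inputs are the rounded figures from the text, and it assumes the 60% and 7% rates both apply to the same annual pool of about 10,000 submissions.

```python
# Rounded figures quoted in the text ("approximately", "about").
submissions_per_year = 10_000
desk_reject_pct = 60   # rejected by editors without review
published_pct = 7      # share of all submissions eventually published

# Integer arithmetic keeps the counts exact for these round inputs.
sent_for_review = submissions_per_year * (100 - desk_reject_pct) // 100
published = submissions_per_year * published_pct // 100

# Derived figure (not stated in the text): share of reviewed papers published.
publish_rate_after_review = published / sent_for_review

print(f"Sent for review: about {sent_for_review}")
print(f"Published: about {published}")
print(f"Published as a share of reviewed papers: about {publish_rate_after_review:.1%}")
```

Under these assumptions, roughly 4,000 papers a year reach referees, which is in line with the 1,369 papers sent for review during the four-month trial.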

A survey of authors conducted ahead of the open-peer-review trial indicated a sufficient level of interest to justify it. Accordingly, between 1 June and 30 September 2006, we invited authors of newly submitted papers that survived the initial editorial assessment to have them hosted on an open server on the Internet for public comment. For those who agreed, we simultaneously subjected their papers to standard peer review. We checked all comments received for open display for potential legal problems or inappropriate language, and in the event none was held back. All comments were required to be signed. Once the standard process was complete (that is, once all solicited referees' comments had been received), we also gathered the comments received on the server, and removed the paper.

At the start of the trial and several times throughout, we sent e-mail alerts to all registrants on nature.com and to any interested readers, who could sign up to receive regular updates. On several occasions, editors contacted groups of scientists in a particular discipline who they thought might be interested in reviewing or commenting on specific papers. The trial was constantly highlighted on the Nature website's home page. Thus substantial efforts were made to bring it to the attention of potential contributors to the open review process.

Following this four-month period of the trial, during October, we ran several surveys to collect feedback as the final papers went through the combination of open and solicited peer review.

Outcomes

We sent out a total of 1,369 papers for review during the trial period. The authors of 71 (or 5%) of these agreed to their papers being displayed for open comment. Of the displayed papers, 33 received no comments, while 38 (54%) received a total of 92 technical comments. Of these comments, 49 were made on just 8 papers; the remaining 43 comments were spread evenly across the other 30 papers. The most commented-on paper received 10 comments (an evolution paper about post-mating sexual selection). There is no obvious time bias: the papers receiving most comments were evenly spread throughout the trial, and recent papers did not show any waning of interest.
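The headline proportions in this paragraph follow directly from the raw counts. The sketch below recomputes them; the variable names are illustrative, and the per-paper averages are derived here rather than stated in the report.

```python
# Counts reported for the trial period.
papers_sent_for_review = 1369
papers_posted = 71
papers_with_comments = 38
total_comments = 92
comments_on_top_papers = 49
top_papers = 8

# Proportions quoted in the text (rounded there to 5% and 54%).
participation_rate = papers_posted / papers_sent_for_review
commented_share = papers_with_comments / papers_posted

# Counts implied by subtraction.
papers_without_comments = papers_posted - papers_with_comments
remaining_papers = papers_with_comments - top_papers
remaining_comments = total_comments - comments_on_top_papers

# Derived averages (not stated in the report) showing how concentrated comments were.
avg_on_top_papers = comments_on_top_papers / top_papers
avg_on_remaining = remaining_comments / remaining_papers

print(f"Participation: {participation_rate:.1%}")
print(f"Posted papers with comments: {commented_share:.1%}")
print(f"Papers without comments: {papers_without_comments}")
print(f"Average comments, top {top_papers} papers: {avg_on_top_papers:.2f}")
print(f"Average comments, other {remaining_papers} papers: {avg_on_remaining:.2f}")
```

The comparison of the two averages makes the skew explicit: a handful of papers attracted most of the discussion, while the rest received only one or two comments each.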

The trial received a healthy volume of online traffic: an average of 5,600 html page views per week and about the same for RSS feeds. However, this reader interest did not convert into significant numbers of comments.

Distribution by subject area

We categorized papers within 15 subject areas. The numbers of papers on the open server in each category were small. Thus any extrapolation from these numbers is uncertain.

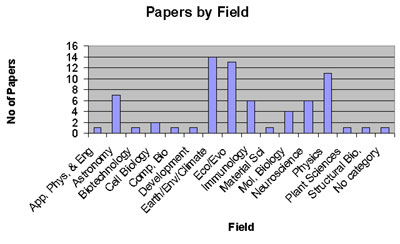

The distribution of papers posted is shown in Figure 1. Most were from the fields of Earth/environment/climate science and ecology/evolution, with 14 and 13 papers, respectively, closely followed by physics with 11. Astronomy, immunology and neuroscience made up the bulk of the rest.

Figure 1: Papers by field

As predicted, there were fewer papers in most cellular and molecular fields, although arguably those that were open received as many comments as those in other disciplines. No papers were posted in biochemistry, chemical biology, chemistry, genetics/genomics, medical research, microbiology, palaeontology or zoology.

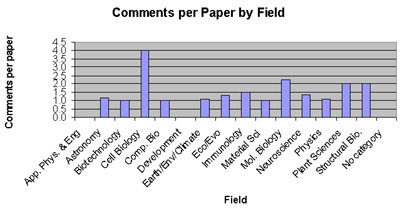

Figure 2 shows the distribution of average number of comments received per paper. Ten subject areas received an average of more than one comment per paper: astronomy, cell biology, Earth/environment/climate, ecology/evolution, immunology, molecular biology, neuroscience, physics, plant sciences and structural biology. All of these fields featured one or two heavily commented-on papers. But it should once again be borne in mind that the absolute numbers of papers are small.

Figure 2: Comments per paper by field

Editorial feedback

Each comment received was rated by the handling editor at the time of each paper's decision, according to the following scale:

1. Actively unhelpful

2. Reasonable comments, but no useful information

3. Valid minor points and/or details

4. Major points in line with solicited reviewers' comments

5. Directly influenced publication over and above reviewers' comments

Each comment was given two such ratings: one for technical value and one for editorial value (that is, for comments regarding context and significance).

No editor judged the comments on their paper to be more than 4 in either regard, and only four comments received 4. Average scores were: editorial 2.6; technical 1.8. In other words, generally the comments were judged to be more valuable editorially than technically; this is partly due to several papers receiving comments only on editorial points. No editor reported that the comments influenced their decision on publication.

For a qualitative assessment, editors discussed the outcomes and reported the following views:

- Key contacts in their fields showed a general sense of indifference to the trial, and it was like 'pulling teeth' to obtain any comments.

- Direct attempts to solicit comments met with very limited success.

- Biologist editors in particular were not surprised that authors in very competitive areas did not wish to be involved.

- Anecdotally, some authors were reluctant to take part because of fear of being scooped and concerns about patent applications.

- Anecdotally, potential commenters felt that open peer review is 'nice to do' but did not want to provide any feedback on the papers on the server.

- Editors felt that most of the comments provided were of limited use for decision-making. Most were general comments, such as "nice work", rather than adding to the review process.

Author survey

All authors who participated in the trial were sent a survey. Sixty-four people were contacted and there were 27 responses (a 42% response rate).

- 20 respondents thought it was an interesting experiment.

- Of the 14 respondents who received open comments, four described them as 'not useful', six as 'somewhat useful', and four as 'very useful'.

- Although most respondents received no additional comments (such as by e-mail or phone) as a result of taking part in the trial, those who did (five people) found them either 'useful' (four) or 'very useful' (one).

- Some authors expressed concern about possible scooping and others were disappointed that they didn't receive more comments.

- Of the 27 respondents, 11 expressed a preference for open peer review.
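The survey figures above can be checked with a few lines of arithmetic. This is a minimal sketch using only the counts reported in the list; the variable names are illustrative, and the percentages other than the 42% response rate are derived here rather than stated in the report.

```python
# Counts reported in the author survey.
contacted = 64
respondents = 27
found_interesting = 20
prefer_open_review = 11

# Ratings from the 14 respondents who received open comments.
open_comment_ratings = {"not useful": 4, "somewhat useful": 6, "very useful": 4}

response_rate = respondents / contacted
received_open_comments = sum(open_comment_ratings.values())

print(f"Response rate: {response_rate:.0%}")
print(f"Respondents who received open comments: {received_open_comments}")
print(f"Found the experiment interesting: {found_interesting / respondents:.0%}")
print(f"Prefer open peer review: {prefer_open_review / respondents:.0%}")
```

Because only 27 authors responded, each respondent accounts for nearly four percentage points, so these shares should be read as indicative only.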

Conclusions

Despite the significant interest in the trial, only a small proportion of authors opted to participate. There was a significant level of expressed interest in open peer review among those authors who opted to post their manuscripts openly and who responded after the event, in contrast to the views of the editors. A small majority of those authors who did participate received comments, but typically very few, despite significant web traffic. Most comments were not technically substantive. Feedback suggests that there is a marked reluctance among researchers to offer open comments.

Nature and its publishers will continue to explore participative uses of the web. But for now at least, we will not implement open peer review.

This report is discussed in an Editorial in Nature 444, 971, 21/28 December 2006.
