Chapter 5: Test Methods

The accredited test lab must design and perform procedures to test a voting system against the requirements outlined in Part 1. Test procedures must be designed and performed that address:

- Overall system capabilities;

- Pre-voting functions;

- Voting functions;

- Post-voting functions;

- System maintenance; and

- Transportation and storage.

The specific procedures to be used must be identified in the test plan prepared by the accredited test lab (see Part 2: Chapter 5: “Test Plan (test lab)”). These procedures must not rely on manufacturer testing as a substitute for independent testing.

5.1 Hardware

5.1.1 Electromagnetic compatibility (EMC) immunity

Testing of voting systems for EMC immunity will be conducted using the black-box testing approach, which “ignores the internal mechanism of a system or component and focuses solely on the outputs generated in response to selected inputs and execution conditions” (from [IEEE00]). It will be necessary to subject voting systems to a regimen of tests covering most, if not all, of the disturbances that might be expected to impinge on the system, as recited in the requirements of Part 1.

Note: Some EMC immunity requirements have been established by Federal Regulations or for compliance with authorities having jurisdiction as a condition for offering equipment to the US market. In such cases, part of the requirements includes affixing a label or notice stating that the equipment complies with the technical requirements; therefore, the VVSG does not suggest performing a redundant test.

5.1.1.1 Steady-state conditions

Testing laboratories that perform conformity assessments can be expected to have readily available a 120 V power supply from an energy service provider and access to a landline telephone service provider that will enable them to simulate the environment of a typical polling place.

5.1.1.2 Conducted disturbances immunity

Immunity to conducted disturbances will be demonstrated by appropriate industry-recognized tests and criteria for the ports involved in the operation of the voting system.

Adequacy of the product is demonstrated by satisfying specific “pass criteria” as the outcome of the tests; these criteria include not producing a failure in the functions, firmware, or hardware.

The test procedure, test equipment, and test sequences will be based on benchmark tests and on observation of the voltage and current waveforms during the tests. Where relevant, this observation includes detecting a “walking wounded” condition: damage from a severe but not immediately lethal stress that would produce a hardware failure some time later.

Testing SHALL be conducted in accordance with the power port stress testing specified in IEEE Std C62.41.2™-2002 [IEEE02a] and IEEE Std C62.45™-2002 [IEEE02b].

Applies to: Electronic device

DISCUSSION

Both the IEEE and the IEC have developed test protocols for immunity of equipment power ports. In the case of a voting system intended for application in the United States, test equipment tailored to perform tests according to these two IEEE standards is readily available in test laboratories, thus facilitating the process of compliance testing.

Source: New requirement

Testing SHALL be conducted in accordance with the power port stress of “Category B” to be applied by a Combination Waveform generator, in the powered mode, between line and neutral as well as between line and equipment grounding conductor.

Applies to: Electronic device

DISCUSSION

To satisfy this requirement, it is recommended that voting systems be capable of withstanding a 1.2/50 – 8/20 Combination Wave of 6 kV open-circuit voltage, 3 kA short-circuit current, with the following application points:

- Three surges, positive polarity at the positive peak of the line voltage, line to neutral;

- Three surges, negative polarity at the negative peak of the line voltage, line to neutral;

- Three surges, positive polarity at the positive peak of the line voltage, line to equipment grounding conductor; and

- Three surges, negative polarity at the negative peak of the line voltage, line to equipment grounding conductor.

The requirement of three successive pulses is based on the need to monitor any possible change in the equipment response caused by the application of the surges.

Source: [IEEE02a] Table 3

Testing SHALL be conducted in accordance with the power port stress of “Category B” to be applied by a “Ring Wave” generator, in the powered mode, between line and neutral, between line and equipment grounding conductor, and between neutral and equipment grounding conductor, at the levels shown below.

Applies to: Electronic device

DISCUSSION

Two different levels are recommended:

- 6 kV open-circuit voltage per Table 2 of [IEEE02a], applied as follows:

  - Three surges, positive polarity at the positive peak of the line voltage, line to neutral;

  - Three surges, negative polarity at the negative peak of the line voltage, line to neutral;

  - Three surges, positive polarity at the positive peak of the line voltage, line to equipment grounding conductor; and

  - Three surges, negative polarity at the negative peak of the line voltage, line to equipment grounding conductor.

- 3 kV open-circuit voltage per Table 5 of [IEEE02a], applied as follows:

  - Three surges, positive polarity at the positive peak of the line voltage, neutral to equipment grounding conductor; and

  - Three surges, negative polarity at the negative peak of the line voltage, neutral to equipment grounding conductor.
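The application points listed for the Category B Combination Wave and Ring Wave tests can be tabulated as a test schedule. The following Python sketch is illustrative only: the `SurgeApplication` record and the function names are hypothetical, it assumes the first Combination Wave application point is line to neutral (as in the parallel lists), and it models only the sequence of applications, not control of a surge generator.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class SurgeApplication:
    """One application point (hypothetical record type, for illustration only)."""
    waveform: str    # "Combination Wave" or "Ring Wave"
    peak_kv: float   # open-circuit voltage setting, kV
    polarity: str    # applied at the line-voltage peak of the same polarity
    coupling: str    # terminals between which the surge is applied
    count: int = 3   # three successive surges per application point


def application_points(waveform, peak_kv, couplings):
    """Both polarities for every coupling, three surges each."""
    return [
        SurgeApplication(waveform, peak_kv, polarity, coupling)
        for coupling, polarity in product(couplings, ("positive", "negative"))
    ]


LINE_COUPLINGS = ["line to neutral", "line to equipment grounding conductor"]


def category_b_schedule():
    """Combination Wave at 6 kV, Ring Wave at 6 kV and at 3 kV, as listed in the text."""
    return (
        application_points("Combination Wave", 6.0, LINE_COUPLINGS)
        + application_points("Ring Wave", 6.0, LINE_COUPLINGS)
        + application_points("Ring Wave", 3.0, ["neutral to equipment grounding conductor"])
    )


if __name__ == "__main__":
    schedule = category_b_schedule()
    for s in schedule:
        print(f"{s.waveform:<16} {s.peak_kv:>4.1f} kV  {s.count} x {s.polarity:<8} {s.coupling}")
    print("total surges:", sum(s.count for s in schedule))
```

Run as written, the sketch lists ten application points totalling 30 surges.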

Source: [IEEE02a] Table 2 and Table 5

Testing SHALL be conducted in accordance with the recommendations of IEEE Std C62.41.2™-2002 [IEEE02a] and IEEE Std C62.45™-2002 [IEEE02b].

Applies to: Electronic device

DISCUSSION

Unlike the preceding two tests that are deemed to represent possibly destructive surges, the Electrical Fast Transient (EFT) Burst has been developed to demonstrate equipment immunity to non-destructive but highly disruptive events. Repetitive bursts of unidirectional 5/50 ns pulses lasting 15 ms and with 300 ms separation are coupled into terminals of the voting system by coupling capacitors for the power port and by the coupling clamp for the telephone connection cables.
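The burst timing quoted above fixes the duty cycle and the number of bursts per minute. The number of pulses in each burst also depends on the pulse repetition frequency, which the text does not state; the sketch below assumes 5 kHz, a repetition frequency commonly used with IEC 61000-4-4 generators. The constant and function names are illustrative.

```python
# Burst parameters quoted in the text.
BURST_DURATION_S = 15e-3  # each burst lasts 15 ms
BURST_PERIOD_S = 300e-3   # bursts are separated by 300 ms
# Assumption: 5 kHz pulse repetition frequency within a burst (not stated in the text).
REPETITION_HZ = 5_000


def pulses_per_burst(duration_s=BURST_DURATION_S, rep_hz=REPETITION_HZ):
    """Number of 5/50 ns pulses delivered in one burst."""
    return round(duration_s * rep_hz)


def bursts_per_minute(period_s=BURST_PERIOD_S):
    """How many bursts are coupled into the port each minute."""
    return round(60 / period_s)


def burst_duty_cycle(duration_s=BURST_DURATION_S, period_s=BURST_PERIOD_S):
    """Fraction of time during which pulses are being injected."""
    return duration_s / period_s


if __name__ == "__main__":
    print(pulses_per_burst(), bursts_per_minute())  # 75 200
    print(f"duty cycle: {burst_duty_cycle():.0%}")
```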

Testing SHALL be conducted by applying gradual steps of overvoltage across the line and neutral terminals of the voting system unit.

Applies to: Electronic device

DISCUSSION

Testing for sag immunity within the context of EMC is not necessary in view of Requirement Part 1: 6.3.4.3-A.4, which requires that the voting system be provided with a two-hour back-up capability (to be verified by inspection). Testing for swells and permanent overvoltage conditions is necessary to ensure immunity to swells (no loss of data) and to permanent overvoltages (no overheating or operation of a protective fuse).

A) Short-duration Swells

As indicated by the ITI Curve [ITIC00], it is necessary to ensure that voting systems are not disturbed by a temporary overvoltage of 120 % of normal line voltage lasting from 3 ms to 0.5 s. (Shorter durations fall within the definition of “surge.”)

B) Permanent Overvoltage

As indicated by the ITI Curve [ITIC00], it is necessary to ensure that voting systems are neither disturbed by, nor overheat under, a permanent overvoltage of 110 % of the nominal 120 V rating of the voting system.
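Applied to the nominal 120 V rating, the ITI Curve limits above correspond to 144 V for short-duration swells and 132 V for a permanent overvoltage. The sketch below computes these levels and, for the stepped overvoltage test, generates a ramp of test voltages. The 2 % step size and the function names are assumptions for illustration, since the requirement calls only for “gradual steps.”

```python
NOMINAL_V = 120  # nominal rating of the voting system, volts


def percent_of_nominal(percent, nominal_v=NOMINAL_V):
    """Voltage corresponding to a percentage of the nominal rating."""
    return nominal_v * percent / 100


SWELL_V = percent_of_nominal(120)                  # short-duration swell level
PERMANENT_OVERVOLTAGE_V = percent_of_nominal(110)  # permanent overvoltage level


def is_swell_duration(duration_s):
    """True inside the 3 ms to 0.5 s swell window; shorter events count as surges."""
    return 0.003 <= duration_s <= 0.5


def overvoltage_steps(final_percent=110, step_percent=2):
    """Gradual ramp above nominal up to the permanent level.

    The default 2 % step is an assumption, not a value from the text.
    """
    return [
        percent_of_nominal(p)
        for p in range(100 + step_percent, final_percent + 1, step_percent)
    ]


if __name__ == "__main__":
    print(SWELL_V, PERMANENT_OVERVOLTAGE_V)  # 144.0 132.0
    print(overvoltage_steps())
```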

Source: New requirement

Testing SHALL be conducted in accordance with the telephone port stress testing specified in industry-recognized standards developed for telecommunications in general, particularly equipment connected to landline telephone service providers.

Applies to: Electronic device

DISCUSSION

Voting systems, by being connected to the outside service provider via premises wiring, can be exposed to a variety of electromagnetic disturbances. These have been classified as emissions from adjacent equipment, lightning-induced surges, power-fault-induced events, power contact, Electrical Fast Transient (EFT) bursts, and steady-state induced voltage.

Source: New requirement

Testing SHALL be conducted in accordance with the emissions limits stipulated for other equipment of the voting system connected to the premises wiring of the polling place.

Applies to: Electronic device

DISCUSSION

Emission limits for the power port of voting systems are discussed in Requirement Part 1: 6.3.4.2-B.1 with reference to numerical values stipulated in [Telcordia06]. EMC of a complete voting system installed in a polling facility thus implies that individual components of voting systems must demonstrate immunity against disturbances at a level equal to the limits stipulated for emissions of adjacent pieces of equipment.

Source: [Telcordia06] subclause 3.2.3

Testing SHALL be conducted in accordance with the requirements of Telcordia GR-1089 [Telcordia06] for simulation of lightning.

Applies to: Electronic device

DISCUSSION

Telcordia GR-1089 [Telcordia06] lists two types of tests, the First-Level Lightning Surge Test and the Second-Level Lightning Surge Test, as follows:

A) First-Level Lightning Surge Test

The voting system equipment under test (generally referred to as the “EUT”) is placed in a complete operating system performing its intended functions while its proper operation is monitored, with checks performed before and after the surge sequence. Manual intervention or power cycling is not permitted before verifying proper operation of the voting system.

B) Second-Level Lightning Surge Test

The second-level lightning surge test is performed as a fire hazard indicator, with cheesecloth applied to the EUT.

This second-level test, which can be destructive, may be performed with the EUT operating at a sub-assembly level equivalent to the standard system configuration, by providing dummy loads or associated equipment equivalent to what would be found in the complete voting system, as assembled in the polling place.

Source: [Telcordia06] subclauses 4.6.7 and 4.6.8

Testing SHALL be conducted in accordance with the requirements of Telcordia GR-1089 [Telcordia06] for simulation of power-fault-induced events.

Applies to: Electronic device

DISCUSSION

Tests that can be used to assess the immunity of voting systems to power-fault-induced disturbances are described in detail in [Telcordia06] for several scenarios and types of equipment, each involving a specific configuration of the test generator, test circuit, and connection of the equipment.

Source: [Telcordia06] subclause 4.6

Testing SHALL be conducted in accordance with the requirements of Telcordia GR-1089 [Telcordia06] for simulation of power-contact events.

Applies to: Electronic device

DISCUSSION

Tests for power contact (sometimes called “power cross”) immunity of voting systems are described in detail in [Telcordia06] for several scenarios and types of equipment, each involving a specific configuration of the test generator, test circuit, and connection of the equipment.

Source: [Telcordia06] subclause 4.6

Testing SHALL be conducted in accordance with the requirements of Telcordia GR-1089 [Telcordia06] for application of the EFT Burst.

Applies to: Electronic device

DISCUSSION

Telcordia GR-1089 [Telcordia06] calls for performing EFT tests but refers to [ISO04b] for details of the procedure. EFT generators built per the IEC standard [ISO04b] can inject the EFT burst into a power port by means of coupling capacitors; the other method described by the IEC standard, the so-called “capacitive coupling clamp,” would normally be the recommended method for coupling the burst into leads connected to the telephone port of the voting system under test. However, because these leads (the premises subscriber wiring) vary from polling place to polling place, direct injection at the telephone port via the coupling capacitors provides a more repeatable test.

Source: [ISO04b] clause 6

Testing SHALL be conducted in accordance with the requirements of Telcordia GR-1089 [Telcordia06] for simulation of steady-state induced voltages.

Applies to: Electronic device

DISCUSSION

Telcordia GR-1089 [Telcordia06] describes two categories of tests, depending on the length of loops, the criterion being a loop length of 20 kft (sic). For metric system units, that criterion may be considered to be 6 km, a distance that can be exceeded for some low-density rural or suburban locations of a polling place. Therefore, the test circuit to be used should be the one applying the highest level of induced voltage.
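The metric figure above follows from the definition of the international foot (0.3048 m), so 20 kft is about 6.1 km. The sketch below performs the conversion and encodes the recommendation to use the test circuit with the highest induced voltage. The function names are hypothetical, and the circuit names and levels in the usage example are placeholders, not values from [Telcordia06].

```python
FOOT_M = 0.3048  # international foot in metres (exact by definition)


def kft_to_km(kft):
    """Convert thousands of feet to kilometres (1 kft = 0.3048 km)."""
    return kft * FOOT_M


def choose_test_circuit(induced_voltage_by_circuit):
    """Return the circuit that applies the highest induced voltage."""
    return max(induced_voltage_by_circuit, key=induced_voltage_by_circuit.get)


if __name__ == "__main__":
    print(f"20 kft = {kft_to_km(20):.3f} km")  # 20 kft = 6.096 km
    # Placeholder relative levels, for illustration only.
    circuits = {"short-loop circuit": 1.0, "long-loop circuit": 2.0}
    print(choose_test_circuit(circuits))
```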

Source: [Telcordia06] sub-clause 5.2

Inherent immunity against data corruption and hardware damage caused by interaction between the power port and the telephone port SHALL be demonstrated by applying a 0.5 µs – 100 kHz Ring wave between the power port and the telephone port.

Applies to: Electronic device

DISCUSSION

Although IEEE is developing a standard (IEEE PC62.50) to address the interaction between the power port and the communications port, no standard has been promulgated as of this writing. However, published papers in peer-reviewed literature [Key94] suggest that a representative surge can be the Ring Wave of [IEEE02a], applied between the equipment grounding conductor terminal of the voting system component under test and each of the tip and ring terminals of the voting system components intended to be connected to the telephone network.

Inherent immunity of the voting system might have been achieved by the manufacturer, as suggested in PC62.50, by providing a surge-protective device between these terminals that will act as a temporary bond during the surge, a function which can be verified by monitoring the voltage between the terminals when the surge is applied.

The IEEE project is IEEE PC62.50 "Draft Standard for Performance Criteria and Test Methods for Plug-in, Portable, Multiservice (Multiport) Surge Protective Devices for Equipment Connected to a 120/240 V Single Phase Power Service and Metallic Conductive Communication Line(s)." This is an unapproved standard, with estimated approval date 2008.

Source: New requirement

5.1.1.3 Radiated disturbances immunity

Testing SHALL be conducted according to procedures in CISPR 24 [ANSI97], and either IEC 61000-4-3 [ISO06a] or IEC 61000-4-21:2003 [ISO06d].

Applies to: Electronic device

DISCUSSION

IEC 61000-4-3 [ISO06a] specifies using an absorber-lined shielded room (fully or semi-anechoic chamber) to expose the device under test. An alternative is the immunity test procedure of IEC 61000-4-21 [ISO06d], performed in a reverberating shielded room (radio-frequency reverberation chamber).

Testing for electromagnetic fields below 80 MHz SHALL be conducted according to procedures defined in IEC 61000-4-6 [ISO06b].

Testing SHALL be conducted in accordance with the recommendations of ANSI Std C63.16 [ANSI93], applying an air discharge or a contact discharge according to the nature of the enclosure of the voting system.

Applies to: Electronic device

DISCUSSION

Electrostatic discharges, simulated by a portable ESD simulator, involve an air discharge that can upset the logic operations of the circuits, depending on their status. In the case of a conducting enclosure, the resulting discharge current flowing in the enclosure can couple with the circuits and also upset the logic operations. Therefore, it is necessary to apply a sufficient number of discharges to significantly increase the probability that the circuits will be exposed to the interference at the time of the most critical transition of the logic. This condition can be satisfied by using a simulator with repetitive discharge capability while a test operator interacts with the voting terminal, mimicking the actions of a voter or initiating a data transfer from the terminal to the local tabulator.
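The argument for applying many discharges can be quantified under a simple model, which is an assumption of this sketch rather than part of [ANSI93]: if each discharge occurs at an independent random moment and the critical logic transition occupies a fraction f of the operating time, then N discharges hit it at least once with probability 1 - (1 - f)^N. The function names are hypothetical.

```python
import math


def hit_probability(window_fraction, n):
    """Probability that at least one of n independent discharges lands in the window."""
    return 1 - (1 - window_fraction) ** n


def discharges_needed(window_fraction, target_probability):
    """Smallest n with hit_probability(window_fraction, n) >= target_probability.

    Assumes independent, uniformly timed discharges (a modelling assumption).
    """
    if not (0 < window_fraction < 1 and 0 < target_probability < 1):
        raise ValueError("fractions must be strictly between 0 and 1")
    return math.ceil(math.log(1 - target_probability) / math.log(1 - window_fraction))


if __name__ == "__main__":
    print(discharges_needed(0.01, 0.95))  # 299
```

For example, if the critical transition occupies 1 % of the time, about 299 discharges are needed to reach a 95 % chance of exposing it, which is why a simulator with repetitive discharge capability is useful.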

5.1.2 Electromagnetic compatibility (EMC) emissions limits

Testing of voting systems for EMC emission limits will be conducted using the black-box testing approach, which “ignores the internal mechanism of a system or component and focuses solely on the outputs generated in response to selected inputs and execution conditions” [IEEE00].

It will be necessary to subject voting systems to a regimen of tests to demonstrate compliance with emission limits. The tests should include most, if not all, disturbances that might be expected to be emitted from the implementation under test, unless compliance with mandatory limits such as FCC regulations is explicitly stated for the implementation under test.

5.1.2.1 Conducted emissions limits

5.1.2.1.1 Power port – low/high frequency ranges

As discussed in Part 1: 6.3.5 “Electromagnetic Compatibility (EMC) emission limits”, the low-frequency harmonic emissions of a voting system are small compared with the current drawn by other loads in the polling place, so the resulting percentage of harmonics at the point of common connection will be negligible, as discussed in [IEEE92]. Thus, no test is required to assess the harmonic emission of a voting station.

High-frequency emission limits have been established by Federal Regulations [FCC07] as a condition for offering equipment to the US market. In such cases, part of the requirements includes affixing a label or notice stating that the equipment complies with the stipulated limits. Therefore, the VVSG does not suggest performing a redundant test.

5.1.2.1.2 Communications (Telephone) port

Unintended conducted emissions from the analog voice-band leads of a voting system telephone port SHALL be tested against both the metallic and the longitudinal voltage limits.

Applies to: Voting system

DISCUSSION

Telcordia GR-1089 [Telcordia06] stipulates limits for both the common mode (longitudinal) and differential mode (metallic) over a frequency range defined by maximum voltage and terminating impedances.

Source: [Telcordia06] subclause 3.2.3

5.1.2.2 Radiated emissions

Compliance with emission limits SHALL be documented on the hardware in accordance with the stipulations of FCC Part 15, Class B [FCC07].

Applies to: Voting system

Source: [FCC07]

5.1.3 Other (non-EMC) industry-mandated requirements

5.1.3.1 Dielectric stresses

Testing SHALL be conducted in accordance with the stipulations of industry-consensus telephone requirements of Telcordia GR-1089 [Telcordia06].

Applies to: Voting system

Source: [Telcordia06] Section 4.9.5

5.1.3.2 Leakage via grounding port

Simple verification of an acceptable low leakage current SHALL be performed by powering the voting system under test via a listed Ground-Fault Circuit Interrupter (GFCI) and noting that no tripping of the GFCI occurs when the voting system is turned on.

Applies to: Voting system

Source: New requirement

5.1.3.3 Safety

The presence of a listing label (required by authorities having jurisdiction) referring to a safety standard, such as [UL05], makes repeating the test regimen unnecessary. Details on the safety considerations are addressed in Part 1: 3.2.8.2 “Safety”.

5.1.3.4 Label of compliance

Some industry-mandated requirements require demonstration of compliance, while for others the manufacturer affixes a label of compliance, which makes repeating the tests unnecessary and economically unjustifiable.

5.1.4 Non-operating environmental testing

This type of testing is designed to assess the robustness of voting systems during storage between elections and during transport between the storage facility and the polling place.

Such testing is intended to simulate exposure to physical shock and vibration associated with handling and transportation of voting systems between a jurisdiction's storage facility and polling places. The testing additionally simulates the temperature and humidity conditions that may be encountered during storage in an uncontrolled warehouse environment or precinct environment. The procedures and conditions of this testing correspond to those of MIL-STD-810D, "Environmental Test Methods and Engineering Guidelines."

All voting systems SHALL be tested in accordance with the appropriate procedures of MIL-STD-810D, “Environmental Test Methods and Engineering Guidelines” [MIL83].

Applies to: Voting system

Source: [VVSG2005]

All voting systems SHALL be tested in accordance with MIL-STD-810D, Method 516.3, Procedure VI.

Applies to: Voting system

DISCUSSION

This test simulates stresses faced during maintenance and repair.

Source: [VVSG2005]

All voting systems SHALL be tested in accordance with MIL-STD-810D, Method 514.3, Category 1 – Basic Transportation, Common Carrier.

Applies to: Voting system

DISCUSSION

This test simulates stresses faced during transport between storage locations and polling places.

Source: [VVSG2005]

All voting systems SHALL be tested in accordance with MIL-STD-810D: Method 502.2, Procedure I – Storage and Method 501.2, Procedure I – Storage. The minimum temperature SHALL be -4 degrees F, and the maximum temperature SHALL be 140 degrees F.

Applies to: Voting system

DISCUSSION

This test simulates stresses faced during storage.
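For laboratories working in metric units, the storage limits in the requirement above are -20 °C and 60 °C. The sketch below simply applies the standard Fahrenheit-to-Celsius formula; the names are illustrative.

```python
STORAGE_MIN_F = -4   # minimum storage temperature from the requirement, °F
STORAGE_MAX_F = 140  # maximum storage temperature from the requirement, °F


def fahrenheit_to_celsius(deg_f):
    """Standard conversion: C = (F - 32) * 5 / 9."""
    return (deg_f - 32) * 5 / 9


if __name__ == "__main__":
    print(fahrenheit_to_celsius(STORAGE_MIN_F), fahrenheit_to_celsius(STORAGE_MAX_F))  # -20.0 60.0
```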

Source: [VVSG2005]

All voting systems SHALL be tested in accordance with humidity testing specified by MIL-STD-810D: Method 507.2, Procedure II – Natural (Hot-Humid), with test conditions that simulate a storage environment.

Applies to: Voting system

DISCUSSION

This test is intended to evaluate the ability of voting equipment to survive exposure to an uncontrolled temperature and humidity environment during storage.

Source: [VVSG2005]

5.1.5 Operating environmental testing

This type of testing is designed to assess the robustness of voting systems during operation.

All voting systems SHALL be tested in accordance with the appropriate procedures of MIL-STD-810D, “Environmental Test Methods and Engineering Guidelines” [MIL83].

Applies to: Voting system

Source: [VVSG2005]

All voting systems SHALL be tested according to the low temperature and high temperature testing specified by MIL-STD-810D [MIL83]: Method 502.2, Procedure II – Operation and Method 501.2, Procedure II – Operation, with test conditions that simulate system operation.

Applies to: Voting system

Source: [VVSG2005]

All voting systems SHALL be tested according to the humidity testing specified by MIL-STD-810D: Method 507.2, Procedure II – Natural (Hot-Humid), with test conditions that simulate system operation.

Applies to: Voting system

Source: New requirement

5.2 Functional Testing

Functional testing is performed to confirm the functional capabilities of a voting system. The accredited test lab designs and performs procedures to test a voting system against the requirements outlined in Part 1. Additions or variations in testing may be appropriate depending on the system's use of specific technologies and configurations, the system capabilities, and the outcomes of previous testing.

Functional tests cover the full range of system operations. They include tests of fully integrated system components, internal and external system interfaces, usability and accessibility, and security. During this process, election management functions, ballot-counting logic, and system capacity are exercised.

The accredited test lab tests the interface of all system modules and subsystems with each other against the manufacturer's specifications. For systems that use telecommunications capabilities, components that are located at the poll site or separate vote counting site are tested for effective interface, accurate vote transmission, failure detection, and failure recovery. For voting systems that use telecommunications lines or networks that are not under the control of the manufacturer (e.g., public telephone networks), the accredited test lab tests the interface of manufacturer-supplied components with these external components for effective interface, vote transmission, failure detection, and failure recovery.

The security tests focus on the ability of the system to detect, prevent, log, and recover from a broad range of security risks. The range of risks tested is determined by the design of the system and potential exposure to risk. Regardless of system design and risk profile, all systems are tested for effective access control and physical data security. For systems that use public telecommunications networks to transmit election management data or election results (such as ballots or tabulated results), security tests are conducted to ensure that the system provides the necessary identity-proofing, confidentiality, and integrity of transmitted data. The tests determine if the system is capable of detecting, logging, preventing, and recovering from types of attacks known at the time the system is submitted for qualification. The accredited test lab may meet these testing requirements by confirming the proper implementation of proven commercial security software.

5.2.1 General guidelines

5.2.1.1 General test template

Most tests will follow this general template. Different tests will elaborate on the general template in different ways, depending on what is being tested.

- Establish initial state (clean out data from previous tests, verify resident software/firmware);

- Program election and prepare ballots and/or ballot styles;

- Generate pre-election audit reports;

- Configure voting devices;

- Run system readiness tests;

- Generate system readiness audit reports;

- Precinct count only:

  - Open poll;

  - Run precinct count test ballots; and

  - Close poll.

- Run central count test ballots (central count / absentee ballots only);

- Generate in-process audit reports;

- Generate data reports for the specified reporting contexts;

- Inspect ballot counters; and

- Inspect reports.
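The template can be read as an ordered procedure whose middle steps depend on the counting mode. The Python sketch below is one illustrative encoding; the function name and the two boolean flags are assumptions, and a system supporting both precinct and central count would run both branches.

```python
def build_test_steps(precinct_count, central_count):
    """Ordered steps of the general test template (hypothetical encoding)."""
    steps = [
        "Establish initial state",  # clean out old data, verify resident software/firmware
        "Program election and prepare ballots and/or ballot styles",
        "Generate pre-election audit reports",
        "Configure voting devices",
        "Run system readiness tests",
        "Generate system readiness audit reports",
    ]
    if precinct_count:
        steps += ["Open poll", "Run precinct count test ballots", "Close poll"]
    if central_count:  # central count / absentee ballots only
        steps.append("Run central count test ballots")
    steps += [
        "Generate in-process audit reports",
        "Generate data reports for the specified reporting contexts",
        "Inspect ballot counters",
        "Inspect reports",
    ]
    return steps


if __name__ == "__main__":
    for step in build_test_steps(precinct_count=True, central_count=False):
        print("-", step)
```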

5.2.1.2 General pass criteria

The test lab need only consider tests that apply to the classes specified in the implementation statement, including those tests that are designated for all systems. The test verdict for all other tests SHALL be Not Applicable.

Applies to: Voting system

If the documented assumptions for a given test are not met, the test verdict SHALL be Waived and the test SHALL NOT be executed.

Applies to: Voting system

If the test lab is unable to execute a given test because the system does not support functionality that is required per the implementation statement or is required for all systems, the test verdict SHALL be Fail.

Applies to: Voting system

A demonstrable violation of any applicable requirement of the VVSG during the execution of any test SHALL result in a test verdict of Fail.

Applies to: Voting system

DISCUSSION

The nonconformities observed during a particular test do not necessarily relate to the purpose of that test. This requirement clarifies that a nonconformity is a nonconformity, regardless of whether it relates to the test purpose.

See Part 3: 2.5.5 “Test practices” for directions on termination, suspension, and resumption of testing following a verdict of Fail.
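The verdict rules above can be combined into a single decision function. This is a sketch under assumptions: the text does not state an order of precedence, so the sketch checks applicability first, then documented assumptions, then support for required functionality, then observed violations. It also assumes that a test clearing all of these checks receives a verdict of Pass. The names are hypothetical.

```python
from enum import Enum


class Verdict(Enum):
    PASS = "Pass"
    FAIL = "Fail"
    WAIVED = "Waived"
    NOT_APPLICABLE = "Not Applicable"


def decide_verdict(applies, assumptions_met, functionality_supported, violations):
    """Apply the general pass criteria in an assumed order of precedence.

    applies: the test covers a class in the implementation statement or all systems
    assumptions_met: the test's documented assumptions hold
    functionality_supported: the system supports the functionality the test requires
    violations: count of demonstrable VVSG violations observed during execution
    """
    if not applies:
        return Verdict.NOT_APPLICABLE
    if not assumptions_met:
        return Verdict.WAIVED  # the test is not executed
    if not functionality_supported:
        return Verdict.FAIL
    if violations > 0:
        return Verdict.FAIL  # any violation fails, even if unrelated to the test purpose
    return Verdict.PASS


if __name__ == "__main__":
    print(decide_verdict(True, True, True, 0).value)  # Pass
```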

5.2.2 Structural coverage (white-box testing)

This section specifies requirements for "white-box" (glass-box, clear-box) testing of voting system logic.

For voting systems that reuse components or subsystems from previously tested systems, the test lab may, per Requirement Part 2: 5.1-D, find it unnecessary to repeat instruction, branch, and interface testing on the previously tested, unmodified components. However, the test lab must fully test all new or modified components and perform whatever regression testing is necessary to ensure that the complete system remains compliant.

The test lab SHALL execute tests that provide coverage of every accessible instruction and branch outcome in application logic and border logic.

Applies to: Voting system

DISCUSSION

This is not exhaustive path testing, but testing of paths sufficient to cover every instruction and every branch outcome.

Full coverage of third-party logic is not mandated because it might include a large amount of code that is never used by the voting application. Nevertheless, the relevant portions of third-party logic should be tested diligently.

There should be no inaccessible code in application logic and border logic other than defensive code (including exception handlers) that is provided to defend against the occurrence of failures and "can't happen" conditions that cannot be reproduced and should not be reproducible by a test lab.
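The difference between branch coverage and exhaustive path testing can be seen on a toy function with two independent decisions. The sketch below is hypothetical: `classify` stands in for application logic, and coverage is recorded by hand rather than by a coverage tool. Two test cases reach every instruction and both outcomes of each branch, while exhaustive path testing would need all four combinations.

```python
from itertools import product


def classify(marked, overvoted, covered):
    """Toy logic with two independent decisions; each branch outcome records itself."""
    if marked:
        covered.add("marked:true")
        label = "marked"
    else:
        covered.add("marked:false")
        label = "blank"
    if overvoted:
        covered.add("overvoted:true")
        label += "/overvote"
    else:
        covered.add("overvoted:false")
    return label


ALL_OUTCOMES = {"marked:true", "marked:false", "overvoted:true", "overvoted:false"}


def branch_coverage(cases):
    """Run the cases and return the set of branch outcomes they exercised."""
    covered = set()
    for marked, overvoted in cases:
        classify(marked, overvoted, covered)
    return covered


# Two cases give full instruction and branch-outcome coverage ...
branch_suite = [(True, True), (False, False)]
# ... whereas exhaustive path testing needs every combination of decisions.
all_paths = list(product((True, False), repeat=2))

if __name__ == "__main__":
    print(branch_coverage(branch_suite) == ALL_OUTCOMES, len(all_paths))  # True 4
```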

Source: Clarification of [VSS2002]/[VVSG2005] II.6.2.1 and II.A.4.3.3

The test lab SHALL execute tests that test the interfaces of all application logic and border logic modules and subsystems, and all third-party logic modules and subsystems that are in any way used by application logic or border logic.

The test lab SHALL define pass criteria using the VVSG (for standard functionality) and the manufacturer-supplied system documentation (for implementation-specific functionality) to determine acceptable ranges of performance.

Applies to: Voting system

DISCUSSION

Because white-box tests are designed based on the implementation details of the voting system, there can be no canonical test suite. Pass criteria must always be determined by the test lab based on the available specifications.

Since the nature of the requirements specified by the manufacturer-supplied system documentation is unknown, conformity for implementation-specific functionality may be subject to interpretation. Nevertheless, egregious disagreements between the behavior of the system and the behavior specified by the manufacturer should lead to a defensible adverse finding.

Source: [VSS2002]/[VVSG2005] II.A.4.3.3

5.2.3 Functional coverage (black-box testing)

All voting system logic, including any embedded in COTS components, is subject to functional testing.

For voting systems that reuse components or subsystems from previously tested systems, the test lab may, per Requirement Part 2: 5.1-D, find it unnecessary to repeat functional testing on the previously tested, unmodified components. However, the test lab must fully test all new or modified components and perform whatever regression testing is necessary to ensure that the complete system remains compliant.

The test lab SHALL execute test cases that provide coverage of every applicable, mandatory ("SHALL"), functional requirement of the VVSG.

Applies to: Voting system

DISCUSSION

Depending upon the design and intended use of the voting system, all or part of the functions listed below must be tested:

- Ballot preparation subsystem;

- Test operations performed prior to, during, and after processing of ballots, including:

  - Logic tests to verify interpretation of ballot styles, and recognition of precincts to be processed;

  - Accuracy tests to verify ballot reading accuracy;

  - Status tests to verify equipment status and memory contents;

  - Report generation to produce test output data; and

  - Report generation to produce audit data records.

- Procedures applicable to equipment used in the polling place for:

  - Opening the polls and enabling the acceptance of ballots;

  - Maintaining a count of processed ballots;

  - Monitoring equipment status;

  - Verifying equipment response to operator input commands;

  - Generating real-time audit messages;

  - Closing the polls and disabling the acceptance of ballots;

  - Generating election data reports;

  - Transfer of ballot counting equipment, or a detachable memory module, to a central counting location; and

  - Electronic transmission of election data to a central counting location.

- Procedures applicable to equipment used in a central counting place:

  - Initiating the processing of a ballot deck, programmable memory device, or other applicable media for one or more precincts;

  - Monitoring equipment status;

  - Verifying equipment response to operator input commands;

  - Verifying interaction with peripheral equipment, or other data processing systems;

  - Generating real-time audit messages;

  - Generating precinct-level election data reports;

  - Generating summary election data reports;

  - Transfer of a detachable memory module to other processing equipment;

  - Electronic transmission of data to other processing equipment; and

  - Producing output data for interrogation by external display devices.

- Security controls, verifying that they have been implemented, are free of obvious errors, and are operating as described in the security documentation:

  - Cryptography;

  - Access control;

  - Setup inspection;

  - Software installation;

  - Physical security;

  - System integrity management;

  - Communications;

  - Audit, electronic, and paper records; and

  - System event logging.

This requirement is derived from [VSS2002]/[VVSG2005] II.A.4.3.4, "Software Functional Test Case Design," in lieu of a canonical functional test suite. Once a complete, canonical test suite is available, executing that test suite will satisfy this requirement. For reproducibility, use of a canonical test suite is preferable to the development of custom test suites.

In those few cases where requirements specify "fail safe" behaviors in response to rare occurrences and failures that a test lab cannot, and should not be able to, reproduce, the requirement is considered covered if the test campaign concludes with no occurrences of an event to which the requirement would apply. However, if a triggering event does occur, the test lab must assess conformity to the requirement based on the behaviors observed.

The test lab SHALL execute tests to verify that the system and its constituent devices are able to operate correctly at the limits specified in the implementation statement; for example:

- Maximum number of ballots;

- Maximum number of ballot positions;

- Maximum number of ballot styles;

- Maximum number of contests;

- Maximum vote total (counter capacity);

- Maximum number of provisional, challenged, or review-required ballots;

- Maximum number of contest choices per contest; and

- Any similar limits that apply.

Applies to: Voting system

DISCUSSION

See Part 1: 2.4 “implementation statement”. Not every kind of limit applies to every kind of device; for example, EBMs may not have a limit on the number of ballots they can handle.

If an implementation limit is sufficiently great that it cannot be verified through operational testing without severe expense and hardship, the test lab SHALL attest this in the test report and substitute a combination of design review, logic verification, and operational testing to a reduced limit.

Applies to: Voting system

DISCUSSION

For example, since counter capacity can easily be designed to $2^{32}$ and beyond without straining current technology, some reasonable limit for required operational testing is needed. However, it is preferable to test the limit operationally if there is any way to accomplish it.
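As a minimal sketch of how operational testing to a reduced limit can complement design review and logic verification, assume a hypothetical counter whose capacity is set by a bit-width parameter (the class `VoteCounter` and the function `exercise_limit` are illustrative, not a design these VVSG prescribe). Because the overflow logic is identical at every width, driving an 8-bit instance to its limit one vote at a time exercises the same code path that, at 32 bits, could not practically be reached operationally.

```python
class VoteCounter:
    """Hypothetical counter whose capacity is fixed by a bit-width parameter."""

    def __init__(self, bits: int) -> None:
        if bits <= 0:
            raise ValueError("bits must be positive")
        self.capacity = 2**bits - 1  # largest total an unsigned counter can hold
        self.total = 0

    def increment(self) -> None:
        # Refuse the vote rather than silently wrapping around to zero.
        if self.total >= self.capacity:
            raise OverflowError(f"counter full at {self.capacity}")
        self.total += 1


def exercise_limit(bits: int) -> bool:
    """Cast votes one at a time up to capacity, then confirm the next is refused."""
    counter = VoteCounter(bits)
    for _ in range(counter.capacity):
        counter.increment()
    try:
        counter.increment()
    except OverflowError:
        return counter.total == counter.capacity  # total must be left intact
    return False


print(exercise_limit(8))         # True: 255 votes accepted, the 256th refused
print(VoteCounter(32).capacity)  # 4294967295 votes: impractical to cast one by one
```

The design review would then need to confirm that the production build uses the same logic with only the width changed; the sketch does not establish that by itself.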

The test lab SHALL execute tests to verify that the system is able to respond gracefully to attempts to process more than the expected number of ballots per precinct, more than the expected number of precincts, higher than expected volume or ballot tabulation rate, or any similar conditions that tend to overload the system's capacity to process, store, and report data.

Applies to: Voting system

DISCUSSION

In particular, Requirement Part 1: 7.5.6-A should be verified through operational testing if the limit is practically testable.
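The limit and overload requirements above pair naturally as boundary-value tests: one case exactly at each declared limit, where correct operation is required, and one just beyond it, where a graceful response is required. The following is a sketch under stated assumptions: the limit names and values are hypothetical stand-ins for an implementation statement, and `LimitCase` and `boundary_cases` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LimitCase:
    limit_name: str
    quantity: int
    within_limit: bool  # False: the system must respond gracefully, not fail


def boundary_cases(limits: dict[str, int]) -> list[LimitCase]:
    """Generate an at-limit case and a just-beyond-limit case per declared limit."""
    cases = []
    for name, value in sorted(limits.items()):
        cases.append(LimitCase(name, value, True))       # must operate correctly
        cases.append(LimitCase(name, value + 1, False))  # overload condition
    return cases


# Hypothetical figures standing in for an implementation statement.
declared = {"ballot_styles": 500, "contests": 200, "choices_per_contest": 64}
for case in boundary_cases(declared):
    print(case)
```

A real campaign would also vary combinations of limits and sustained rates, which this sketch does not attempt.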

The test lab SHALL conduct a volume test in conditions approximating normal use in an election. The entire system SHALL be tested, from election definition through the reporting and auditing of final results.

Applies to: Voting system

DISCUSSION

Data collected during this test contribute substantially to the evaluations of reliability, accuracy, and misfeed rate (see Part 3: 5.3 “Benchmarks”).

Source: [CA06]

For systems that include VEBDs, a minimum of 100 VEBDs SHALL be tested and a minimum of 110 ballots SHALL be cast manually on each VEBD.

Applies to: VEBD

DISCUSSION

For vote-by-phone systems, this would mean having 100 concurrent callers, not necessarily 100 separate servers to answer the calls, if one server suffices to handle many incoming calls simultaneously. Other client-server systems would be analogous.

To ensure that the correct results are known, test voters should be furnished with predefined scripts that specify the votes that they should cast.

Source: [CA06]
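Predefined voter scripts make the correct results known before the test starts: the expected totals are simply the tally of the scripts. A minimal sketch, assuming a single hypothetical contest with three choices and random (but seeded, hence reproducible) selections; `make_scripts` and `expected_totals` are illustrative names, and real scripts would cover every contest and ballot style.

```python
import random
from collections import Counter


def make_scripts(devices, ballots_per_device, choices, seed=0):
    """Return {device_id: [choice, ...]}, one predefined vote per scripted ballot."""
    rng = random.Random(seed)  # fixed seed makes the scripts reproducible
    return {
        device: [rng.choice(choices) for _ in range(ballots_per_device)]
        for device in range(devices)
    }


def expected_totals(scripts):
    """Tally the scripts to obtain the results the system should report."""
    totals = Counter()
    for votes in scripts.values():
        totals.update(votes)
    return totals


# 100 VEBDs x 110 ballots, matching the minimums stated above.
scripts = make_scripts(100, 110, ["Candidate A", "Candidate B", "Write-in"])
totals = expected_totals(scripts)
print(sum(totals.values()))  # 11000 ballots in all
```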

For systems that include precinct tabulators, a minimum of 50 precinct tabulators SHALL be tested. No fewer than 10000 test ballots SHALL be used. No fewer than 400 test ballots SHALL be counted by each precinct tabulator.

Applies to: Precinct tabulator

DISCUSSION

[GPO90] 7.5 specified, "The total number of ballots to be processed by each precinct counting device during these tests SHALL be at least ten times the number of ballots expected to be counted on a single device in an election (500 to 750), but in no case less than 5,000."

It is permissible to reuse test ballots. However, all 10000 test ballots must be used at least once, and each precinct tabulator must count at least 400 distinct ballots; cycling 100 ballots 4 times through a given tabulator would not suffice. See also Requirement Part 3: 2.5.3-A (Complete system testing).

Source: [CA06]
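The precinct tabulator minimums can be checked mechanically against a planned ballot allocation before testing begins. A sketch, assuming a plan is represented as a mapping from a hypothetical tabulator ID to the list of ballot IDs fed to it (repeats allowed); `check_precinct_plan` is an illustrative name.

```python
def check_precinct_plan(deck, plan, min_tabulators=50, min_deck=10000, min_distinct=400):
    """Return a list of problems with a ballot allocation plan (empty if OK).

    deck: set of test ballot IDs.
    plan: {tabulator_id: list of ballot IDs fed to that tabulator, repeats allowed}.
    """
    problems = []
    if len(plan) < min_tabulators:
        problems.append(f"{len(plan)} tabulators; at least {min_tabulators} required")
    if len(deck) < min_deck:
        problems.append(f"deck of {len(deck)}; at least {min_deck} required")
    used = set()
    for tab, fed in plan.items():
        distinct = set(fed)  # reused ballots count only once per tabulator
        used |= distinct
        if len(distinct) < min_distinct:
            problems.append(
                f"{tab}: {len(distinct)} distinct ballots; at least {min_distinct} required")
    unused = deck - used
    if unused:
        problems.append(f"{len(unused)} deck ballots never counted")
    return problems


deck = set(range(10000))
# Tabulator i counts 400 consecutive ballots starting at 200*i (wrapping at
# 10000), so every ballot in the deck is counted at least once.
good = {f"T{i}": [(200 * i + k) % 10000 for k in range(400)] for i in range(50)}
print(check_precinct_plan(deck, good))  # []

bad = dict(good)
bad["T0"] = list(range(100)) * 4  # cycling 100 ballots 4 times
print(check_precinct_plan(deck, bad))  # ['T0: 100 distinct ballots; at least 400 required']
```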

For systems that include central tabulators, a minimum of 2 central tabulators SHALL be tested. No fewer than 10000 test ballots SHALL be used. A minimum ballot volume of 75000 (total across all tabulators) SHALL be tested, and no fewer than 10000 test ballots SHALL be counted by each central tabulator.

Applies to: Central tabulator

DISCUSSION

[CA06] did not specify test parameters for central tabulators. The test parameters specified here are based on the smallest case provided for central count systems in Exhibit J-1 of Appendix J, Acceptance Test Guidelines for P&M Voting Systems, of [GPO90]. An alternative would be to derive test parameters from the test specified in [GPO90] 7.3.3.2 and (differently) in [VSS2002]/[VVSG2005] II.4.7.1. A test of 163 hours' duration at a ballot tabulation rate of 300 ballots per hour yields a total ballot volume of 48900 (presumably, but not necessarily, on a single tabulator).

[GPO90] 7.5 specified, "The number of test ballots for each central counting device SHALL be at least thirty times the number that would be expected to be voted on a single precinct count device, but in no case less than 15,000."

The ballot volume of 75000 is the total across all tabulators; for example, one could test 25000 ballots on each of 3 tabulators. The test deck must contain at least 10000 ballots; a deck of 15000 ballots could be cycled 5 times to generate the required total volume. See also Requirement Part 3: 2.5.3-A (Complete system testing).

Source: [GPO90] Exhibit J-1 (Central Count)
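A companion check for the central tabulator minimums might look like the sketch below. It assumes that the per-tabulator minimum of 10000 counts total volume with deck cycling included, since, unlike the precinct requirement, this requirement does not say "distinct"; that reading, the plan representation, and the name `check_central_plan` are assumptions.

```python
def check_central_plan(deck_size, volumes, min_tabulators=2, min_deck=10000,
                       min_total=75000, min_each=10000):
    """Return a list of problems (empty if OK).

    volumes: {tabulator_id: ballots counted, deck cycling included}.
    """
    problems = []
    if len(volumes) < min_tabulators:
        problems.append(f"{len(volumes)} tabulators; at least {min_tabulators} required")
    if deck_size < min_deck:
        problems.append(f"deck of {deck_size}; at least {min_deck} required")
    total = sum(volumes.values())
    if total < min_total:
        problems.append(f"total volume {total}; at least {min_total} required")
    for tab, count in volumes.items():
        if count < min_each:
            problems.append(f"{tab}: {count} ballots; at least {min_each} required")
    return problems


# A 15000-ballot deck cycled 5 times, split as 25000 ballots on each of 3 tabulators.
print(check_central_plan(15000, {"C1": 25000, "C2": 25000, "C3": 25000}))  # []
# Enough total volume, but one tabulator counts too few ballots.
print(check_central_plan(15000, {"C1": 70000, "C2": 5000}))
```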

The testing of MCOS SHALL include marks filled according to the recommended instructions to voters, imperfect marks as specified in Requirement Part 1: 7.7.5-D, and ballots with folds that do not intersect with voting targets.

Applies to: MCOS

Source: Numerous public comments and issues

The test lab SHALL execute tests to verify that the system is able to produce and utilize ballots in all of the languages that are claimed to be supported in the implementation statement.

The test lab SHALL execute tests to verify that the system is able to detect, handle, and recover from abnormal input data, operator actions, and conditions.

The test lab SHALL execute tests to verify that the system detects and handles operator errors such as inserting control cards out of sequence or attempting to install configuration data that are not properly coded for the device.

Applies to: Voting system

Source: [GPO90] 8.8

The test lab SHALL execute tests to check that the system is able to respond to hardware malfunctions in a manner compliant with the requirements of Part 1: 6.4.1.9 “Recovery”.

Applies to: Voting system

DISCUSSION

This capability may be checked by any convenient means (e.g., removing power or disconnecting a cable) in any equipment associated with ballot processing.

This test pertains to "fail safe" behaviors as discussed in Requirement Part 3: 5.2.3-A. The test lab may be unable to produce a triggering event, in which case the test is passed by default.

Source: [GPO90] 8.5

For systems that use networking and/or telecommunications capabilities, the test lab SHALL execute tests to check that the system is able to detect, handle, and recover from interference with or loss of the communications link.

Applies to: Voting system

DISCUSSION

This test pertains to "fail safe" behaviors as discussed in Requirement Part 3: 5.2.3-A. The test lab may be unable to produce a triggering event, in which case the test is passed by default.

The test lab SHALL execute tests that provide coverage of the full range of system functionality specified in the manufacturer's documentation, including functionality that exceeds the specific requirements of the VVSG.

Applies to: Voting system

DISCUSSION

Since the nature of the requirements specified by the manufacturer-supplied system documentation is unknown, conformity for implementation-specific functionality may be subject to interpretation. Nevertheless, egregious disagreements between the behavior of the system and the behavior specified by the manufacturer should lead to a defensible adverse finding.

The test lab SHALL prepare a detailed matrix of VVSG requirements, system functions, and the tests that exercise them.
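One simple way to hold such a matrix is as (requirement, system function, test) triples indexed by requirement, which also makes gaps in coverage easy to list. A sketch under stated assumptions: the requirement IDs "R1" to "R3", the test IDs, and the names `build_matrix` and `uncovered` are hypothetical placeholders.

```python
from collections import defaultdict


def build_matrix(rows):
    """rows: iterable of (requirement_id, system_function, test_id) triples."""
    matrix = defaultdict(lambda: defaultdict(set))
    for req, func, test in rows:
        matrix[req][func].add(test)
    return matrix


def uncovered(requirements, matrix):
    """Requirements that no test in the matrix exercises."""
    return sorted(r for r in requirements if not any(matrix.get(r, {}).values()))


rows = [
    ("R1", "Opening the polls", "T-001"),
    ("R1", "Opening the polls", "T-002"),
    ("R2", "Generating election data reports", "T-003"),
]
matrix = build_matrix(rows)
print(uncovered(["R1", "R2", "R3"], matrix))  # ['R3']
```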

Pass criteria for tests that are adopted from a canonical functional test suite are defined by that test suite. For all other tests, the test lab SHALL define pass criteria using the VVSG (for standard functionality) and the manufacturer-supplied system documentation (for implementation-specific functionality) to determine acceptable ranges of performance.

Applies to: Voting system

DISCUSSION

Since the nature of the requirements specified by the manufacturer-supplied system documentation is unknown, conformity for implementation-specific functionality may be subject to interpretation. Nevertheless, egregious disagreements between the behavior of the system and the behavior specified by the manufacturer should lead to a defensible adverse finding.

5.3 Benchmarks

5.3.1 General method

Reliability, accuracy, and misfeed rate are measured using ratios, each of which is the number of some kind of event (failures, errors, or misfeeds, respectively) divided by some measure of voting volume. The test method discussed here is applicable generically to all three ratios; hence, this discussion will refer to events and volume without specifying a particular definition of either.

By keeping track of the number of events and the volume over the course of a test campaign, one can trivially calculate the observed cumulative event rate by dividing the number of events by the volume. However, the observed event rate is not necessarily a good indication of the true event rate. The true event rate describes the expected performance of the system in the field, but it cannot be observed in a test campaign of finite duration, using a finite-sized sample. Consequently, the true event rate can only be estimated using statistical methods.

In accordance with the current practice in voting system testing, the system submitted for testing is assumed to be a representative sample, so the variability of devices of the same type is out of scope.

The test method makes the simplifying assumption that events follow a Poisson distribution, which means that the probability of an event occurring is assumed to be the same for each unit of volume processed. In reality, some random events satisfy this assumption, but there are also nonrandom events that do not. For example, a logic error in tabulation software might be triggered every time a particular voting option is used. Consequently, a test campaign that exercised that voting option often would be more likely to indicate rejection based on reliability or accuracy than a test campaign that used different tests. However, since these VVSG require absolute correctness of tabulation logic, the only undesirable outcome is the one in which the system containing the logic error is accepted. Other evaluations specified in these VVSG, such as functional testing and logic verification, are better suited to detecting systems that produce nonrandom errors and failures. Thus, when all specified evaluations are used together, the different test methods complement each other, and the limitation of this particular test method with respect to nonrandom events is acceptable.

For simplicity, all three cases (failures, errors, and misfeeds) are modeled using a Poisson distribution, which treats volume as continuous, rather than a binomial distribution, which treats volume as a discrete number of trials. In this application, where the probability of an event occurring within a unit of volume is small, the difference in results between the two models is negligible.
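The claim that the two models agree when the per-unit event probability is small can be checked numerically. The sketch below compares the binomial CDF for a hypothetical 1000 units of volume with event probability 0.001 per unit against the Poisson CDF with the same mean (1.0); the figures are illustrative, not benchmarks from these VVSG.

```python
import math


def binomial_cdf(k, trials, p):
    """Probability of k or fewer events in a fixed number of discrete trials."""
    return sum(math.comb(trials, i) * p**i * (1 - p) ** (trials - i) for i in range(k + 1))


def poisson_cdf(k, mean):
    """Probability of k or fewer events when `mean` events are expected."""
    return sum(mean**i * math.exp(-mean) / math.factorial(i) for i in range(k + 1))


trials, p = 1000, 0.001  # hypothetical volume and per-unit event probability
for k in range(4):
    b = binomial_cdf(k, trials, p)
    q = poisson_cdf(k, trials * p)
    print(k, round(b, 6), round(q, 6), f"difference {abs(b - q):.1e}")
```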

The problem is approached through classical hypothesis testing. The null hypothesis ($H_0$) is that the true event rate, $r_t$, is less than or equal to the benchmark event rate, $r_b$ (which means that the system is conforming).

The alternative hypothesis ($H_1$) is that the true event rate, $r_t$, is greater than the benchmark event rate, $r_b$ (which means that the system is non-conforming).

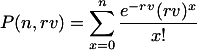

Assuming an event rate of $r$, the probability of observing $n$ or fewer events for volume $v$ is the value of the Poisson cumulative distribution function, $P(n, rv) = \sum_{i=0}^{n} \frac{(rv)^i e^{-rv}}{i!}$.

Let $n_o$ be the number of events observed during testing and $v_o$ be the volume produced during testing. The probability $\alpha$ of rejecting the null hypothesis when it is in fact true is limited to less than 0.1. Thus, $H_0$ is rejected only if the probability of $n_o$ or more events occurring, given a (marginally) conforming system, is less than 0.1. That is, $H_0$ is rejected if $1 - P(n_o - 1, r_b v_o) < 0.1$, which is equivalent to $P(n_o - 1, r_b v_o) > 0.9$. This corresponds to the 90th percentile of the distribution of the number of events that would be expected to occur in a marginally conforming system.
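The rejection rule can be sketched directly from the Poisson CDF. This is an illustration, not a prescribed implementation: `poisson_cdf` sums the terms in log space so that large means do not underflow, `reject_h0` is a hypothetical name, and the benchmark of $10^{-4}$ in the usage lines is an arbitrary example value.

```python
import math


def poisson_cdf(n, mean):
    """P(n, mean): probability of n or fewer events when `mean` events are expected."""
    if n < 0:
        return 0.0
    if mean == 0:
        return 1.0
    log_mean = math.log(mean)
    # Each term (mean**i * e**-mean / i!) is computed in log space, then summed.
    return math.fsum(math.exp(i * log_mean - mean - math.lgamma(i + 1)) for i in range(n + 1))


def reject_h0(n_obs, v_obs, r_bench, alpha=0.1):
    """True if n_obs or more events would be improbable for a marginally conforming system."""
    return 1 - poisson_cdf(n_obs - 1, r_bench * v_obs) < alpha


# One observed event against a benchmark of 1e-4: the outcome flips near v = 1053.6.
print(reject_h0(1, 1053, 1e-4))  # True
print(reject_h0(1, 1054, 1e-4))  # False
```

With no observed events, $P(-1, \cdot) = 0$, so $H_0$ is never rejected, which matches the rule above.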

If at the conclusion of the test campaign the null hypothesis is not rejected, this does not necessarily mean that conformity has been demonstrated. It merely means that there is insufficient evidence to demonstrate non-conformity with 90 % confidence.

Calculating what has been demonstrated with 90 % confidence, after the fact, is completely separate from the test described above, but the logic is similar. Suppose there are $n_o$ observed events after volume $v_o$. Solving the equation $P(n_o, r_d v_o) = 0.1$ for $r_d$ finds the "demonstrated rate" $r_d$ such that, if the true rate $r_t$ were greater than $r_d$, the probability of having $n_o$ or fewer events would be less than 0.1. The value of $r_d$ could be greater or less than the benchmark event rate $r_b$ mentioned above.

Note that the length of testing is determined in advance by the approved test plan. Adjusting the length of testing based on the observed performance of the system in the tests already executed would bias the results and is not permitted. A Probability Ratio Sequential Test (PRST) [Wald47][Epstein55][MIL96], as specified in previous versions of these VVSG, varies the length of testing without introducing bias, but practical difficulties result when the length of testing determined by the PRST disagrees with the length of testing otherwise required by the test plan.

5.3.2 Critical values

For a fixed probability $p$ and a fixed value of $n$, the value of $rv$ (treating the product of rate and volume as a single quantity) satisfying $P(n, rv) = p$ is a constant. Part 3: Table 5-1 provides the values of $rv$ for $p = 0.1$ and $p = 0.9$ for $0 \le n \le 750$.
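Because $P(n, x)$ decreases steadily as $x$ grows, each factor can also be found by bisection, a simpler root finder than the `fsolve` call in the Octave script that generated the table. A sketch, assuming Python's standard `math` module; `rv_factor` is a hypothetical name, and the results should agree with Table 5-1 to the digits printed there.

```python
import math


def poisson_cdf(n, mean):
    """P(n, mean), summed in log space to avoid underflow for large means."""
    if n < 0:
        return 0.0
    if mean == 0:
        return 1.0
    log_mean = math.log(mean)
    return math.fsum(math.exp(i * log_mean - mean - math.lgamma(i + 1)) for i in range(n + 1))


def rv_factor(n, p, tol=1e-12):
    """Solve P(n, x) = p for x by bisection; P(n, x) decreases as x grows."""
    lo, hi = 0.0, 1.0
    while poisson_cdf(n, hi) > p:  # widen the bracket until it contains the root
        hi *= 2
    while hi - lo > tol * max(1.0, hi):
        mid = (lo + hi) / 2
        if poisson_cdf(n, mid) > p:
            lo = mid  # P still above p, so the root lies to the right
        else:
            hi = mid
    return (lo + hi) / 2


for n in (0, 1, 10):
    print(n, f"{rv_factor(n, 0.1):.7g}", f"{rv_factor(n, 0.9):.7g}")
```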

Given $n_o$ observed events after volume $v_o$, the demonstrated event rate $r_d$ is found by solving $P(n_o, r_d v_o) = 0.1$ for $r_d$. The pertinent factor is in the second column ($p = 0.1$) in the row for $n = n_o$; dividing this factor by $v_o$ yields $r_d$. For example, a volume of 600 with no events demonstrates an event rate of $2.302585 / 600$, or $3.837642 \times 10^{-3}$.

Since the condition for rejecting $H_0$ is $P(n_o - 1, r_b v_o) > 0.9$, the critical value $v_c$, which is the minimum volume at which $H_0$ is not rejected for $n_o$ observed events and event rate benchmark $r_b$, is found by solving $P(n_o - 1, r_b v_c) = 0.9$ for $v_c$. The pertinent factor is in the third column ($p = 0.9$) in the row for $n = n_o - 1$; dividing this factor by $r_b$ yields $v_c$. For example, if a test with event rate benchmark $r_b = 10^{-4}$ resulted in one observed event, then the system would be rejected unless the actual volume was at least $0.1053605 / 10^{-4}$, or 1053.605. Where the measurement of volume is discrete rather than continuous, one would round up to the next integer (here, 1054).
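Once the factor has been read from Table 5-1, both calculations reduce to a single division. A sketch applying them to the factors for $n = 0$; the function names are hypothetical, and the `discrete` flag reflects the rounding rule for volumes counted in whole units.

```python
import math


def demonstrated_rate(factor_p01, volume):
    """r_d: the p = 0.1 factor for n = n_o, divided by the observed volume v_o."""
    return factor_p01 / volume


def critical_volume(factor_p09, benchmark, discrete=True):
    """v_c: the p = 0.9 factor for n = n_o - 1, divided by the benchmark r_b."""
    v = factor_p09 / benchmark
    return math.ceil(v) if discrete else v  # whole units: round up


print(f"{demonstrated_rate(2.302585, 600):.6e}")  # 3.837642e-03
print(critical_volume(0.1053605, 1e-4))           # 1054
```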

The values in Part 3: Table 5-1 were generated using the following script with Octave[2] version 2.1.73.

```octave
silent_functions=1

# Function for the root finder to zero. fsolve won't pass extra
# parameters to the function being solved, so we must use globals.
# nGlobal is number of events; pGlobal is probability.
function rvRootFn = rvRoot (rv)
  global nGlobal pGlobal
  rvRootFn = poisson_cdf (nGlobal, rv) - pGlobal
endfunction

# Find rv given n and p. To initialize the root finder, provide
# startingGuess that is greater than zero and approximates the
# answer.
function rvFn = rv (n, p, startingGuess)
  global nGlobal pGlobal
  nGlobal = n
  pGlobal = p
  startingGuess > 0 || error ("bad starting guess")
  [rvFn, info] = fsolve ("rvRoot", startingGuess)
  if (info != 1)
    perror ("fsolve", info)
  endif
endfunction

function table
  printf (" n P=0.1 P=0.9\n")
  for n = 0:750
    rv01 = rv (n, 0.1, -4.9529e-05*n*n + 1.0715*n + 2.302585093)
    rv09 = rv (n, 0.9, 4.9522e-05*n*n + 0.9285*n + 0.105360516)
    printf ("%3u %.6e %.6e\n", n, rv01, rv09)
  endfor
endfunction

fsolve_options ("tolerance", 5e-12)
table
```

Table 5-1 Factors for calculation of critical values

| n | rv satisfying P(n,rv)=0.1 | rv satisfying P(n,rv)=0.9 | n | rv satisfying P(n,rv)=0.1 | rv satisfying P(n,rv)=0.9 | n | rv satisfying P(n,rv)=0.1 | rv satisfying P(n,rv)=0.9 |

| 0 | 2.302585 | 0.1053605 | 251 | 272.5461 | 231.8821 | 501 | 530.9192 | 473.509 |

| 1 | 3.88972 | 0.5318116 | 252 | 273.5864 | 232.8418 | 502 | 531.9478 | 474.4804 |

| 2 | 5.32232 | 1.102065 | 253 | 274.6267 | 233.8015 | 503 | 532.9764 | 475.4519 |

| 3 | 6.680783 | 1.74477 | 254 | 275.6669 | 234.7613 | 504 | 534.0049 | 476.4233 |

| 4 | 7.99359 | 2.432591 | 255 | 276.707 | 235.7212 | 505 | 535.0334 | 477.3948 |

| 5 | 9.274674 | 3.151898 | 256 | 277.747 | 236.6812 | 506 | 536.0619 | 478.3663 |

| 6 | 10.53207 | 3.894767 | 257 | 278.787 | 237.6412 | 507 | 537.0904 | 479.3379 |

| 7 | 11.77091 | 4.656118 | 258 | 279.8269 | 238.6013 | 508 | 538.1188 | 480.3094 |

| 8 | 12.99471 | 5.432468 | 259 | 280.8667 | 239.5615 | 509 | 539.1472 | 481.2811 |

| 9 | 14.20599 | 6.221305 | 260 | 281.9064 | 240.5218 | 510 | 540.1755 | 482.2527 |

| 10 | 15.40664 | 7.020747 | 261 | 282.946 | 241.4822 | 511 | 541.2039 | 483.2243 |

| 11 | 16.59812 | 7.829342 | 262 | 283.9856 | 242.4426 | 512 | 542.2322 | 484.196 |

| 12 | 17.78159 | 8.645942 | 263 | 285.0251 | 243.4031 | 513 | 543.2605 | 485.1677 |

| 13 | 18.95796 | 9.469621 | 264 | 286.0645 | 244.3637 | 514 | 544.2887 | 486.1395 |

| 14 | 20.12801 | 10.29962 | 265 | 287.1039 | 245.3243 | 515 | 545.317 | 487.1113 |

| 15 | 21.29237 | 11.1353 | 266 | 288.1432 | 246.2851 | 516 | 546.3452 | 488.0831 |

| 16 | 22.45158 | 11.97613 | 267 | 289.1824 | 247.2459 | 517 | 547.3734 | 489.0549 |

| 17 | 23.60609 | 12.82165 | 268 | 290.2215 | 248.2067 | 518 | 548.4015 | 490.0267 |

| 18 | 24.75629 | 13.67148 | 269 | 291.2605 | 249.1677 | 519 | 549.4296 | 490.9986 |

| 19 | 25.90253 | 14.52526 | 270 | 292.2995 | 250.1287 | 520 | 550.4577 | 491.9705 |

| 20 | 27.0451 | 15.38271 | 271 | 293.3384 | 251.0898 | 521 | 551.4858 | 492.9424 |

| 21 | 28.18427 | 16.24356 | 272 | 294.3773 | 252.0509 | 522 | 552.5138 | 493.9144 |

| 22 | 29.32027 | 17.10758 | 273 | 295.416 | 253.0122 | 523 | 553.5418 | 494.8864 |

| 23 | 30.4533 | 17.97457 | 274 | 296.4547 | 253.9735 | 524 | 554.5698 | 495.8584 |

| 24 | 31.58356 | 18.84432 | 275 | 297.4934 | 254.9349 | 525 | 555.5978 | 496.8304 |

| 25 | 32.71121 | 19.71669 | 276 | 298.5319 | 255.8963 | 526 | 556.6257 | 497.8025 |

| 26 | 33.83639 | 20.59152 | 277 | 299.5704 | 256.8578 | 527 | 557.6536 | 498.7746 |

| 27 | 34.95926 | 21.46867 | 278 | 300.6088 | 257.8194 | 528 | 558.6815 | 499.7467 |

| 28 | 36.07992 | 22.34801 | 279 | 301.6472 | 258.781 | 529 | 559.7094 | 500.7189 |

| 29 | 37.1985 | 23.22944 | 280 | 302.6855 | 259.7428 | 530 | 560.7372 | 501.691 |

| 30 | 38.3151 | 24.11285 | 281 | 303.7237 | 260.7046 | 531 | 561.765 | 502.6632 |

| 31 | 39.42982 | 24.99815 | 282 | 304.7618 | 261.6664 | 532 | 562.7928 | 503.6355 |

| 32 | 40.54274 | 25.88523 | 283 | 305.7999 | 262.6283 | 533 | 563.8205 | 504.6077 |

| 33 | 41.65395 | 26.77403 | 284 | 306.8379 | 263.5903 | 534 | 564.8482 | 505.58 |

| 34 | 42.76352 | 27.66447 | 285 | 307.8758 | 264.5524 | 535 | 565.8759 | 506.5523 |

| 35 | 43.87152 | 28.55647 | 286 | 308.9137 | 265.5145 | 536 | 566.9036 | 507.5246 |

| 36 | 44.97802 | 29.44998 | 287 | 309.9515 | 266.4767 | 537 | 567.9313 | 508.497 |

| 37 | 46.08308 | 30.34493 | 288 | 310.9893 | 267.439 | 538 | 568.9589 | 509.4694 |

| 38 | 47.18676 | 31.24126 | 289 | 312.0269 | 268.4013 | 539 | 569.9865 | 510.4418 |

| 39 | 48.2891 | 32.13892 | 290 | 313.0646 | 269.3637 | 540 | 571.014 | 511.4142 |

| 40 | 49.39016 | 33.03786 | 291 | 314.1021 | 270.3261 | 541 | 572.0416 | 512.3866 |

| 41 | 50.48999 | 33.93804 | 292 | 315.1396 | 271.2886 | 542 | 573.0691 | 513.3591 |

| 42 | 51.58863 | 34.83941 | 293 | 316.177 | 272.2512 | 543 | 574.0966 | 514.3316 |

| 43 | 52.68612 | 35.74192 | 294 | 317.2144 | 273.2138 | 544 | 575.1241 | 515.3042 |

| 44 | 53.7825 | 36.64555 | 295 | 318.2517 | 274.1765 | 545 | 576.1515 | 516.2767 |

| 45 | 54.87781 | 37.55024 | 296 | 319.2889 | 275.1393 | 546 | 577.1789 | 517.2493 |

| 46 | 55.97209 | 38.45597 | 297 | 320.3261 | 276.1021 | 547 | 578.2063 | 518.2219 |

| 47 | 57.06535 | 39.36271 | 298 | 321.3632 | 277.065 | 548 | 579.2337 | 519.1945 |

| 48 | 58.15765 | 40.27042 | 299 | 322.4002 | 278.028 | 549 | 580.261 | 520.1672 |

| 49 | 59.249 | 41.17907 | 300 | 323.4372 | 278.991 | 550 | 581.2884 | 521.1399 |

| 50 | 60.33944 | 42.08863 | 301 | 324.4741 | 279.9541 | 551 | 582.3156 | 522.1126 |

| 51 | 61.42899 | 42.99909 | 302 | 325.511 | 280.9172 | 552 | 583.3429 | 523.0853 |

| 52 | 62.51768 | 43.9104 | 303 | 326.5478 | 281.8804 | 553 | 584.3702 | 524.0581 |

| 53 | 63.60553 | 44.82255 | 304 | 327.5845 | 282.8437 | 554 | 585.3974 | 525.0309 |

| 54 | 64.69257 | 45.73552 | 305 | 328.6212 | 283.807 | 555 | 586.4246 | 526.0037 |

| 55 | 65.77881 | 46.64928 | 306 | 329.6578 | 284.7704 | 556 | 587.4517 | 526.9765 |

| 56 | 66.86429 | 47.5638 | 307 | 330.6944 | 285.7338 | 557 | 588.4789 | 527.9493 |

| 57 | 67.94901 | 48.47908 | 308 | 331.7309 | 286.6973 | 558 | 589.506 | 528.9222 |

| 58 | 69.033 | 49.39509 | 309 | 332.7673 | 287.6609 | 559 | 590.5331 | 529.8951 |

| 59 | 70.11628 | 50.31182 | 310 | 333.8037 | 288.6245 | 560 | 591.5602 | 530.8681 |

| 60 | 71.19887 | 51.22923 | 311 | 334.84 | 289.5882 | 561 | 592.5872 | 531.841 |

| 61 | 72.28078 | 52.14733 | 312 | 335.8763 | 290.5519 | 562 | 593.6142 | 532.814 |

| 62 | 73.36203 | 53.06608 | 313 | 336.9125 | 291.5157 | 563 | 594.6412 | 533.787 |

| 63 | 74.44263 | 53.98548 | 314 | 337.9486 | 292.4796 | 564 | 595.6682 | 534.76 |

| 64 | 75.5226 | 54.90551 | 315 | 338.9847 | 293.4435 | 565 | 596.6952 | 535.7331 |

| 65 | 76.60196 | 55.82616 | 316 | 340.0208 | 294.4074 | 566 | 597.7221 | 536.7061 |

| 66 | 77.68071 | 56.74741 | 317 | 341.0568 | 295.3715 | 567 | 598.749 | 537.6792 |

| 67 | 78.75888 | 57.66924 | 318 | 342.0927 | 296.3355 | 568 | 599.7759 | 538.6523 |

| 68 | 79.83647 | 58.59165 | 319 | 343.1285 | 297.2997 | 569 | 600.8028 | 539.6255 |

| 69 | 80.9135 | 59.51463 | 320 | 344.1643 | 298.2639 | 570 | 601.8296 | 540.5986 |

| 70 | 81.98997 | 60.43815 | 321 | 345.2001 | 299.2281 | 571 | 602.8564 | 541.5718 |

| 71 | 83.06591 | 61.36221 | 322 | 346.2358 | 300.1924 | 572 | 603.8832 | 542.545 |

| 72 | 84.14132 | 62.2868 | 323 | 347.2714 | 301.1568 | 573 | 604.9099 | 543.5183 |

| 73 | 85.21622 | 63.21191 | 324 | 348.307 | 302.1212 | 574 | 605.9367 | 544.4915 |

| 74 | 86.29061 | 64.13753 | 325 | 349.3426 | 303.0857 | 575 | 606.9634 | 545.4648 |

| 75 | 87.3645 | 65.06364 | 326 | 350.378 | 304.0502 | 576 | 607.9901 | 546.4381 |

| 76 | 88.4379 | 65.99023 | 327 | 351.4135 | 305.0148 | 577 | 609.0168 | 547.4115 |

| 77 | 89.51083 | 66.91731 | 328 | 352.4488 | 305.9794 | 578 | 610.0434 | 548.3848 |

| 78 | 90.58329 | 67.84485 | 329 | 353.4842 | 306.9441 | 579 | 611.07 | 549.3582 |

| 79 | 91.65529 | 68.77285 | 330 | 354.5194 | 307.9088 | 580 | 612.0966 | 550.3316 |

| 80 | 92.72684 | 69.7013 | 331 | 355.5546 | 308.8736 | 581 | 613.1232 | 551.305 |

| 81 | 93.79795 | 70.63019 | 332 | 356.5898 | 309.8384 | 582 | 614.1498 | 552.2785 |

| 82 | 94.86863 | 71.55951 | 333 | 357.6249 | 310.8033 | 583 | 615.1763 | 553.2519 |

| 83 | 95.93888 | 72.48927 | 334 | 358.6599 | 311.7683 | 584 | 616.2028 | 554.2254 |

| 84 | 97.00871 | 73.41944 | 335 | 359.6949 | 312.7333 | 585 | 617.2293 | 555.1989 |

| 85 | 98.07813 | 74.35002 | 336 | 360.7299 | 313.6983 | 586 | 618.2558 | 556.1725 |

| 86 | 99.14714 | 75.281 | 337 | 361.7648 | 314.6634 | 587 | 619.2822 | 557.146 |

| 87 | 100.2158 | 76.21239 | 338 | 362.7996 | 315.6286 | 588 | 620.3086 | 558.1196 |

| 88 | 101.284 | 77.14416 | 339 | 363.8344 | 316.5938 | 589 | 621.335 | 559.0932 |

| 89 | 102.3518 | 78.07631 | 340 | 364.8692 | 317.5591 | 590 | 622.3614 | 560.0668 |

| 90 | 103.4193 | 79.00885 | 341 | 365.9038 | 318.5244 | 591 | 623.3878 | 561.0405 |

| 91 | 104.4864 | 79.94175 | 342 | 366.9385 | 319.4897 | 592 | 624.4141 | 562.0141 |

| 92 | 105.5531 | 80.87502 | 343 | 367.9731 | 320.4552 | 593 | 625.4404 | 562.9878 |

| 93 | 106.6195 | 81.80865 | 344 | 369.0076 | 321.4206 | 594 | 626.4667 | 563.9615 |

| 94 | 107.6855 | 82.74263 | 345 | 370.0421 | 322.3861 | 595 | 627.493 | 564.9353 |

| 95 | 108.7512 | 83.67695 | 346 | 371.0765 | 323.3517 | 596 | 628.5192 | 565.909 |

| 96 | 109.8165 | 84.61162 | 347 | 372.1109 | 324.3173 | 597 | 629.5454 | 566.8828 |

| 97 | 110.8815 | 85.54663 | 348 | 373.1453 | 325.283 | 598 | 630.5716 | 567.8566 |

| 98 | 111.9462 | 86.48197 | 349 | 374.1796 | 326.2487 | 599 | 631.5978 | 568.8304 |

| 99 | 113.0105 | 87.41764 | 350 | 375.2138 | 327.2144 | 600 | 632.624 | 569.8043 |

| 100 | 114.0745 | 88.35362 | 351 | 376.248 | 328.1802 | 601 | 633.6501 | 570.7781 |

| 101 | 115.1382 | 89.28993 | 352 | 377.2821 | 329.1461 | 602 | 634.6762 | 571.752 |

| 102 | 116.2016 | 90.22655 | 353 | 378.3162 | 330.112 | 603 | 635.7023 | 572.7259 |

| 103 | 117.2647 | 91.16347 | 354 | 379.3503 | 331.078 | 604 | 636.7284 | 573.6999 |

| 104 | 118.3275 | 92.1007 | 355 | 380.3843 | 332.044 | 605 | 637.7544 | 574.6738 |

| 105 | 119.3899 | 93.03823 | 356 | 381.4182 | 333.01 | 606 | 638.7804 | 575.6478 |

| 106 | 120.4521 | 93.97605 | 357 | 382.4521 | 333.9761 | 607 | 639.8064 | 576.6218 |

| 107 | 121.514 | 94.91416 | 358 | 383.486 | 334.9422 | 608 | 640.8324 | 577.5958 |

| 108 | 122.5756 | 95.85256 | 359 | 384.5198 | 335.9084 | 609 | 641.8584 | 578.5699 |

| 109 | 123.6369 | 96.79124 | 360 | 385.5536 | 336.8747 | 610 | 642.8843 | 579.5439 |

| 110 | 124.698 | 97.7302 | 361 | 386.5873 | 337.841 | 611 | 643.9102 | 580.518 |

| 111 | 125.7587 | 98.66944 | 362 | 387.6209 | 338.8073 | 612 | 644.9361 | 581.4921 |

| 112 | 126.8192 | 99.60895 | 363 | 388.6546 | 339.7737 | 613 | 645.962 | 582.4662 |

| 113 | 127.8794 | 100.5487 | 364 | 389.6881 | 340.7401 | 614 | 646.9879 | 583.4404 |

| 114 | 128.9394 | 101.4888 | 365 | 390.7217 | 341.7066 | 615 | 648.0137 | 584.4145 |

| 115 | 129.9991 | 102.4291 | 366 | 391.7552 | 342.6731 | 616 | 649.0395 | 585.3887 |

| 116 | 131.0586 | 103.3696 | 367 | 392.7886 | 343.6396 | 617 | 650.0653 | 586.3629 |

| 117 | 132.1177 | 104.3104 | 368 | 393.822 | 344.6062 | 618 | 651.0911 | 587.3372 |

| 118 | 133.1767 | 105.2515 | 369 | 394.8553 | 345.5729 | 619 | 652.1168 | 588.3114 |

| 119 | 134.2354 | 106.1928 | 370 | 395.8886 | 346.5396 | 620 | 653.1426 | 589.2857 |

| 120 | 135.2938 | 107.1344 | 371 | 396.9219 | 347.5063 | 621 | 654.1683 | 590.26 |

| 121 | 136.352 | 108.0762 | 372 | 397.9551 | 348.4731 | 622 | 655.194 | 591.2343 |

| 122 | 137.41 | 109.0182 | 373 | 398.9883 | 349.4399 | 623 | 656.2196 | 592.2086 |

| 123 | 138.4677 | 109.9605 | 374 | 400.0214 | 350.4068 | 624 | 657.2453 | 593.183 |

| 124 | 139.5252 | 110.903 | 375 | 401.0545 | 351.3737 | 625 | 658.2709 | 594.1573 |

| 125 | 140.5825 | 111.8457 | 376 | 402.0875 | 352.3407 | 626 | 659.2965 | 595.1317 |

| 126 | 141.6395 | 112.7887 | 377 | 403.1205 | 353.3077 | 627 | 660.3221 | 596.1061 |

| 127 | 142.6963 | 113.7318 | 378 | 404.1535 | 354.2748 | 628 | 661.3477 | 597.0806 |

| 128 | 143.7529 | 114.6753 | 379 | 405.1864 | 355.2419 | 629 | 662.3732 | 598.055 |

| 129 | 144.8093 | 115.6189 | 380 | 406.2192 | 356.209 | 630 | 663.3987 | 599.0295 |

| 130 | 145.8655 | 116.5627 | 381 | 407.252 | 357.1762 | 631 | 664.4242 | 600.004 |

| 131 | 146.9214 | 117.5068 | 382 | 408.2848 | 358.1434 | 632 | 665.4497 | 600.9785 |

| 132 | 147.9771 | 118.4511 | 383 | 409.3176 | 359.1107 | 633 | 666.4752 | 601.953 |

| 133 | 149.0326 | 119.3955 | 384 | 410.3503 | 360.078 | 634 | 667.5006 | 602.9276 |

| 134 | 150.088 | 120.3402 | 385 | 411.3829 | 361.0453 | 635 | 668.5261 | 603.9022 |

| 135 | 151.1431 | 121.2851 | 386 | 412.4155 | 362.0127 | 636 | 669.5515 | 604.8768 |

| 136 | 152.198 | 122.2302 | 387 | 413.4481 | 362.9802 | 637 | 670.5768 | 605.8514 |

| 137 | 153.2527 | 123.1755 | 388 | 414.4806 | 363.9476 | 638 | 671.6022 | 606.826 |

| 138 | 154.3072 | 124.121 | 389 | 415.5131 | 364.9152 | 639 | 672.6276 | 607.8007 |

| 139 | 155.3615 | 125.0667 | 390 | 416.5455 | 365.8827 | 640 | 673.6529 | 608.7754 |

| 140 | 156.4156 | 126.0126 | 391 | 417.5779 | 366.8503 | 641 | 674.6782 | 609.7501 |

| 141 | 157.4695 | 126.9586 | 392 | 418.6103 | 367.818 | 642 | 675.7035 | 610.7248 |

| 142 | 158.5233 | 127.9049 | 393 | 419.6426 | 368.7856 | 643 | 676.7287 | 611.6995 |

| 143 | 159.5768 | 128.8514 | 394 | 420.6749 | 369.7534 | 644 | 677.754 | 612.6743 |

| 144 | 160.6302 | 129.798 | 395 | 421.7071 | 370.7211 | 645 | 678.7792 | 613.649 |

| 145 | 161.6834 | 130.7448 | 396 | 422.7393 | 371.689 | 646 | 679.8044 | 614.6238 |

| 146 | 162.7364 | 131.6918 | 397 | 423.7714 | 372.6568 | 647 | 680.8296 | 615.5986 |

| 147 | 163.7892 | 132.639 | 398 | 424.8035 | 373.6247 | 648 | 681.8548 | 616.5735 |

| 148 | 164.8418 | 133.5864 | 399 | 425.8356 | 374.5926 | 649 | 682.8799 | 617.5483 |

| 149 | 165.8943 | 134.5339 | 400 | 426.8676 | 375.5606 | 650 | 683.905 | 618.5232 |

| 150 | 166.9465 | 135.4816 | 401 | 427.8996 | 376.5286 | 651 | 684.9302 | 619.4981 |

| 151 | 167.9987 | 136.4295 | 402 | 428.9316 | 377.4966 | 652 | 685.9552 | 620.473 |

| 152 | 169.0506 | 137.3776 | 403 | 429.9635 | 378.4647 | 653 | 686.9803 | 621.4479 |

| 153 | 170.1024 | 138.3258 | 404 | 430.9954 | 379.4329 | 654 | 688.0054 | 622.4229 |

| 154 | 171.154 | 139.2742 | 405 | 432.0272 | 380.401 | 655 | 689.0304 | 623.3978 |

| 155 | 172.2054 | 140.2228 | 406 | 433.059 | 381.3692 | 656 | 690.0554 | 624.3728 |

| 156 | 173.2567 | 141.1715 | 407 | 434.0907 | 382.3375 | 657 | 691.0804 | 625.3478 |

| 157 | 174.3078 | 142.1204 | 408 | 435.1225 | 383.3058 | 658 | 692.1054 | 626.3228 |

| 158 | 175.3587 | 143.0695 | 409 | 436.1541 | 384.2741 | 659 | 693.1304 | 627.2979 |

| 159 | 176.4095 | 144.0187 | 410 | 437.1858 | 385.2425 | 660 | 694.1553 | 628.2729 |

| 160 | 177.4601 | 144.9681 | 411 | 438.2174 | 386.2109 | 661 | 695.1802 | 629.248 |

| 161 | 178.5106 | 145.9176 | 412 | 439.2489 | 387.1793 | 662 | 696.2051 | 630.2231 |

| 162 | 179.5609 | 146.8673 | 413 | 440.2805 | 388.1478 | 663 | 697.23 | 631.1982 |

| 163 | 180.6111 | 147.8171 | 414 | 441.3119 | 389.1163 | 664 | 698.2549 | 632.1734 |

| 164 | 181.6611 | 148.7671 | 415 | 442.3434 | 390.0848 | 665 | 699.2797 | 633.1485 |

| 165 | 182.7109 | 149.7173 | 416 | 443.3748 | 391.0534 | 666 | 700.3045 | 634.1237 |

| 166 | 183.7606 | 150.6676 | 417 | 444.4062 | 392.0221 | 667 | 701.3293 | 635.0989 |

| 167 | 184.8102 | 151.618 | 418 | 445.4375 | 392.9907 | 668 | 702.3541 | 636.0741 |

| 168 | 185.8596 | 152.5686 | 419 | 446.4688 | 393.9594 | 669 | 703.3789 | 637.0493 |

| 169 | 186.9089 | 153.5193 | 420 | 447.5001 | 394.9282 | 670 | 704.4036 | 638.0246 |

| 170 | 187.958 | 154.4702 | 421 | 448.5313 | 395.8969 | 671 | 705.4284 | 638.9999 |

| 171 | 189.0069 | 155.4213 | 422 | 449.5625 | 396.8658 | 672 | 706.4531 | 639.9751 |

| 172 | 190.0558 | 156.3724 | 423 | 450.5936 | 397.8346 | 673 | 707.4778 | 640.9505 |

| 173 | 191.1045 | 157.3237 | 424 | 451.6247 | 398.8035 | 674 | 708.5025 | 641.9258 |

| 174 | 192.153 | 158.2752 | 425 | 452.6558 | 399.7724 | 675 | 709.5271 | 642.9011 |

| 175 | 193.2014 | 159.2268 | 426 | 453.6868 | 400.7414 | 676 | 710.5518 | 643.8765 |

| 176 | 194.2497 | 160.1785 | 427 | 454.7178 | 401.7104 | 677 | 711.5764 | 644.8518 |

| 177 | 195.2978 | 161.1304 | 428 | 455.7488 | 402.6794 | 678 | 712.601 | 645.8272 |

| 178 | 196.3458 | 162.0824 | 429 | 456.7797 | 403.6485 | 679 | 713.6256 | 646.8027 |

| 179 | 197.3937 | 163.0345 | 430 | 457.8106 | 404.6176 | 680 | 714.6501 | 647.7781 |

| 180 | 198.4414 | 163.9868 | 431 | 458.8415 | 405.5867 | 681 | 715.6747 | 648.7535 |

| 181 | 199.489 | 164.9392 | 432 | 459.8723 | 406.5559 | 682 | 716.6992 | 649.729 |

| 182 | 200.5365 | 165.8917 | 433 | 460.9031 | 407.5251 | 683 | 717.7237 | 650.7045 |

| 183 | 201.5839 | 166.8443 | 434 | 461.9338 | 408.4944 | 684 | 718.7482 | 651.68 |

| 184 | 202.6311 | 167.7971 | 435 | 462.9646 | 409.4637 | 685 | 719.7727 | 652.6555 |

| 185 | 203.6781 | 168.7501 | 436 | 463.9952 | 410.433 | 686 | 720.7972 | 653.6311 |

| 186 | 204.7251 | 169.7031 | 437 | 465.0259 | 411.4023 | 687 | 721.8216 | 654.6066 |

| 187 | 205.7719 | 170.6563 | 438 | 466.0565 | 412.3717 | 688 | 722.8461 | 655.5822 |

| 188 | 206.8186 | 171.6096 | 439 | 467.0871 | 413.3412 | 689 | 723.8705 | 656.5578 |

| 189 | 207.8652 | 172.563 | 440 | 468.1176 | 414.3106 | 690 | 724.8949 | 657.5334 |

| 190 | 208.9117 | 173.5165 | 441 | 469.1481 | 415.2801 | 691 | 725.9192 | 658.509 |

| 191 | 209.958 | 174.4702 | 442 | 470.1786 | 416.2496 | 692 | 726.9436 | 659.4847 |

| 192 | 211.0043 | 175.4239 | 443 | 471.209 | 417.2192 | 693 | 727.9679 | 660.4603 |

| 193 | 212.0504 | 176.3778 | 444 | 472.2394 | 418.1888 | 694 | 728.9922 | 661.436 |

| 194 | 213.0963 | 177.3319 | 445 | 473.2698 | 419.1584 | 695 | 730.0165 | 662.4117 |

| 195 | 214.1422 | 178.286 | 446 | 474.3001 | 420.1281 | 696 | 731.0408 | 663.3874 |

| 196 | 215.1879 | 179.2403 | 447 | 475.3304 | 421.0978 | 697 | 732.0651 | 664.3631 |

| 197 | 216.2336 | 180.1946 | 448 | 476.3607 | 422.0675 | 698 | 733.0893 | 665.3389 |

| 198 | 217.2791 | 181.1491 | 449 | 477.3909 | 423.0373 | 699 | 734.1136 | 666.3147 |

| 199 | 218.3245 | 182.1037 | 450 | 478.4211 | 424.0071 | 700 | 735.1378 | 667.2904 |

| 200 | 219.3698 | 183.0584 | 451 | 479.4513 | 424.9769 | 701 | 736.162 | 668.2662 |

| 201 | 220.415 | 184.0133 | 452 | 480.4814 | 425.9468 | 702 | 737.1862 | 669.2421 |

| 202 | 221.46 | 184.9682 | 453 | 481.5115 | 426.9167 | 703 | 738.2103 | 670.2179 |

| 203 | 222.505 | 185.9232 | 454 | 482.5416 | 427.8866 | 704 | 739.2345 | 671.1938 |

| 204 | 223.5498 | 186.8784 | 455 | 483.5716 | 428.8566 | 705 | 740.2586 | 672.1696 |

| 205 | 224.5945 | 187.8337 | 456 | 484.6016 | 429.8266 | 706 | 741.2827 | 673.1455 |

| 206 | 225.6392 | 188.789 | 457 | 485.6316 | 430.7966 | 707 | 742.3068 | 674.1214 |

| 207 | 226.6837 | 189.7445 | 458 | 486.6615 | 431.7667 | 708 | 743.3309 | 675.0973 |

| 208 | 227.7281 | 190.7001 | 459 | 487.6914 | 432.7368 | 709 | 744.355 | 676.0733 |

| 209 | 228.7724 | 191.6558 | 460 | 488.7213 | 433.7069 | 710 | 745.379 | 677.0492 |

| 210 | 229.8166 | 192.6116 | 461 | 489.7511 | 434.6771 | 711 | 746.403 | 678.0252 |

| 211 | 230.8607 | 193.5675 | 462 | 490.781 | 435.6473 | 712 | 747.427 | 679.0012 |

| 212 | 231.9047 | 194.5235 | 463 | 491.8107 | 436.6175 | 713 | 748.451 | 679.9772 |

| 213 | 232.9485 | 195.4797 | 464 | 492.8405 | 437.5878 | 714 | 749.475 | 680.9532 |

| 214 | 233.9923 | 196.4359 | 465 | 493.8702 | 438.5581 | 715 | 750.499 | 681.9293 |

| 215 | 235.036 | 197.3922 | 466 | 494.8999 | 439.5284 | 716 | 751.5229 | 682.9053 |

| 216 | 236.0796 | 198.3486 | 467 | 495.9295 | 440.4987 | 717 | 752.5468 | 683.8814 |

| 217 | 237.1231 | 199.3051 | 468 | 496.9591 | 441.4691 | 718 | 753.5708 | 684.8575 |

| 218 | 238.1664 | 200.2618 | 469 | 497.9887 | 442.4395 | 719 | 754.5946 | 685.8336 |

| 219 | 239.2097 | 201.2185 | 470 | 499.0182 | 443.41 | 720 | 755.6185 | 686.8097 |

| 220 | 240.2529 | 202.1753 | 471 | 500.0478 | 444.3805 | 721 | 756.6424 | 687.7859 |

| 221 | 241.296 | 203.1322 | 472 | 501.0773 | 445.351 | 722 | 757.6662 | 688.762 |

| 222 | 242.339 | 204.0892 | 473 | 502.1067 | 446.3215 | 723 | 758.6901 | 689.7382 |

| 223 | 243.3819 | 205.0463 | 474 | 503.1361 | 447.2921 | 724 | 759.7139 | 690.7144 |

| 224 | 244.4247 | 206.0035 | 475 | 504.1655 | 448.2627 | 725 | 760.7377 | 691.6906 |

| 225 | 245.4674 | 206.9608 | 476 | 505.1949 | 449.2333 | 726 | 761.7614 | 692.6668 |

| 226 | 246.51 | 207.9182 | 477 | 506.2242 | 450.204 | 727 | 762.7852 | 693.643 |

| 227 | 247.5525 | 208.8757 | 478 | 507.2535 | 451.1747 | 728 | 763.8089 | 694.6193 |

| 228 | 248.5949 | 209.8333 | 479 | 508.2828 | 452.1454 | 729 | 764.8327 | 695.5956 |

| 229 | 249.6372 | 210.791 | 480 | 509.312 | 453.1162 | 730 | 765.8564 | 696.5718 |

| 230 | 250.6795 | 211.7488 | 481 | 510.3413 | 454.087 | 731 | 766.8801 | 697.5482 |

| 231 | 251.7216 | 212.7066 | 482 | 511.3704 | 455.0578 | 732 | 767.9038 | 698.5245 |

| 232 | 252.7636 | 213.6646 | 483 | 512.3996 | 456.0287 | 733 | 768.9274 | 699.5008 |

| 233 | 253.8056 | 214.6226 | 484 | 513.4287 | 456.9995 | 734 | 769.9511 | 700.4772 |

| 234 | 254.8475 | 215.5807 | 485 | 514.4578 | 457.9704 | 735 | 770.9747 | 701.4535 |

| 235 | 255.8893 | 216.539 | 486 | 515.4869 | 458.9414 | 736 | 771.9983 | 702.4299 |

| 236 | 256.931 | 217.4973 | 487 | 516.5159 | 459.9123 | 737 | 773.0219 | 703.4063 |

| 237 | 257.9726 | 218.4557 | 488 | 517.5449 | 460.8833 | 738 | 774.0455 | 704.3827 |

| 238 | 259.0141 | 219.4141 | 489 | 518.5739 | 461.8544 | 739 | 775.0691 | 705.3592 |

| 239 | 260.0555 | 220.3727 | 490 | 519.6028 | 462.8254 | 740 | 776.0926 | 706.3356 |

| 240 | 261.0969 | 221.3314 | 491 | 520.6317 | 463.7965 | 741 | 777.1162 | 707.3121 |

| 241 | 262.1381 | 222.2901 | 492 | 521.6606 | 464.7676 | 742 | 778.1397 | 708.2885 |

| 242 | 263.1793 | 223.2489 | 493 | 522.6894 | 465.7388 | 743 | 779.1632 | 709.265 |

| 243 | 264.2204 | 224.2078 | 494 | 523.7183 | 466.71 | 744 | 780.1867 | 710.2416 |

| 244 | 265.2614 | 225.1668 | 495 | 524.7471 | 467.6812 | 745 | 781.2102 | 711.2181 |

| 245 | 266.3023 | 226.1259 | 496 | 525.7758 | 468.6524 | 746 | 782.2336 | 712.1946 |

| 246 | 267.3431 | 227.0851 | 497 | 526.8046 | 469.6237 | 747 | 783.2571 | 713.1712 |

| 247 | 268.3839 | 228.0443 | 498 | 527.8333 | 470.595 | 748 | 784.2805 | 714.1478 |

| 248 | 269.4246 | 229.0037 | 499 | 528.862 | 471.5663 | 749 | 785.3039 | 715.1243 |

| 249 | 270.4652 | 229.9631 | 500 | 529.8906 | 472.5376 | 750 | 786.3273 | 716.101 |

Table 5-2 Plot of values from Table 5-1

5.3.3 Reliability

All tests executed during conformity assessment SHALL be considered "pertinent" for assessment of reliability, with the following exceptions:

- Tests in which failures are forced;

- Tests in which portions of the system that would be exercised during an actual election are bypassed (see Part 3: 2.5.3 “Test fixtures”).

Applies to: Voting system

The test lab SHALL record the number of failures and the applicable measure of volume for each pertinent test execution, for each type of device, and for each applicable failure type in Part 1: Table 6-3 (Part 1: 6.3.1.5 “Requirements”).

Applies to: Voting device

DISCUSSION

"Type of device" refers to the different models produced by the manufacturer. These are not the same as device classes. The system may include several different models of the same class, and a given model may belong to more than one class.

When operational testing is complete, the test lab SHALL calculate the failure total and total volume accumulated across all pertinent tests for each type of device and failure type. If, using the test method in Part 3: 5.3.1 “General method”, these values indicate rejection of the null hypothesis for any type of device and type of failure, the verdict on conformity to Requirement Part 1: 6.3.1.5-A SHALL be Fail. Otherwise, the verdict SHALL be Pass.

Applies to: Voting device
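The bookkeeping in the three reliability requirements above can be sketched in Python as follows. This is a minimal, non-normative sketch. The `ExecutionRecord` structure, its field names, and the `rejects_null` callback are hypothetical. The callback stands in for the test method of Part 3: 5.3.1 “General method”, which is not reproduced here. Each record holds the failures of one failure type observed on one type of device (manufacturer model, not device class) during one test execution.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Tuple


@dataclass(frozen=True)
class ExecutionRecord:
    # Hypothetical record: one test execution, one device type, one failure type.
    device_type: str              # manufacturer model, not device class
    failure_type: str             # a failure type from Part 1: Table 6-3
    failures: int                 # number of failures observed
    volume: float                 # applicable measure of volume
    failures_forced: bool = False  # test deliberately forced failures
    bypasses_system: bool = False  # test fixture bypassed election-time portions


def is_pertinent(rec: ExecutionRecord) -> bool:
    # Excludes tests with forced failures or bypassed portions of the system.
    return not (rec.failures_forced or rec.bypasses_system)


def accumulate(
    records: Iterable[ExecutionRecord],
) -> Dict[Tuple[str, str], Tuple[int, float]]:
    # Failure total and total volume per (device type, failure type).
    totals = defaultdict(lambda: [0, 0.0])
    for rec in records:
        if is_pertinent(rec):
            entry = totals[(rec.device_type, rec.failure_type)]
            entry[0] += rec.failures
            entry[1] += rec.volume
    return {key: (f, v) for key, (f, v) in totals.items()}


def reliability_verdict(
    records: Iterable[ExecutionRecord],
    rejects_null: Callable[[int, float], bool],  # hypothetical stand-in for 5.3.1
) -> str:
    # Fail if the null hypothesis is rejected for any device type and failure type.
    for failures, volume in accumulate(records).values():
        if rejects_null(failures, volume):
            return "Fail"
    return "Pass"
```

Keeping one accumulator per (device type, failure type) pair mirrors the requirement that the verdict be Fail if rejection occurs for any single combination.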

5.3.4 Accuracy

The informal concept of voting system accuracy is formalized as the error rate: the ratio of the number of errors that occur to the volume of data processed.

All tests executed during conformity assessment SHALL be considered "pertinent" for assessment of accuracy, with the following exceptions:

- Tests in which errors are forced;

- Tests in which portions of the system that would be exercised during an actual election are bypassed (see Part 3: 2.5.3 “Test fixtures”).

Applies to: Voting system

Given a set of vote data reports resulting from the execution of tests, the observed cumulative report total error rate SHALL be calculated as follows:

- Define a "report item" as any one of the numeric values (totals or counts) that must appear in any of the vote data reports. Each ballot count; each vote, overvote, and undervote total for each contest; and each vote total for each contest choice in each contest is a separate report item. The required report items are detailed in Part 1: 7.8.3 “Vote data reports”;

- For each report item, compute the "report item error" as the absolute value of the difference between the correct value and the reported value. Special cases: If a value is reported that should not have appeared at all (spurious item), or if an item that should have appeared in the report does not (missing item), assess a report item error of one. Additional values that are reported as a manufacturer extension to the standard are not considered spurious items;

- Compute the "report total error" as the sum of all of the report item errors from all of the reports;

- Compute the "report total volume" as the sum of all of the correct values for all of the report items that are supposed to appear in the reports. Special cases: When the same logical contest appears multiple times (e.g., when results are reported for each ballot configuration and then combined or when reports are generated for multiple reporting contexts), each manifestation of the logical contest is considered a separate contest with its own correct vote totals in this computation;

- Compute the observed cumulative report total error rate as the ratio of the report total error to the report total volume. Special cases: If both values are zero, the report total error rate is zero. If the report total volume is zero but the report total error is not, the report total error rate is infinite.

Applies to: Voting system

Source: Revision of [GPO90] F.6
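The calculation above can be illustrated with a short, non-normative Python sketch. It assumes, hypothetically, that each vote data report is represented as a mapping from a report-item key (for example, a `(contest, contest choice)` tuple) to its numeric value, paired with a mapping of the correct values. The `extensions` parameter is a hypothetical way of listing manufacturer-extension items that are not counted as spurious. Because each correct/reported pair is scored independently, a logical contest that appears in several reports contributes a separate set of report items for each manifestation.

```python
import math
from typing import AbstractSet, Iterable, Mapping, Tuple

# Hypothetical representation: report-item key -> numeric value.
Key = Tuple[str, ...]
Report = Mapping[Key, int]


def report_item_errors(
    correct: Report, reported: Report, extensions: AbstractSet[Key] = frozenset()
) -> int:
    """Sum of report item errors for one report."""
    error = 0
    for key, value in correct.items():
        if key in reported:
            error += abs(value - reported[key])  # |correct - reported|
        else:
            error += 1  # missing item
    for key in reported:
        if key not in correct and key not in extensions:
            error += 1  # spurious item (manufacturer extensions excluded)
    return error


def report_total_error_rate(
    pairs: Iterable[Tuple[Report, Report]],
    extensions: AbstractSet[Key] = frozenset(),
) -> float:
    """Observed cumulative report total error rate over (correct, reported) pairs."""
    total_error = 0
    total_volume = 0
    for correct, reported in pairs:
        total_error += report_item_errors(correct, reported, extensions)
        total_volume += sum(correct.values())  # report total volume
    if total_volume == 0:
        # Special cases: 0/0 is zero; nonzero error over zero volume is infinite.
        return 0.0 if total_error == 0 else math.inf
    return total_error / total_volume
```

For instance, a report that understates one candidate's total by one vote, omits a required undervote total, and adds one unexpected item accrues a report total error of three.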

The test lab SHALL record the report total error and report total volume for each pertinent test execution.

Applies to: Voting system

DISCUSSION

Accuracy is calculated as a system-level metric, not separated by device type.

When operational testing is complete, the test lab SHALL calculate the report total error and report total volume accumulated across all pertinent tests. If, using the test method in Part 3: 5.3.1 “General method”, these values indicate rejection of the null hypothesis, the verdict on conformity to Requirement Part 1: 6.3.2-B SHALL be Fail. Otherwise, the verdict SHALL be Pass.

Applies to: Voting system

5.3.5 Misfeed rate

This benchmark applies only to paper-based tabulators and EBMs. Multiple feeds, misfeeds (jams), and rejections of ballots that meet all manufacturer specifications are all treated collectively as "misfeeds" for benchmarking purposes (i.e., only a single count is maintained).

All tests executed during conformity assessment SHALL be considered "pertinent" for assessment of misfeed rate, with the following exceptions:

- Tests in which misfeeds are forced.

Applies to: Voting system

For paper-based tabulators and EBMs, the observed cumulative misfeed rate SHALL be calculated as follows: