Monitoring of MTI Production and Usage

DCMS Indexer Recommendation Assessment Experiment

MTI User-Centered Evaluation (TBD)

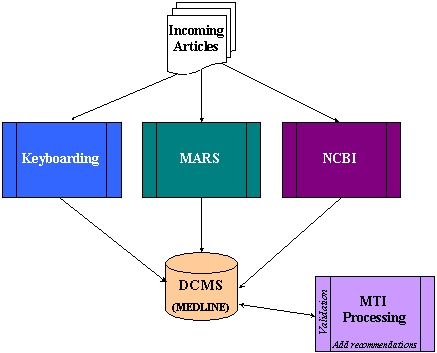

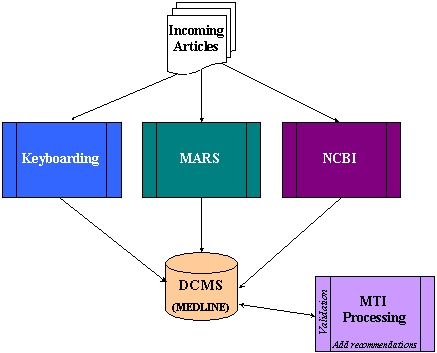

MARS & MTI Test Bed

Last Modified: October 09, 2007 | ii-public

Lister Hill National Center for Biomedical Communications

U.S. National Library of Medicine

National Institutes of Health

Department of Health and Human Services