Oct. 26, 2006


New Hybrid Microscope Probes Nano-Electronics


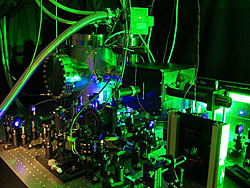

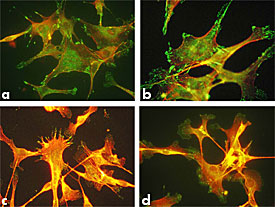

JILA's scanning photoionization microscope (SPIM) includes an optical microscope (in vacuum chamber, background) and an ultrafast laser (appears as blue, foreground). A false color SPIM image (b) reveals the same physical structure of a gold pattern on glass as an atomic force microscope image (a), but the high intensity regions in the SPIM image indicate that electron ejection is much more efficient at metal edge discontinuities.

Credit: O.L.A. Monti, T.A. Baker, and D.J. Nesbitt/JILA


A new form of scanning microscopy that simultaneously reveals physical and electronic profiles of metal nanostructures has been demonstrated at JILA, a joint institute of the National Institute of Standards and Technology (NIST) and University of Colorado at Boulder. The new instrument is expected to be particularly useful for analyzing the make-up and properties of nanoscale electronics and nanoparticles.

Scanning photoionization microscopy (SPIM), described in a new paper,* combines the high spatial resolution of optical microscopy with the high sensitivity to subtle electrical activity made possible by detecting the low-energy electrons emitted by a material as it is illuminated with laser pulses. The technique potentially could be used to make pictures of both electronic and physical patterns in devices such as nanostructured transistors or electrode sensors, or to identify chemicals or even elements in such structures.

“You make images by virtue of how readily electrons are photoejected from a material,” says NIST Fellow David Nesbitt, leader of the research group. “The method is in its infancy, but nevertheless it really does have the power to provide a new set of eyes for looking at nanostructured metals and semiconductors.”

The JILA-built apparatus includes a moving optical microscopy stage in a vacuum, an ultrafast near-ultraviolet laser beam that provides sufficient peak power to inject two photons (particles of light) into a metal at virtually the same time, and equipment for measuring the numbers and energy of electrons ejected from the material. By comparing SPIM images of nanostructured gold films to scans using atomic force microscopy, which profiles surface topography, the researchers confirmed the correlations and physical mapping accuracy of the new technique. They also determined that lines in SPIM images correspond to spikes in electron energy, or current, and that contrast depends on the depth of electrons escaping from the metal as well as variations in material thickness.

Work is continuing to further develop the method, which may be able to make chemically specific images, for example, if the lasers are tuned to different colors to affect only one type of molecule at a time. The research is supported by the Air Force Office of Scientific Research and National Science Foundation.

* O.L.A. Monti, T.A. Baker and D.J. Nesbitt. 2006. Imaging nanostructures with scanning photoionization microscopy. Journal of Chemical Physics, October 21.

Media Contact:

Laura Ost, laura.ost@nist.gov, (301) 975-4034

Finding the Right Mix: A Biomaterial Blend Library


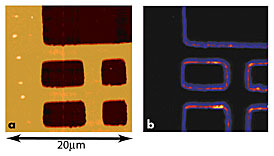

Series of microscopic images shows the influence of biomaterial blends on cell shape and adhesion properties. Images show the changes in actin (red), a structural protein related to cell shape, and vinculin (green), a protein used in cell adhesion for pure and blended samples of poly(DTE carbonate) and poly(DTO carbonate). (a) Pure DTE, (b) 50/50 DTE/DTO, (c) 30/70 DTE/DTO, (d) Pure DTO

Credit: NIST


From dental implants to hip replacements, biomaterials have become big business. But scientists pursuing this modern medical revolution share a basic challenge: biocompatibility. How will a biomaterial on the lab bench actually work inside the human body? Will a patient accept the new material or suffer an inflammatory response? And can that material survive in a human's complex system?

To tackle such questions, researchers at the National Institute of Standards and Technology (NIST) and the New Jersey Center for Biomaterials (NJCB) at Rutgers University have developed new methods to analyze the interactions between cells and biomaterials. Their work could lead to inexpensive techniques for building better biomaterials.

Polymers derived from the amino acid tyrosine make up a broad class of degradable biomaterials under investigation. Such materials provide a temporary scaffold for cells to grow and tissue to regenerate. In a 2006 study* presented at the national meeting of the American Chemical Society in September, the researchers analyzed how two types of model cells (immune cells known as macrophages and bone cells known as osteoblasts) responded to changes in the composition of thin films made of these tyrosine-derived polymers. In practice, many biomaterials are made from blends of polymers to achieve specific material properties, and optimizing the blend composition is often a difficult and time-consuming task. As the blends gained a higher or lower proportion of one polymer, the cells around them reacted by changing shape, ultimately increasing or decreasing their contact with the films. In the body, such cell-material dynamics are critically important to the outcome, determining, for example, whether a biomaterial leads to inflammation or abnormal cell growth.

The new study represents an innovative line of research. Working with NJCB, NIST scientists have developed a method for constructing “scaffold libraries,” collections of biomaterial scaffolds made from controlled polymer blend compositions. The library currently contains scaffolds made from blends of poly(DTE carbonate) and poly(DTO carbonate). Ultimately, study co-author M.L. Becker says, the goal is to develop rapid, inexpensive methods to predict the behavior in the body of any of thousands of possible tyrosine-derived blends.

The work is funded in part by a National Institutes of Health grant.

* L.O. Bailey, M.L. Becker et al. Cellular responses to phase-separated blends of tyrosine-derived polycarbonates. Journal of Biomedical Materials Research Part A. March 1, 2006.

Media Contact:

Mark Bello, mark.bello@nist.gov, (301) 975-3776

Novel Atom Refining Boosts Entanglement of Atom Pairs

Physicists at the National Institute of Standards and Technology (NIST) have demonstrated a method for “refining” entangled atom pairs (a process called purification) so they can be more useful in quantum computers and communications systems. The advance is a significant step toward transforming quantum entanglement—an atomic-scale phenomenon described by Albert Einstein as “spooky action at a distance”—into a practical tool for applications such as “unbreakable” data encryption.

The NIST work, reported in the Oct. 19, 2006, issue of Nature,* marks the first time atoms have been both entangled and subsequently purified; previously, this process had been carried out only with entangled photons (particles of light). This also is the first time that scientists have been able to purify particles non-destructively. Since direct measurement destroys the entangled state under quantum rules, the experiment avoids this by entangling two pairs of atoms and measuring only one pair.

Entanglement is a curious property of quantum physics that links the condition and behavior of two or more particles, such as atoms or photons. Entanglement could have many uses in large quantum computers and networks. For example, it is required for “teleportation” of information (www.nist.gov/public_affairs/releases/teleportation.htm), a process that could be used to rapidly transfer data between separate locations in a quantum computer, or to detect and correct minor operational errors (www.nist.gov/public_affairs/releases/quantum_computers.htm). Entangled photons are used in various forms of quantum cryptography, and are the clear choice for long-distance communication.

The quality of the entanglement can be degraded by many environmental factors, such as fluctuating magnetic fields, so the process and the transport of entangled particles need to be tightly controlled in technological applications. The purification process implemented at NIST can clean up or remove any distortions or “noise” regardless of the source by processing two or more noisy entangled pairs to obtain one entangled pair of higher purity.

Funding for the research was provided in part by the Disruptive Technology Office, an agency of the U.S. intelligence community that funds unclassified research on information systems. For more details, see www.nist.gov/public_affairs/releases/purification.html.

* R. Reichle, D. Leibfried, E. Knill, J. Britton, R.B. Blakestad, J.D. Jost, C. Langer, R. Ozeri, S. Seidelin and D.J. Wineland. Experimental purification of two-atom entanglement. Nature. Oct. 19, 2006.

Media Contact:

Laura Ost, laura.ost@nist.gov, (301) 975-4034

Turning a Nuclear Spotlight on Illegal Weapons Material

Researchers at the National Institute of Standards and Technology (NIST) and Oak Ridge National Laboratory (ORNL) have demonstrated that they can cheaply, quickly and accurately identify even subnanogram amounts of weapon-grade plutonium and uranium. Their work was presented in September at the national meeting of the American Chemical Society.*

Worldwide, most nuclear facilities generate electricity or produce neutrons for peaceful research—but they also can create materials for nuclear weapons. International inspectors routinely tour such facilities, taking cloth wipe samples of equipment surfaces for forensic analysis of any potential weapon-grade materials in suspicious locations. In particular, they search for specific uranium or plutonium isotopes capable of setting off a nuclear explosion.

NIST chemists working at the NIST Center for Neutron Research have applied a highly sensitive technique called delayed neutron activation analysis to improve such efforts, the NIST and ORNL researchers report. The center includes a specially designed research neutron source, which bathes material samples with low-energy neutrons. Next, those samples rapidly go into a barrel-shaped instrument, embedded with neutron detectors, which precisely count the neutrons emitted over a short period of time. The neutron count acts as a unique signature of special nuclear material. In their study, the scientists used this technique to successfully identify trace amounts of uranium-235 and plutonium-239 in less than three minutes.

“We’re emphasizing the technique now because world events have made it more critical to detect traces of nuclear materials, which is technically very challenging,” says analytical chemist Richard Lindstrom, co-author of the ACS presentation. This tool also complements a variety of other sophisticated methods used by NIST researchers working on homeland security.

The low detection levels are due in part to the use of the NIST neutron source, which is particularly well designed for this task. The technique can detect weapon-grade material just four microns in diameter, less than a tenth the width of a human hair. The technique could be used to find subtle, lingering radioactive material in samples taken during inspection of trucks or cargo shipping containers, for instance. Beyond forensics, NIST uses the technique for measurements of isotopes in research and for industrial projects. The team is now working to automate the counting instrument and simplify its operation so that it can rapidly handle large batches of samples.

*R. M. Lindstrom, D.C. Glasgow and R.G. Downing. Trace fissile measurement by delayed neutron activation analysis at NIST. Presented at the 232nd ACS National Meeting, San Francisco, Calif., Sept 10, 2006.

Media Contact:

Michael Baum, michael.baum@nist.gov, (301) 975-2763

New Web-based System Leads to Better, More Timely Data

After two years of work, an innovative project using Web-based technologies to speed researcher access to a large body of new scientific data has demonstrated marked improvements not only in access to the data but also in its quality. A new paper* on the Web-enabled ThermoML thermodynamics global data exchange standard notes that the data-entry process catches and corrects data errors in roughly 10 percent of journal articles entered in the system.

A landmark partnership between the National Institute of Standards and Technology (NIST), several major scientific journals and the International Union of Pure and Applied Chemistry (IUPAC), ThermoML was developed to deal with the explosive growth in published data on thermodynamics. Thermodynamics is essential to understanding and designing chemical reactions in everything from huge industrial chemical plants to the biochemistry of individual cells in the body. With improvements in measurement technology, the quantity of published thermophysical and thermochemical data has been almost doubling every 10 years.
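As a rough illustration of that growth rate (a back-of-the-envelope figure, not one taken from the paper), a quantity that doubles every 10 years grows each year by a constant factor $r$ satisfying:

$$
r^{10} = 2 \quad\Longrightarrow\quad r = 2^{1/10} \approx 1.072
$$

That is about 7 percent more published data each year. "Almost doubling" implies a rate slightly below this.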

This vast flood of information not only presents a basic problem for researchers and engineers (how to find the data they need when they need it) but also has strained the traditional scientific peer-review and validation process. “Despite the peer-review process, problems in data validation have led, in many instances, to publication of data that are grossly erroneous and, at times, inconsistent with the fundamental laws of nature,” the authors note.

The ThermoML project began as an attempt to simplify and speed the delivery of new thermodynamic data from producers to users. The system has three major components:

- ThermoML itself, an IUPAC data format standard based on XML (a generic data formatting standard) customized for storing thermodynamic data;

- Software tools developed at the NIST Thermodynamic Research Center (TRC) to simplify entering data into the system in formats close to those used by the original journal documents, displaying it in various formats and performing basic data integrity checks; and

- The ThermoData Engine, a sophisticated expert system developed at NIST, that can generate on demand recommended, evaluated data based on the existing experimental and predicted data and their uncertainties.
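The kind of "basic data integrity check" mentioned above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the XML tag names (`dataset`, `measurement`, `temperature_K`, and so on) are hypothetical and are not the ThermoML schema, and the checks shown are not those performed by the NIST TRC software.

```python
import xml.etree.ElementTree as ET

# Hypothetical record layout for illustration only; NOT the ThermoML schema.
SAMPLE = """
<dataset>
  <measurement id="1">
    <temperature_K>298.15</temperature_K>
    <pressure_kPa>101.325</pressure_kPa>
    <density_kg_m3>997.05</density_kg_m3>
  </measurement>
  <measurement id="2">
    <temperature_K>-5.0</temperature_K>
    <pressure_kPa>101.325</pressure_kPa>
    <density_kg_m3>999.9</density_kg_m3>
  </measurement>
  <measurement id="3">
    <temperature_K>310.0</temperature_K>
    <pressure_kPa>101.325</pressure_kPa>
  </measurement>
</dataset>
"""

# Fields that must be present and strictly positive (assumed rule set).
REQUIRED_POSITIVE = ("temperature_K", "pressure_kPa", "density_kg_m3")


def check_dataset(xml_text):
    """Return a list of (measurement id, problem description) tuples."""
    problems = []
    root = ET.fromstring(xml_text)
    for m in root.findall("measurement"):
        mid = m.get("id", "?")
        for field in REQUIRED_POSITIVE:
            node = m.find(field)
            if node is None or node.text is None:
                problems.append((mid, f"missing {field}"))
                continue
            try:
                value = float(node.text)
            except ValueError:
                problems.append((mid, f"non-numeric {field}"))
                continue
            if value <= 0:
                problems.append((mid, f"non-positive {field}"))
    return problems


if __name__ == "__main__":
    for mid, problem in check_dataset(SAMPLE):
        print(f"measurement {mid}: {problem}")
```

In this sketch, a negative absolute temperature and a missing density value are flagged so they could be reported back to the data's author, mirroring in miniature the verification loop the article describes.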

Authors writing for the five major journals that are partners in the program (the Journal of Chemical and Engineering Data, the Journal of Chemical Thermodynamics, Fluid Phase Equilibria, Thermochimica Acta, and the International Journal of Thermophysics) participate in the process by submitting the data for their articles using GDC software (available from NIST). The data are evaluated, and any potential inconsistencies are reported back to the authors for verification. Based on two years of experience and some 1,000 articles, the authors write, an estimated 10 percent of articles reporting experimental thermodynamic data for organic compounds contain some erroneous information that would be “extremely difficult” to detect through the normal peer-review process.

More information on ThermoML can be found at http://trc.nist.gov/ThermoML.html.

* M. Frenkel et al. New global communication process in thermodynamics: impact on quality of published experimental data. J. Chem. Inf. Model. ASAP Article. Web Release Date: October 11, 2006. http://pubs.acs.org/cgi-bin/abstract.cgi/jceaax/2003/48/i01/abs/je025645o.html

Media Contact:

Michael Baum, michael.baum@nist.gov, (301) 975-2763

Quick Links

O’Brian Named Director of NIST Boulder Laboratories

Thomas R. O’Brian, chief of the Time and Frequency Division at the National Institute of Standards and Technology (NIST), has been appointed director of the NIST Boulder Laboratories. As NIST Boulder Director, O’Brian is the senior site manager with oversight of the facilities and technical infrastructure for the agency’s 400 scientists, engineers, technicians and support staff. The NIST Boulder Laboratories are engaged in a wide range of research activities in measurement science, including time and frequency standards; electromagnetics, including superconductivity and electromagnetic fields; optoelectronics; chemical engineering; materials reliability; laser physics; quantum and optical physics; and computational methods. The labs have strong partnerships with U.S. industry, other government agencies and universities.

O’Brian earned a bachelor’s degree in chemistry from Washburn University in Topeka, Kansas, and a doctorate in experimental atomic physics from the University of Wisconsin in Madison. He joined NIST in 1991 as a National Research Council postdoctoral fellow in the NIST Physics Laboratory in Gaithersburg, Md., conducting research on the interactions of atoms with very strong laser light. O’Brian’s most recent scientific focus involved precision measurements using synchrotron radiation (light from electron accelerators).

Vulnerability Database Hits 20,000 Software-Flaw Mark

Established just a little over a year ago, the National Institute of Standards and Technology's National Vulnerability Database (NVD) now contains information on 20,000 computer system vulnerabilities, up from the original 12,000, and the Web site receives hits at a rate of 25 million per year. For those trying to prevent computer system attacks, keeping up with the hundreds of new vulnerabilities discovered each month can be an overwhelming task, especially since a single flaw can be known by numerous names. The NVD provides standard vulnerability names, integrates all publicly available U.S. government resources on vulnerabilities, provides links to industry resources, and provides standardized vulnerability impact scores using the Common Vulnerability Scoring System. This makes it easier to learn about new vulnerabilities and how to remediate them. NVD is updated daily and can be searched by a variety of vulnerability characteristics, including severity, vendor name, software name and version number. At the request of the software industry, in September 2006 NIST established a forum on the NVD Web site for software vendors to comment on vulnerabilities in their products. NVD is sponsored by the Department of Homeland Security’s US-CERT and is based upon Common Vulnerabilities and Exposures (CVE) work from the MITRE Corporation. For more information, go to http://nvd.nist.gov/.
